Can big data and AI fix our criminal-justice crisis?

America, land of the free. Yeah, right. Tell that to the nearly 7 million people incarcerated in the US prison system. The United States holds the dubious distinction of having the highest per capita incarceration rate of any nation on the planet: 716 inmates per 100,000 people. We lock up more of our own people than Saudi Arabia, Kazakhstan or Russia. And once you’re in, you stay in. A 2005 study by the Bureau of Justice Statistics (BJS) followed 400,000 prisoners in 30 states after their release and found that within just three years, more than two-thirds had been rearrested. That figure rose to over 75 percent by 2010.

Among those who do enter the criminal justice system, a disproportionately high number are people of color. In 2010, the BJS found that 380 of every 100,000 white Americans are incarcerated, while the rate for Latinos is roughly 2.5 times higher (966 per 100,000). For black Americans the figure is a whopping 2,207 per 100,000, nearly six times the rate of their Caucasian counterparts.
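The multiples quoted above follow directly from the three per-100,000 figures. Here is a quick check in Python that uses only the numbers in the paragraph; the variable names are illustrative and not part of any BJS data set.

```python
# Incarceration rates per 100,000, as quoted in the paragraph above.
rates_per_100k = {"white": 380, "Latino": 966, "black": 2207}

# Use the white rate as the baseline for comparison.
baseline = rates_per_100k["white"]

# Ratio of each group's rate to the baseline rate.
ratios = {group: rate / baseline for group, rate in rates_per_100k.items()}

for group, ratio in ratios.items():
    print(f"{group}: {ratio:.2f}x the white rate")
```

The Latino rate works out to about 2.5 times the white rate and the black rate to about 5.8 times, which is where "nearly six times" comes from.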

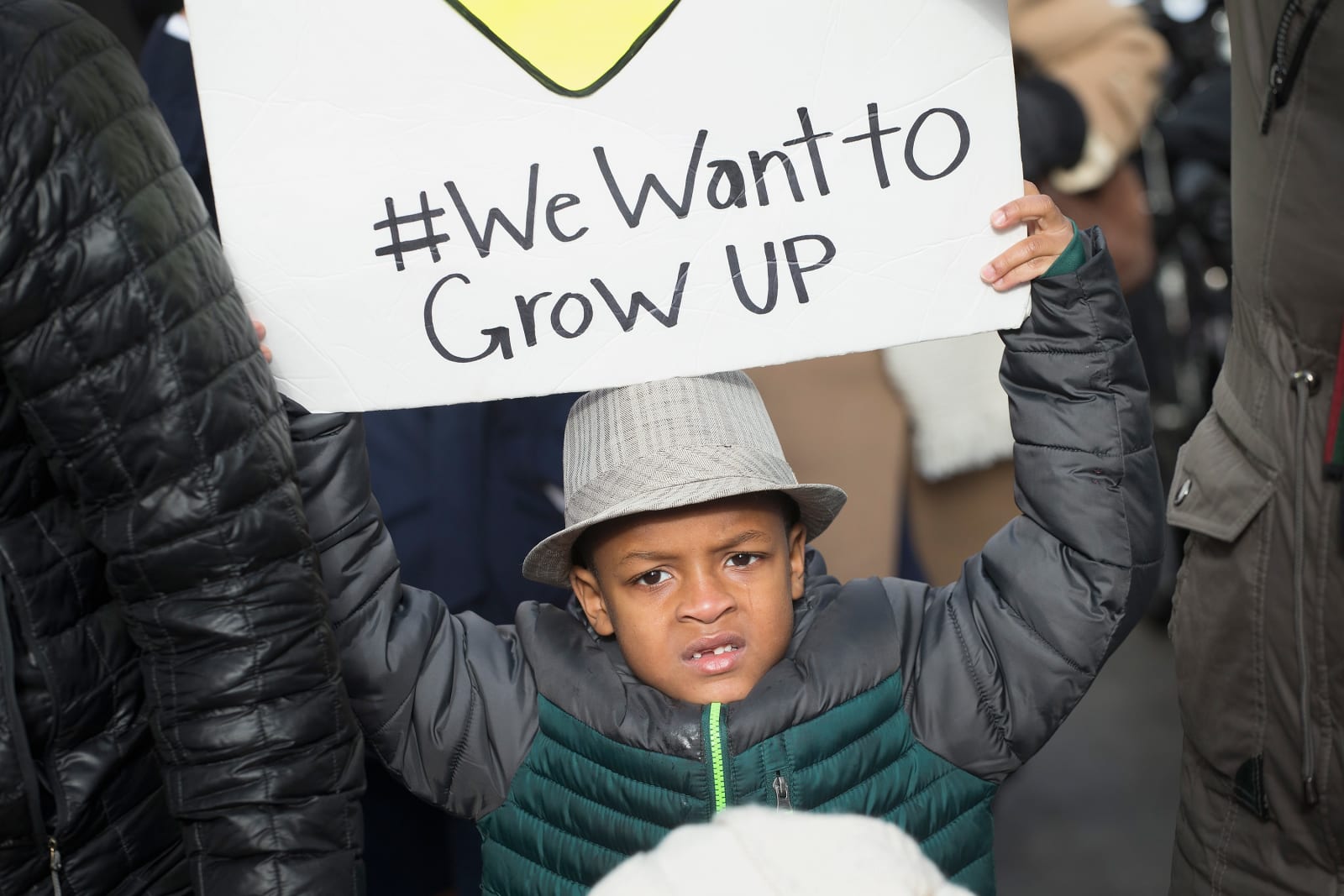

Is it any wonder, then, that America’s minority communities express so little faith in the fairness of US legal institutions? In a nation that incorporated structural racism into its social system for nearly a century, after hundreds of years of slavery, are you really surprised that people of color have historically distrusted the legal system?

Chicago demonstrators calling for an end to gun violence – Getty Images

Change is already happening. Police departments across the country are adopting the mantra “work smarter, not harder” and are leveraging big data to do it. For example, in February 2014, the city of Chicago launched the Custom Notification Program, staging early interventions with people who were most likely to commit (or be the victim of) violent crime but who were not under investigation for such. The city sent police, community leaders and clergy to the person’s house, imploring them to change their ways and offering social services. According to Chicago Mayor Rahm Emanuel, of the 60 people approached so far, not one has since been involved in a felony.

This unique method of community policing used an equally novel method of figuring out which citizens to reach out to first. The Chicago PD turned to big data to analyze “prior arrests, impact of known associates and potential sentencing outcomes for future criminal acts” and generate a “heat list” of people most in need of these interventions. According to the CPD, this analysis is “based on empirical data compared with known associates of the identified person,” though as we’ll see with other big data algorithms, the actual mechanics of how the algorithms place and rank people on these heat lists remain opaque.

President Obama and Philadelphia PD Commissioner Charles Ramsey discuss the Task Force on 21st Century Policing – Corbis/VCG via Getty Images

The Obama administration is also making forays into big-data solutions for law enforcement. In December 2014, President Obama launched the Task Force on 21st Century Policing, a blue-ribbon commission tasked with uncovering the challenges that modern police forces face and figuring out how to grow their standing within the communities they serve.

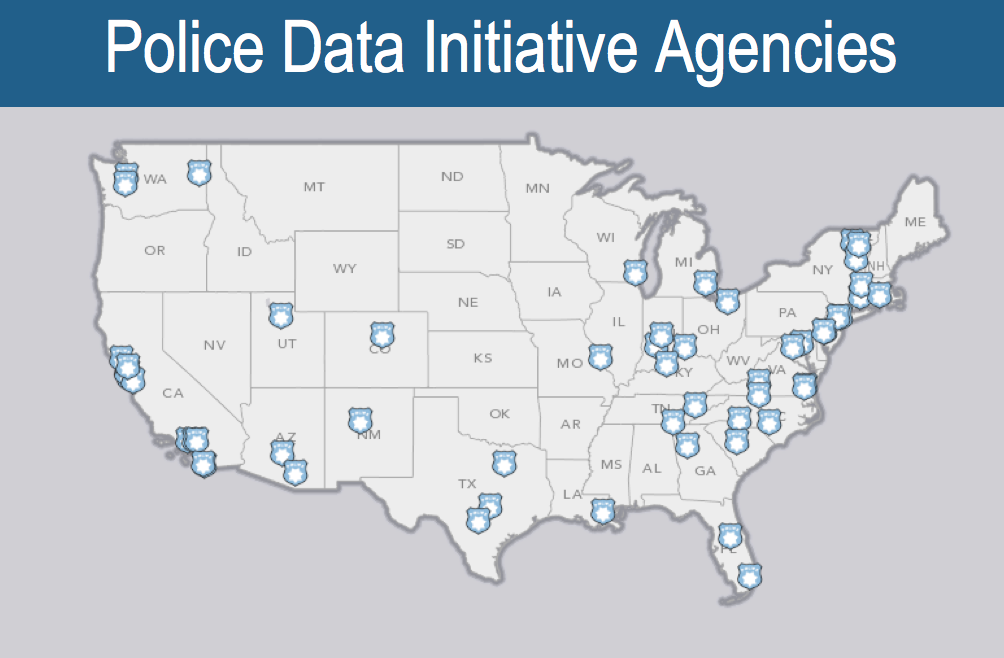

Based on the task force’s recommendations, the administration set forth the Police Data Initiative (PDI) in May 2015. This initiative focuses on using technology to improve how law enforcement handles data transparency and analysis. The goal is to empower local law enforcement with the tools and resources they need to act more transparently and rebuild the trust of the public.

Since the Initiative launched, 61 police departments have released more than 150 data sets for everything from traffic stops and community engagement to officer-involved shootings and body-camera data. All this data is collected by the local departments — neither the White House nor any federal agency collects anything — and then organized for public consumption through open data web portals as well as police department websites.

Both the police and nonprofits around the country have already begun to leverage this information. Code for America and CI Technologies are working to integrate these data sets into the popular IA Pro police-integrity software, which will enable departments to spot troublesome officers sooner. Likewise, North Carolina’s Charlotte-Mecklenburg Police Department (CMPD) has teamed with the Southern Coalition for Social Justice to better map and visualize key policing metrics within the city, making them more accessible to the public. “Local departments have found that this data transparency helps paint a more-complete picture of what policing looks like in their neighborhoods,” Denise Ross, senior policy adviser at the White House and co-lead on the Police Data Initiative, told Engadget, “and helps bring a more authentic, informed conversation to the public.”

This openness couldn’t come at a more urgent time. “I think people are feeling vulnerable in different ways on both sides,” Jennifer Eberhardt, Stanford professor of psychology, told PBS last December. “I mean, you have community members who feel vulnerable around the police. And then there’s a vulnerability on the police side, where, when something happens in Ferguson or anywhere in the country, police departments all over the nation feel it.”

Those feelings of vulnerability have life-and-death consequences. A yearlong study conducted by The Washington Post found that of the nearly 1,000 people killed by police officers in 2015, 40 percent were unarmed black men. Now consider that black men make up just 6 percent of the national population. Granted, of those 1,000 or so people, white men were more likely to be shot while brandishing a gun or threatening the officer. However, a staggering 3 in 5 of those killed in response to benign behavior (“failing to comply” with an officer, as Philando Castile was) were either black or Latino.

Philando Castile’s mother looks at a picture of her son during a press conference at the Minnesota state capitol – Reuters

“The system does not affect people in the same way,” Lynn Overmann of the White House Office of Science and Technology Policy told the Computing Community Consortium in June. “At every stage of our criminal-justice system [black and Latino citizens] are more likely to receive worse outcomes. They are more likely to be stopped, they’re more likely to be searched for evidence, they’re more likely to be arrested, they’re more likely to be convicted and, when convicted, they tend to get longer sentences.”

North Carolina’s CMPD also partnered with the University of Chicago to develop a more effective early intervention system (EIS) that helps departments identify officers most likely to incur adverse interactions with the public. These interactions can include use of force and citizen complaints. The system enables the CMPD to more accurately track at-risk officers while reducing the number of false positives. When implemented, it spotted 75 more high-risk officers than the current system, while incorrectly flagging 180 fewer low-risk officers.

In addition to the data sets released by the Police Data Initiative, many local law enforcement agencies have begun integrating body-worn camera (BWC) data into their training and transparency operations. Body cams are a hot-button issue, especially following Ferguson, but in California, the Oakland Police Department implemented them nearly six years ago. Since their adoption in 2010, the Oakland PD has seen a 72 percent decrease in use-of-force incidents along with a 54 percent drop in citizen complaints.

Unfortunately, managing the large volumes of data that the body-camera system produces (eight terabytes a month from the department’s more than 600 cameras) is not easy. There’s simply too much information to comb through with any reasonable efficiency. That’s why the Oakland police have partnered with Stanford University to automate the video-curation and -review process. That automation can also help the department better train its officers by highlighting both negative and positive interactions to reduce the prevalence of unconscious bias exhibited by officers.

This video data has been put to good use by other organizations as well. A study conducted by Stanford SPARQ (Social Psychological Answers to Real-world Questions) researchers leveraging BWC data (as well as police reports and community surveys) found that Oakland police officers exhibit clear bias when stopping motorists. The report, which was released in June, found that black men were four times more likely to be pulled over than whites, four times more likely to be searched after being stopped and 20 percent more likely to be handcuffed during their stop, even if they were not ultimately arrested.

NYPD officers stopping and frisking individuals – NY Daily News via Getty Images

Among the 50 recommendations made by the study, SPARQ researchers strongly advocated collecting more police-interaction data and regularly using body-camera data to audit and continually train officers to reduce this bias. Paul Figueroa, Oakland’s assistant chief of police, echoed the sentiment in a post on The Police Chief blog. “Video can provide invaluable information about the impact of training on communication techniques,” he wrote. “Significant knowledge is available about verbal and nonverbal communication, and effective communication training on a regular basis is required for California law enforcement officers.” To that end, police cadets in California have long been taught communication and de-escalation techniques, the “tenets of procedural justice,” as ACP Figueroa calls them.

Body cameras are far from the perfect solution, especially at this relatively early stage of use by law enforcement. One of the numerous issues facing the technology is storage: How does a department store all that data? The Seattle PD took a novel approach earlier this year by simply dumping all of its BWC data onto YouTube in the name of transparency, though that isn’t necessarily an ideal (or even scalable) solution.

There are also issues of use requirements — that is, when, where and with whom these cameras should record interactions — and the basic technological limitations of the cameras themselves. The officers involved in the Alton Sterling encounter earlier this year reported that their body cameras “fell off” during the incident. Similarly, a scandal erupted in Chicago earlier this year when evidence came to light indicating officers had intentionally disabled their patrol cars’ dash cameras. And earlier this month, a Chicago police supervisor could be heard telling two officers to turn off their body cameras immediately after the pair fatally shot unarmed teenager Paul O’Neal.

Body-camera footage of the Paul O’Neal shooting – Reuters

These are not isolated incidents. A study by the Leadership Conference on Civil and Human Rights published last Tuesday found that only a small fraction of police departments that have implemented body cameras have also created robust policies regarding their use. For example, only four jurisdictions out of the 50 surveyed have rules in place expressly permitting citizens who file complaints against an officer to see video footage of the incident. Not one of these departments fully prohibits an officer from reviewing video footage before filing an incident report, though many will revoke that right in certain cases.

In addition, half of the departments don’t make this information easily available on their websites. And when states like North Carolina pass obfuscating laws that demand a court order before the police will release body-camera footage, transparency and accountability suffer. That’s the exact opposite of what the Police Data Initiative was designed to address.

Then there are the issues endemic to the data itself. “The era of big data is full of risk,” the White House press staff wrote in a May blog post. That’s because these algorithmic systems are not inherently infallible. They’re tools built by people who rely on imperfect and incomplete data. Whether the algorithm’s designers realize it or not, there’s the chance that they can incorporate bias into the mechanism, which, if not countered, can actually reinforce the discriminatory practices that it’s built to eliminate.

Privacy, of course, is a primary issue — both for the public and the officers themselves. As Samuel Walker, emeritus professor of criminal justice at the University of Nebraska, stated in 2013, “The camera will capture everything within its view and that will include people who are not suspects in the stop. So, will that information be stored? Will it be retained?”

What’s more, who will be in control of it and for how long? Privacy advocates have long warned that centralizing all of these data sets, which previously existed in isolation within their respective private-sector, governmental and academic databases, could shift the balance of power between those who have this data (i.e., the federal government) and the people that data represents (the rest of us).

Oakland PD officer Huy Nguyen wearing a body camera outside of OPD HQ – Reuters

Then there’s the issue of security. It’s not just the fear of data breaches like we’ve seen with the numerous credit card and Social Security scandals over the past few years. There are also previously unseen issues such as the Mosaic Effect, wherein numerous separate sources of anonymized data are inadvertently combined to reveal a person’s identity: the big-data equivalent of the game Guess Who. These sorts of leaks could very well endanger civil protections involving housing, employment or your credit score. Remember that short-lived idea about using your Facebook friends to gauge your FICO score? It could be like that, but using every friend you’ve ever had.
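To make the Mosaic Effect concrete, here is a minimal Python sketch of how two data sets that look harmless on their own can be stitched together. Everything in it is an assumption made for illustration: the records are invented, the field names (`zip`, `birth_year`, `sex`) are hypothetical, and no real police or public data set is structured exactly this way.

```python
# Hypothetical, made-up records; no real people or data sets.
# Data set A: an "anonymized" traffic-stop log with names removed.
stops = [
    {"zip": "94601", "birth_year": 1984, "sex": "M", "stop_reason": "broken taillight"},
    {"zip": "94601", "birth_year": 1990, "sex": "F", "stop_reason": "speeding"},
    {"zip": "94612", "birth_year": 1984, "sex": "M", "stop_reason": "expired tags"},
]

# Data set B: a separate, unrelated roster that does include names.
roster = [
    {"name": "Resident A", "zip": "94601", "birth_year": 1984, "sex": "M"},
    {"name": "Resident B", "zip": "94601", "birth_year": 1990, "sex": "F"},
    {"name": "Resident C", "zip": "94612", "birth_year": 1984, "sex": "M"},
    {"name": "Resident D", "zip": "94612", "birth_year": 1984, "sex": "M"},
]

# Fields that are not names but, taken together, can single someone out.
QUASI_IDENTIFIERS = ("zip", "birth_year", "sex")


def key(record):
    """Build a lookup key from the shared quasi-identifier fields."""
    return tuple(record[field] for field in QUASI_IDENTIFIERS)


def reidentify(anonymized, named):
    """Return (name, stop_reason) pairs where exactly one named person fits."""
    index = {}
    for person in named:
        index.setdefault(key(person), []).append(person["name"])

    matches = []
    for row in anonymized:
        candidates = index.get(key(row), [])
        if len(candidates) == 1:  # a unique match means the row is re-identified
            matches.append((candidates[0], row["stop_reason"]))
    return matches


reidentified = reidentify(stops, roster)
for name, reason in reidentified:
    print(f"{name}: {reason}")
```

In this toy example, two of the three "anonymous" stops can be pinned to a single named person. The third stays ambiguous only because two residents happen to share the same ZIP code, birth year and sex, and each extra data set that gets added makes that kind of cover less likely.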

Finally, these data sets are large and difficult to parse without some form of automation — much like the OPD’s body camera footage. In many cases, these algorithms could replace human experts, at least partially. But who’s to say that these systems are coming up with the correct answer?

For example, a recent Harvard study found that when searching a black individual’s name (e.g., “Trevon Jones” vs. “Chet Manley”) or that of a black fraternity, arrest-record ads popped up far more often. Similarly, Carnegie Mellon University recently found that Google was more likely to show ads for higher-paying executive-level jobs to men than it was to women. It’s not like there’s a team of programmers twisting their mustaches and cackling about sticking it to the minorities and women, but unconscious biases can easily make their way into algorithms or manifest through the way the data is collected, thereby skewing the results.

That’s not ideal when dealing with advertising, but it could be downright dangerous when applied to the criminal-justice system. For example, the Wisconsin Supreme Court just found that the sentencing algorithm COMPAS (Correctional Offender Management Profiling for Alternative Sanctions) does not violate due process. Granted, the algorithm’s function was only one of a number of influencing factors in the court’s decision, but that doesn’t resolve a key issue: Neither the justices nor anyone else outside of the company knows how it actually works. That’s because the algorithm is a proprietary industry secret. How is the public supposed to maintain faith in the criminal-justice system when critical functions like sentencing recommendations are reduced to black-box functionality? This is precisely why transparency and accountability are vital to good governance.

Interior of the Wisconsin State Supreme Court chambers – Daderot / Wikimedia Commons

“The problem is not the AI, it’s the humans,” Jack M. Balkin, Knight professor of constitutional law and the First Amendment at Yale Law School, told attendees at the Artificial Intelligence: Law and Policy workshop in May. “The thing that’s wrong with Skynet is that it’s imagining that there’s nobody behind the robots. It’s just robots taking over the world. That’s not how it will work.” There will always be a man behind the curtain, Balkin argues, one who can and will wield this algorithmic power to subjugate and screw over the rest of the population. It’s not the technology itself that’s the problem — it’s the person at the helm.

Balkin goes on to argue that the solution is surprisingly simple and is based on longstanding legal code. “The idea that I’ve been pushing is that we should regard people who use AI and collect large amounts of data to process for the purpose of making decisions, we should consider them as having a kind of fiduciary duty over the people [on] whom they are imposing these decisions.”

This is just one of many solutions being considered by the White House, which held four such workshops throughout the US in 2016. Broadly, the administration advocates “investing in research, broadening and diversifying technical leadership … bolstering accountability and creating standards for use within both the government and the private sector.” The administration also calls upon academia to commit to the ethical use of data and to instill those values in students and faculty.

For better or worse, body-camera footage and big-data technology are here to stay. Just like fire, automobiles and atom bombs, big data is simply a tool. It’s up to the users to decide whether it will be employed for the good of humanity or its detriment. Yes, big data could be leveraged to segregate and discriminate. It could also be used to finally bring meaningful reform to our criminal-law system, deliver justice to entire cross-sections of American people and maybe even help rebuild some of the trust between the police and those they’re sworn to protect.
