Chipmakers Are Racing To Build Hardware For Artificial Intelligence

In recent years, advanced machine learning techniques have enabled computers to recognize objects in images, understand commands from spoken sentences, and translate written language.

But while consumer products like Apple’s Siri and Google Translate might operate in real time, actually building the complex mathematical models these tools rely on can demand large amounts of time, energy, and processing power from traditional computers. As a result, chipmakers like Intel, graphics powerhouse Nvidia, mobile computing kingpin Qualcomm, and a number of startups are racing to develop specialized hardware to make modern deep learning significantly cheaper and faster.

The importance of such chips for developing and training new AI algorithms quickly cannot be overstated, according to some AI researchers. “Instead of months, it could be days,” Nvidia CEO Jen-Hsun Huang said in a November earnings call, discussing the time required to train a computer to do a new task. “It’s essentially like having a time machine.”

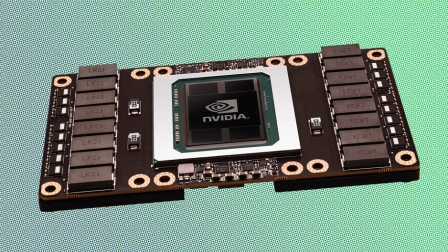

While Nvidia is primarily associated with video cards that help gamers play the latest first-person shooters at the highest resolution possible, the company has also been focusing on adapting its graphics processing unit chips, or GPUs, to serious scientific computation and data center number crunching.

“In the last 10 years, we’ve actually brought our GPU technology outside of graphics, made it more general purpose,” says Ian Buck, vice president and general manager of Nvidia’s accelerated computing business unit.

Rendering video game graphics and other real-time images quickly relies on GPUs that perform particular types of mathematical calculations, such as matrix multiplications, and that handle large numbers of basic computations in parallel. Researchers have found those same characteristics useful for other applications that involve similar math, including running climate simulations and modeling the properties of complex biomolecular structures.

And lately, GPUs have proven adept at training deep neural networks, the mathematical structures loosely modeled on the human brain that are the workhorses of modern machine learning. As it happens, neural networks also rely heavily on repeated parallel matrix calculations.

“Deep learning is peculiar in that way: It requires lots and lots of dense matrix multiplication,” says Naveen Rao, vice president and general manager for artificial intelligence solutions at Intel and founding CEO of Nervana Systems, a machine learning startup acquired by Intel earlier this year. “This is different from a workload that supports a word processor or spreadsheet.”
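To make the “dense matrix multiplication” point concrete, here is a minimal sketch of a single fully connected neural network layer written in plain Python. The function names, the layer sizes, and the weight values are illustrative assumptions, not taken from any real model or chip. The key observation is that each output cell is an independent dot product, which is exactly the kind of work parallel hardware like a GPU can spread across many cores at once.

```python
# A minimal sketch of one fully connected ("dense") neural network layer.
# All names, sizes, and values here are hypothetical and for illustration only.

def matmul(a, b):
    """Multiply an m x k matrix by a k x n matrix (both given as lists of rows)."""
    k = len(b)
    n = len(b[0])
    # Every output cell is an independent dot product, so all m * n of them
    # could in principle be computed at the same time on parallel hardware.
    return [[sum(row[i] * b[i][j] for i in range(k)) for j in range(n)]
            for row in a]

def dense_layer(inputs, weights, biases):
    """Compute relu(inputs x weights + biases) for a batch of inputs."""
    out = matmul(inputs, weights)
    # ReLU: keep positive values, replace negatives with zero.
    return [[max(0.0, v + b) for v, b in zip(row, biases)] for row in out]

def multiply_adds(m, k, n):
    """Number of multiply-add operations in an (m x k) by (k x n) product."""
    return m * k * n

batch = [[1.0, 2.0, 3.0],
         [2.0, 1.0, -1.0]]       # 2 examples, 3 features each
weights = [[0.5, -1.0],
           [0.25, 0.5],
           [-0.5, 0.25]]         # 3 inputs -> 2 outputs
biases = [0.0, 0.5]

print(dense_layer(batch, weights, biases))  # [[0.0, 1.25], [1.75, 0.0]]

# A hypothetical larger layer: a batch of 256 examples, 4,096 inputs, 4,096 outputs.
print(multiply_adds(256, 4096, 4096))       # 4294967296
```

Even this one hypothetical layer, applied to a single batch, needs about 4.3 billion multiply-adds, and training repeats such products many times across many layers. That arithmetic is unlike the workload of a word processor or spreadsheet.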

The similarities between graphics and AI math operations have given Nvidia a head start on its competitors. The company reported that data center revenue more than doubled year over year, to $240 million, in the quarter ending Oct. 31, partly due to deep learning-related demand. Other GPU makers are also likely excited about the new demand for their products, after reports that industrywide GPU sales were declining amid falling desktop computer sales. Nvidia dominates the existing GPU market, with more than 70% market share, and its stock price has nearly tripled in the past year as its chips find new applications.

Graphics Cards Make The AI World Go Round

In 2012, at the annual ImageNet Large Scale Visual Recognition Challenge (a well-known image classification competition), a team used GPU-powered deep learning for the first time, winning the contest and significantly outperforming previous years’ winners. “They got what was stuck at sort of a 70% accuracy range up into the 85% [range],” Buck says.

GPUs have become standard equipment in data centers for companies working on machine learning. Nvidia boasts that its GPUs are used in cloud-based machine learning services offered by Amazon and Microsoft. But Nvidia and other companies are still working on the next generations of chips that they say will be able to both train deep learning systems and use them to process information more efficiently.

Ultimately, the underlying designs of existing GPUs are adapted for graphics, not artificial intelligence, says Nigel Toon, CEO of Graphcore, a machine learning hardware startup with offices in Bristol, in the U.K. GPU limitations lead programmers to structure data in particular ways to most efficiently take advantage of the chips, which Toon says can be hard to do for more complicated data, like sequences of recorded video or speech. Graphcore is developing chips it calls “intelligent processing units” that Toon says are designed from the ground up with deep learning in mind. “And hopefully, what we can do is remove some of those restrictions,” he says.

Chipmakers say machine learning will benefit from specialized processors with speedy connections between parallel onboard computing cores, fast access to ample memory for storing complex models and data, and mathematical operations optimized for speed over precision. Google revealed in May that its Go computer AlphaGo, which beat the Go world champion, Lee Sedol, earlier this year, was powered by its own custom chips called tensor processing units. And Intel announced in November that it expects to roll out non-GPU chips within the next three years, partially based on technology acquired from Nervana, that could train machine learning models 100 times faster than current GPUs and enable new, more complex algorithms.
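The idea of “mathematical operations optimized for speed over precision” can be illustrated with a small sketch. It uses IEEE 754 half precision (16-bit floats, via Python’s `struct` format code `"e"`) as a stand-in for the reduced-precision formats machine learning chips favor; the article does not say which number formats any particular chip, such as Google’s tensor processing units, actually uses. The helper names `to_half` and `dot` are hypothetical. The sketch shows that a 16-bit value takes a quarter of the storage of a 64-bit one while a dot product computed entirely in half precision stays close to the full-precision result.

```python
import struct

def to_half(x):
    """Round a Python float to the nearest IEEE 754 half-precision (16-bit) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def dot(xs, ys, rounding=lambda v: v):
    """Dot product that applies `rounding` to every input, product, and running sum.

    With the default identity function this is an ordinary 64-bit float dot
    product; passing to_half simulates doing all the arithmetic in 16 bits.
    """
    total = rounding(0.0)
    for x, y in zip(xs, ys):
        total = rounding(total + rounding(rounding(x) * rounding(y)))
    return total

# Storage per number: 2 bytes for half precision versus 8 for double precision.
print(struct.calcsize("<e"), struct.calcsize("<d"))  # 2 8

# 0.1 cannot be stored exactly; half precision keeps only about 3 decimal digits.
print(to_half(0.1))  # 0.0999755859375

xs = [0.1, 0.2, 0.3, 0.4]
ys = [1.5, 2.5, 3.5, 4.5]
full = dot(xs, ys)
half = dot(xs, ys, rounding=to_half)
print(full, half, abs(half - full) / abs(full))
```

The design choice this mirrors is that smaller numbers mean less memory traffic and more arithmetic per chip, and neural networks tend to tolerate the small rounding errors that result.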

“A lot of the neural network solutions we see have artifacts of the hardware designs in them,” Rao says. These artifacts can include curbs on complexity because of memory and processing speed limits.

Intel and its rivals are also preparing for a future in which machine learning models are trained and deployed on portable hardware, not in data centers. That will be essential for devices like self-driving cars, which need to react to what’s going on around them and potentially learn from new input faster than they can relay data to the cloud, says Aditya Kaul, a research director at market intelligence firm Tractica.

“Over time, you’re going to see that transition from the cloud to the endpoint,” he says. That will mean a need for small, energy-efficient computers optimized for machine learning, especially for portable devices.

“When you’re wearing a headset, you don’t want to be wearing something with a brick-sized battery on your head or around your belt,” says Jack Dashwood, director of marketing communications at the San Mateo, California-based machine learning startup Movidius. That company, which Intel announced plans to acquire in September, provides computer vision-focused processors for devices including drones from Chinese maker DJI.

Nvidia, too, continues to release GPUs with increasing support for machine learning-friendly features like fast, low-precision math, along with AI platforms geared specifically toward next-generation applications like self-driving cars. Electric carmaker Tesla Motors announced in October that all of its new vehicles will be equipped with computing systems for autonomous driving, using Nvidia hardware to run neural networks that process camera and radar input. Nvidia also recently announced plans to supply hardware to a National Cancer Institute and Department of Energy initiative to study cancer and potential treatments within the federal Cancer Moonshot project.

“[Nvidia] were kind of early to spot this trend around machine learning,” Kaul says. “They’re in a very good position to innovate going forward.”


Fast Company
