Google Wants To Use Your Device To Train Its Artificial Intelligence

by Laurie Sullivan, Staff Writer @lauriesullivan, April 11, 2017

Google has done a fine job of distinguishing between homographs in search, but not so much in Gmail, the company’s email platform. Homographs (words that are spelled the same but have different meanings, such as jaguar the animal versus Jaguar the car, aspen the tree versus Aspen the city, or a conference speaker versus an audio speaker) require completely different targeting strategies and options based on the semantics and the context in which the words are used.

This type of ad targeting in email has become a focus for Google, but this latest debacle proves to me that the Mountain View, California company has miles to go before it understands context well enough to target ads precisely.

For instance, I wrote two completely unrelated emails in Gmail, both with the word “Speaker” in the subject line: one to a building contractor about a built-in home speaker system, the other about a speaker at the MediaPost Search Insider Summit. Gmail joined them into a single conversation based on that one word. Imagine my surprise when I found the two threads, sent to two different people, fused together, even though the context of each email, and of the replies that followed, was completely different.

The fix may be as simple as finding a better training method for its algorithms. In fact, Google is experimenting with a new method for training its artificial intelligence and deep-learning algorithms that it calls Federated Learning, aimed mainly at mobile devices.

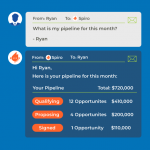

In this approach, Google decentralizes the data it needs to make decisions and train its algorithms rather than collecting it in one place. Training happens on each device, and the devices collaboratively learn a shared prediction model while the training data stays on each phone.

Google Research Scientists Brendan McMahan and Daniel Ramage explain: “Your device downloads the current model, improves it by learning from data on your phone, and then summarizes the changes as a small focused update. Only this update to the model is sent to the cloud, using encrypted communication, where it is immediately averaged with other user updates to improve the shared model. All the training data remains on your device, and no individual updates are stored in the cloud.”
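The download, improve, summarize, and average loop in that quote can be sketched as a toy version of federated averaging. This is a minimal illustration under stated assumptions, not Google’s implementation: it assumes a one-feature linear model (y ≈ w·x + b), plain stochastic gradient descent on each simulated phone, and a server average weighted by each phone’s example count. The names `local_update` and `federated_round` are hypothetical, and the encryption step is omitted. Note that only weight deltas leave each device’s data.

```python
import random
from typing import List, Tuple

Example = Tuple[float, float]  # (x, y) pair that never leaves its device
Model = List[float]            # [w, b] for the toy model y ≈ w*x + b


def local_update(model: Model, data: List[Example],
                 lr: float = 0.05, epochs: int = 5) -> Model:
    """Improve a copy of the current model on-device; return only the change."""
    w, b = model
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y   # prediction error on one local example
            w -= lr * err * x       # SGD step on 0.5 * err**2 for w
            b -= lr * err           # SGD step on 0.5 * err**2 for b
    return [w - model[0], b - model[1]]  # the "small focused update"


def federated_round(model: Model, devices: List[List[Example]]) -> Model:
    """Collect one update per device and apply their weighted average."""
    total = sum(len(data) for data in devices)
    avg = [0.0, 0.0]
    for data in devices:
        delta = local_update(model, data)  # raw data stays inside this call
        share = len(data) / total          # weight by number of local examples
        avg = [a + share * d for a, d in zip(avg, delta)]
    return [m + a for m, a in zip(model, avg)]


# Hypothetical phones, each holding private samples of y = 2x + 1.
rng = random.Random(0)
devices: List[List[Example]] = []
for n in (10, 20, 30):
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    devices.append([(x, 2 * x + 1) for x in xs])

model: Model = [0.0, 0.0]
for _ in range(50):
    model = federated_round(model, devices)
print(f"w={model[0]:.3f}, b={model[1]:.3f}")
```

Because each phone runs several local steps before uploading a single delta, the server sees one small message per device per round rather than one per training example.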

Federated Learning allows for smarter models, lower latency, and less power consumption while preserving privacy. The updated model on the phone can be used immediately, powering experiences personalized to the way each person uses the phone. According to Google, its algorithms can train deep networks using 10 to 100 times less communication than a naive federated version of stochastic gradient descent.

Google is testing Federated Learning in Gboard, the company’s keyboard for Android. When Gboard shows a suggested query, the phone stores information locally about the current context and whether the user clicked the suggestion. Ah, yes, but can it read and learn from the content in your emails?
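The Gboard logging step can be pictured as a small on-device store of labeled examples. Everything in this sketch is hypothetical (the `Interaction` and `OnDeviceExampleStore` names, the fixed capacity, the sample queries); it only illustrates the idea that each suggestion, its context, and whether the user tapped it are kept on the phone as training examples.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List, Tuple


@dataclass(frozen=True)
class Interaction:
    context: str     # text typed so far (hypothetical; stays on the phone)
    suggestion: str  # the query the keyboard suggested
    clicked: bool    # whether the user tapped the suggestion


class OnDeviceExampleStore:
    """Hypothetical local cache of suggestion interactions."""

    def __init__(self, capacity: int = 1000) -> None:
        # A bounded deque silently drops the oldest entry when full.
        self._log: Deque[Interaction] = deque(maxlen=capacity)

    def record(self, context: str, suggestion: str, clicked: bool) -> None:
        self._log.append(Interaction(context, suggestion, clicked))

    def training_examples(self) -> List[Tuple[str, str, int]]:
        # Label 1 = suggestion tapped, 0 = ignored.
        return [(i.context, i.suggestion, int(i.clicked)) for i in self._log]

    def click_rate(self) -> float:
        if not self._log:
            return 0.0
        return sum(i.clicked for i in self._log) / len(self._log)


store = OnDeviceExampleStore(capacity=3)
store.record("flights to asp", "aspen flights", True)
store.record("jaguar top sp", "jaguar top speed", False)
store.record("conference spe", "conference speakers", True)
store.record("home spe", "home speaker system", False)  # evicts the first entry
print(store.training_examples())
print(store.click_rate())
```

In a federated setup, these labeled examples would serve as the on-device training data, and only model updates computed from them would be uploaded.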

MediaPost.com: Search Marketing Daily
