This Job Platform Designed By A 26-Year-Old Informs Managers Of Their Bias

There are plenty of recruiting tools that aim to tackle the persistent “pipeline” problem, which the tech industry in particular often cites as the reason it can’t find diverse candidates who are qualified for top tech positions.

Using artificial intelligence, a new wave of these platforms is also working to eliminate unconscious bias from the hiring process. For instance, HiringSolved’s recruiting platform, RAI (pronounced “ray”), is designed to predict candidates’ gender and ethnic backgrounds to help companies reach diversity targets. Its latest tool, TalentFeed+, goes a step further by mining and consolidating companies’ internal hiring databases; machine learning reviews a company’s hiring patterns to help ensure the candidate pool is diverse.

Mya is an AI chatbot that guides candidates through the automated first phase of resume screening, so qualified applicants are less likely to be eliminated simply because they didn’t use certain keywords in their applications. Others, like Blendoor and Interviewing.io, mask or alter identifying information (such as vocal tone) so that hiring managers’ decisions aren’t colored by implicit bias.

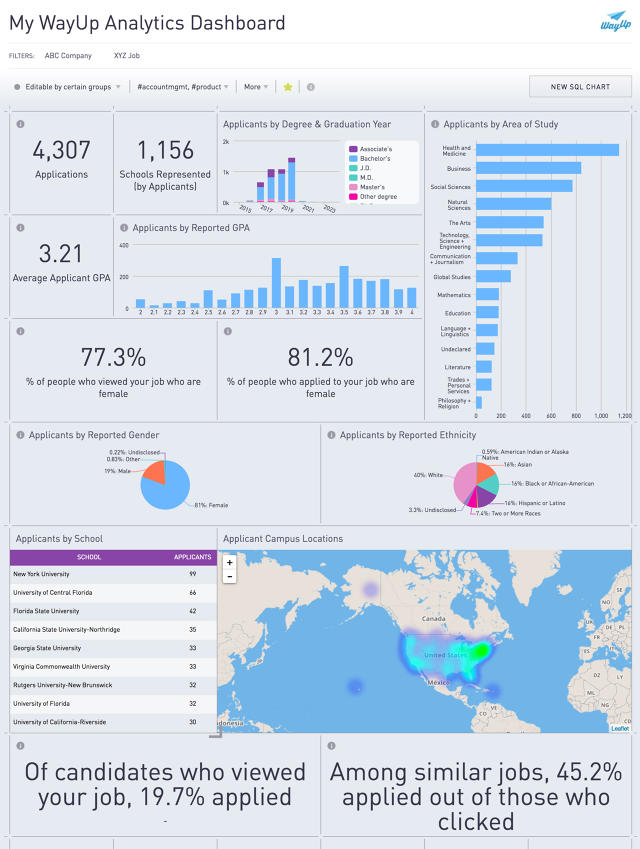

Another entrant in this burgeoning field is WayUp, a three-year-old marketplace that connects a user base of 1 million students and recent graduates with potential employers. But WayUp is taking the opposite approach: rather than hiding information, it focuses on transparency. As its 26-year-old CEO, Liz Wessel, sees it, employers can use WayUp’s dashboard analytics, which visualize trends and inconsistencies, to work toward their diversity goals. The dashboard highlights the demographics and diversity of the applicant pool at every step of the interview process.

Wessel tells Fast Company that when users sign up, telling WayUp their gender or race is optional. Most people do, says Wessel; approximately 70% of applicants complete their profiles on the platform. The difference, she explains, is that WayUp doesn’t show this information on an application. “Rather,” she says, “we show this in aggregate to an employer for the sake of being able to observe trends.” Wessel claims that no other job marketplace WayUp has seen does this to help employers audit themselves.

WayUp created the feature because companies themselves were asking for it. “We often heard claims like, ‘We just don’t get enough diverse applicants when we go on campus,’” Wessel says. “So, they’d come to WayUp, where employers knew we had an active user base of diverse students.” Wessel says that 73% of WayUp’s user base is made up of what the company counts as “underrepresented minorities,” a category that includes female, transgender, Hispanic, and black users.

Wessel says that as companies went through the hiring process on WayUp, the company noticed that a few businesses received high numbers of diverse applicants but didn’t end up with a diverse pool of hires. “That’s when we dug into the data,” she says.

They discovered that despite WayUp sending a qualified and diverse group of candidates to the employers, the interview process was filtering them out. “We learned it was an issue on their end with unconscious bias,” Wessel says.

Applying that lesson at scale, WayUp launched its analytics dashboard, which shows companies the gender and ethnic breakdown of the people who apply for their jobs (in aggregate only) so they can see how those breakdowns change throughout the hiring process, says Wessel. She says some Fortune 100 companies saw that underrepresented minorities made up 40% of their candidate pool but just 4% of their hires. “Without our data, they may never have been able to realize that the problem was in their interview process,” she maintains.
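Wessel doesn’t describe how the dashboard computes these figures. As a rough illustration of the aggregate, stage-by-stage view she describes, here is a minimal Python sketch. The stage names, the `StageCount`, `rate`, and `funnel_report` names, and every count are hypothetical, chosen only so the tracked group’s share falls from 40% of applicants to 4% of hires; it works purely on aggregate head counts, never on individual candidates.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class StageCount:
    """Aggregate head counts for one hiring stage; no individual records."""
    stage: str
    total: int  # everyone who reached this stage
    group: int  # how many of them belong to the tracked group


def rate(numerator: int, denominator: int) -> Optional[float]:
    """Safe ratio: None when the denominator is zero."""
    return numerator / denominator if denominator else None


def funnel_report(stages: List[StageCount]) -> List[dict]:
    """For each stage, report the tracked group's share of everyone at that
    stage and, from the second stage on, the pass-through rate from the
    previous stage for the group versus everyone else."""
    report = []
    prev: Optional[StageCount] = None
    for s in stages:
        row = {"stage": s.stage, "group_share": rate(s.group, s.total)}
        if prev is not None:
            row["group_pass_rate"] = rate(s.group, prev.group)
            row["others_pass_rate"] = rate(s.total - s.group,
                                           prev.total - prev.group)
        report.append(row)
        prev = s
    return report


# Hypothetical counts, chosen so the tracked share falls from 40% to 4%.
report = funnel_report([
    StageCount("applied", total=1000, group=400),
    StageCount("interviewed", total=200, group=40),
    StageCount("hired", total=50, group=2),
])
for row in report:
    print(row)
```

Comparing pass-through rates, not just the final share, is what localizes the drop: in these made-up numbers, tracked candidates advance from interview to hire at 5% versus 30% for everyone else. The same shape would fit the job-description comparison Wessel describes, with “read description” and “applied” as the first two stages.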

Another form of bias that WayUp’s dashboard data can reveal is whether women are more or less likely to apply for a job after reading the job description, says Wessel. Researchers at Carnegie Mellon University recently found that Google showed ads related to high-paying jobs more often to users its system identified as male, while other reports have found that many job descriptions and recruiting emails loaded with corporate jargon implicitly address white, male candidates, who are most likely to respond to that language.

“So, for example,” Wessel explains, “you can see that 70% of people who read your job description were female, but only 20% of applicants were female.” Wessel stresses that the data is served up in aggregate to reveal trends rather than to single out specific candidates. Even so, a gap like that gives the company an opportunity to rewrite the job description in more gender-neutral language.

So far, Wessel says, WayUp has grown to its current user base while maintaining a rate at which one in every three candidates who apply for a job through the platform gets hired. The platform is free for candidates; WayUp’s revenue comes from the more than 12,000 companies that pay a subscription fee for access to applicants. The dashboard is still in its early stages, so its true impact on diversity remains to be seen. But every glimpse into the process gives hiring managers a chance to become more aware of their unconscious behavior, and perhaps to make a concerted effort to change it.

Fast Company
