What The Tesla Death Teaches Us About Designing Driverless Cars

On May 7, Joshua Brown was behind the wheel of his beloved Tesla Model S, reportedly watching a movie as the car took care of the driving. Neither Brown nor his Tesla noticed when a truck turned left across the highway in front of them. It was a bright, clear, warm Florida day, and the car simply couldn’t make out the white truck against the sunlit sky. Neither could Brown, whether because he was distracted by the movie he was watching or because he simply couldn’t see it. His Tesla plowed into and under the truck without braking at all, shearing the top off the car and killing Brown.

In the wake of the accident, Consumer Reports called on Tesla to immediately disable its so-called Autopilot feature, describing it as an instance of too much autonomy too soon. That diagnosis seems obvious enough. There have been fragmentary, even scary-sounding reports about how hard it is to pass control of a car back and forth between the machine and its driver.

Is Tesla ahead of the curve or behind it? What are we to do about driverless cars? Is this a design problem that, as my editor asked, is too hard to solve? No. Not even close. Joshua Brown died because of Tesla’s cockiness in releasing a beta wrapped in hype that encouraged drivers to push the car beyond the limits of its design. And while Tesla seems happy to unleash unsolved problems onto its customers, there are plenty of automakers who have actually solved those problems.

Unless you’ve bought a car recently, this might be news to you: Tesla’s Autopilot feature isn’t actually all that unique. Consider a top-of-the-line Audi Q7. Like many cars, it has “lane assistance” that keeps you from veering into a neighboring lane on the highway. But the Q7 also has “adaptive cruise control,” and that boring name belies how far the technology has come in the last 10 years. The car will literally brake and steer for itself in heavy traffic. The sensing technology behind it, which relies on cameras and radar, is essentially the same as what you’d find in a Tesla.

The Mental Model Rules

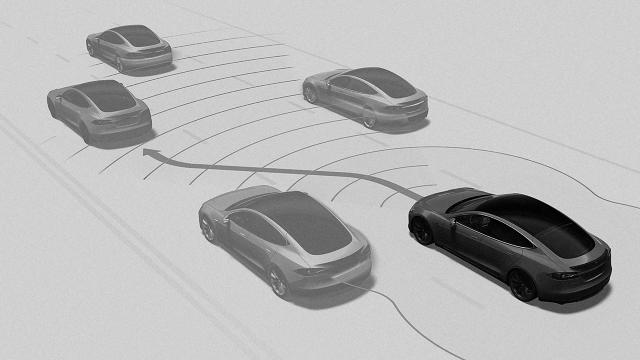

The differences between the Q7 and the Tesla that Joshua Brown drove were striking, even though the Q7 had the same basic technologies: radar and cameras that identified the lane markers and the surrounding cars, so that when you engaged cruise control the car could stay in its lane and brake to keep a comfortable distance from the highway traffic around you. But unlike the Tesla, it wouldn’t let you take your hands off the wheel for more than a couple of seconds without pinging insistently, then frantically. More than that, the car, which I could feel steering itself in the lane, wasn’t fully steering. Instead, it was preventing me from wandering into another lane if I got too lax with the wheel. The whole mental model of what the technology was, was different. That simple detail, a steering wheel that prevents an accident but doesn’t take over enough for a comfortable hands-free ride, told me: you’re still driving, so pay attention. I wouldn’t have taken my hands off the wheel even if I could, because my understanding of the technology’s limits and rules was different.

Tesla, for its part, was quick to point out that whenever Autopilot is engaged, drivers are told to keep their hands on the wheel and stay alert, and that failing to do so is ultimately their own fault. That’s a striking statement. True, the Tesla does start beeping after a few minutes if you don’t have your hands on the wheel. But those few minutes are crucial. By letting drivers take their hands off for so long, Tesla simultaneously told drivers to pay attention and invited them not to. In its rush to let drivers experience the thrill of taking their hands off the wheel, Tesla designed a system that works fine as long as it isn’t misused. The Audi system, and others like it, are designed so that they can’t be misused: take your hands off the wheel for too long and the car simply stops driving for you. Put another way, it’s easy to design something that works when it’s used perfectly. It’s much slower, and much harder, to design something that still works when people try to misuse it.
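To make the contrast concrete, here is a minimal Python sketch of a hands-off escalation policy: chime, then alarm, then disengage. The class names, thresholds, and the idea of a fixed disengage point are all hypothetical; the numbers are illustrative stand-ins for “a couple of seconds” versus “a few minutes,” not Audi’s or Tesla’s actual specifications.

```python
from dataclasses import dataclass
from enum import Enum


class Alert(Enum):
    NONE = "none"
    CHIME = "chime"          # insistent ping
    ALARM = "alarm"          # frantic warning
    DISENGAGE = "disengage"  # driver assist switches off


@dataclass(frozen=True)
class HandsOffPolicy:
    """Hypothetical escalation thresholds, in seconds of continuous hands-off time."""

    chime_after: float
    alarm_after: float
    disengage_after: float

    def __post_init__(self) -> None:
        # Each stage must come no earlier than the previous one.
        if not 0 <= self.chime_after <= self.alarm_after <= self.disengage_after:
            raise ValueError("thresholds must be non-negative and ascending")

    def alert_for(self, hands_off_seconds: float) -> Alert:
        # Escalate monotonically: the longer the hands stay off, the stronger the response.
        if hands_off_seconds >= self.disengage_after:
            return Alert.DISENGAGE
        if hands_off_seconds >= self.alarm_after:
            return Alert.ALARM
        if hands_off_seconds >= self.chime_after:
            return Alert.CHIME
        return Alert.NONE


# Illustrative numbers only: "a couple of seconds" vs. "a few minutes".
strict = HandsOffPolicy(chime_after=2, alarm_after=5, disengage_after=10)
lenient = HandsOffPolicy(chime_after=120, alarm_after=150, disengage_after=180)

print(strict.alert_for(30))   # Alert.DISENGAGE
print(lenient.alert_for(30))  # Alert.NONE
```

With the strict policy, 30 seconds of hands-off driving has already switched the assist off; with the lenient one, the driver hasn’t even heard a chime yet. That gap is the window in which a driver can stop paying attention.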

Maybe the most basic insight in the 75-year-old field of human-machine interaction (HMI) is that you can’t design for humans under ideal circumstances. You have to account for all the ways people will use and misuse the tools they rely on every day, from the autopilot in airplanes to the cruise control in cars.

The HMI principles that should have led to a better Tesla aren’t all that complex. We’ve been honing them for 75 years. But if you get them wrong, people die.

Maybe that sounds hyperbolic. It’s not. But understanding that requires a little bit of history.

The Roots Of An Old Discipline

It was the deaths of pilots during World War II that gave birth to the discipline of human-machine interaction. When the military noticed that a terrifying number of pilots died not in battle but simply trying to fly their planes, it asked the psychologist Paul Fitts to figure out why. (If you’re an interaction designer, you probably know him from Fitts’s Law, which predicts how long it takes to move to and hit a target, such as a button on a screen, based on how far away it is and how big it is.) Fitts studied 460 airplane crashes and found that an enormous number of them resulted from pilots simply being confused about which levers they were pulling. In many planes, for example, the handles for the landing gear and the wing flaps were shaped the same and located right next to each other. A pilot coming in to land was all too apt to grab the flap control instead of the landing gear, with disastrous results.
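For readers who haven’t met it, Fitts’s Law is most often written today in the so-called Shannon formulation, a later refinement of Fitts’s original log2(2D/W) term: MT = a + b·log2(D/W + 1), where D is the distance to the target, W is its width along the direction of motion, and a and b are constants fitted from measurements for a particular device and set of users. A minimal Python sketch; the constants below are made-up illustrative values, not measured ones.

```python
import math


def index_of_difficulty(distance: float, width: float) -> float:
    """Shannon formulation of Fitts's index of difficulty, in bits."""
    if distance < 0 or width <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    return math.log2(distance / width + 1)


def movement_time(distance: float, width: float, a: float, b: float) -> float:
    """Predicted time to acquire a target: MT = a + b * ID.

    a (seconds) and b (seconds per bit) are empirical constants that must be
    fitted from measurements; they are not universal.
    """
    return a + b * index_of_difficulty(distance, width)


# Illustrative (made-up) constants: a = 0.1 s, b = 0.15 s/bit.
far_small = movement_time(distance=224, width=32, a=0.1, b=0.15)  # ID = 3 bits
near_big = movement_time(distance=32, width=32, a=0.1, b=0.15)    # ID = 1 bit

print(f"far, small target: {far_small:.2f} s")  # far, small target: 0.55 s
print(f"near, big target:  {near_big:.2f} s")   # near, big target:  0.25 s
```

The logarithm is why doubling a target’s size buys roughly as much as halving its distance: both cut the index of difficulty by about the same amount.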

That finding spawned a number of innovations. In any interaction design textbook, you’ll find requirements for interfaces that include navigability and consistency: the idea that an interface should present all its functions clearly to the user, and always in the same way. Otherwise, people get confused, they make errors, and they die. In airplanes, it was Alphonse Chapanis who proposed that levers simply be shape-coded by function: triangle-shaped knobs for the flaps, circle-shaped knobs for the landing gear. That system persists in airplanes today, and it inspired the knobs and dials in your car, which are designed to be identified by feel alone. That’s one reason so many HMI experts and interaction designers abhor touch screens in car interiors.

But perhaps the core insight Fitts arrived at was that disaster tends to strike when people are confused about what mode a machine is in: they think the machine they’re operating is in one mode when it’s actually in another. Today, it’s been estimated that about 90% of all “human error” airplane crashes result from mode confusion. A pilot retakes control of a plane that has been on autopilot without realizing that some vital safety feature is no longer active. Assuming it’s still in place, he flies the plane into the side of a mountain.

This should sound familiar. There are two tightly linked problems on the horizon for autonomous cars. The first is how to teach drivers what their machines can and can’t do. The second is how to let drivers and their cars pass control back and forth unequivocally. That second problem is vastly more complicated for cars than for airplanes, because unlike pilots, drivers aren’t all experts certified by regulated training. But it’s a problem that’s well on its way to being solved at places like Audi, Volvo, and Ford. It’ll be solved by a sympathetic dialogue between us and the cars we drive, a dialogue that sounds both futuristic and like common sense once you dig into it.

According to all the major carmakers working on autonomous features, their next-generation products will hit the road around 2018. These so-called Level 3 autonomous vehicles will be a stepwise advance over the adaptive cruise control and lane guidance in today’s cars. (The Society of Automotive Engineers lays out five levels of driving automation above Level 0, which means no automation at all. We’re currently at Level 2.) These cars will finally be designed to drive without your hands on the wheel.

To do so, they’ll need to know more about their surroundings and more about you, the driver. They’ll know about their surroundings through maps of zones where autonomous driving is safe. Autonomous driving won’t simply arrive as a feature in our cars; it’ll be an invisible feature of the roads themselves. Think of these zones coming online the way cell-phone service did in the early days: first in heavily trafficked corridors, then gradually filling in as the maps get more sophisticated and detailed. This reliance on mapping is one reason carmakers are busy forging agreements to share data.

When you’ve entered a stretch of highway or city that’s been mapped for autonomous driving, the car will let you activate self-driving mode. But when you reach the edge of that map, or if road conditions change, the car will prompt you to take over again. The automakers differ in the details of their designs, but imagine that your car, which has been driving itself, starts pinging to tell you it’s time to take over. You grab the wheel. But that alone isn’t enough to ensure you’re ready to drive. So there will have to be cameras inside the car that check whether your eyes are on the road, and the steering wheel and pedals will have to sense that your hands and feet are in place. Only then will the car let you take over.

So what happens if you don’t? What if you still haven’t met all the criteria for taking back control as the car nears the end of an autonomous driving zone? The car will simply pull over and stop on the side of the road, a road it already knows. The point is that it isn’t simply up to the driver to make sure everything is okay. The system has to be designed so that the machine knows the driver’s capabilities and the driver knows the machine’s. And the machine has to be able to handle situations where its own capabilities are tapped out.
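The handover rules described above amount to a small state machine. The sketch below is one hedged reading of them in Python, not any automaker’s actual design: the mode names, the input fields, and the 1,000 m, 200 m, and 10 s thresholds are all hypothetical. It encodes the three rules from the text: self-driving can start only inside a mapped zone; control passes back only when eyes, hands, and feet all check out; and a driver who never becomes ready gets a car that pulls over rather than one that hands over control at the zone’s edge.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Mode(Enum):
    MANUAL = auto()
    AUTONOMOUS = auto()
    TAKEOVER_REQUESTED = auto()  # car is pinging the driver to take over
    PULLED_OVER = auto()         # car has stopped itself at the roadside


@dataclass(frozen=True)
class Inputs:
    """One snapshot of (hypothetical) map and driver-monitoring signals."""

    in_mapped_zone: bool = True
    meters_to_zone_end: float = 10_000.0
    conditions_ok: bool = True
    eyes_forward: bool = False      # interior camera
    hands_on_wheel: bool = False    # steering-wheel sensor
    feet_on_pedals: bool = False    # pedal sensors
    wants_autonomy: bool = False    # driver asked for self-driving
    seconds_since_request: float = 0.0


# Hypothetical thresholds, not any automaker's real values.
WARN_DISTANCE_M = 1_000.0     # start asking for a takeover
PULL_OVER_DISTANCE_M = 200.0  # stop waiting and pull over
MAX_WAIT_S = 10.0             # stop waiting even far from the zone's edge


def driver_ready(i: Inputs) -> bool:
    # Grabbing the wheel is not enough: eyes, hands, and feet must all check out.
    return i.eyes_forward and i.hands_on_wheel and i.feet_on_pedals


def step(mode: Mode, i: Inputs) -> Mode:
    """Return the next mode given the current mode and one input snapshot."""
    if mode is Mode.MANUAL:
        # Self-driving can be switched on only inside a mapped zone.
        if i.wants_autonomy and i.in_mapped_zone and i.conditions_ok:
            return Mode.AUTONOMOUS
        return Mode.MANUAL
    if mode is Mode.AUTONOMOUS:
        leaving = not i.in_mapped_zone or i.meters_to_zone_end <= WARN_DISTANCE_M
        if leaving or not i.conditions_ok:
            return Mode.TAKEOVER_REQUESTED
        return Mode.AUTONOMOUS
    if mode is Mode.TAKEOVER_REQUESTED:
        if driver_ready(i):
            return Mode.MANUAL
        out_of_map = not i.in_mapped_zone or i.meters_to_zone_end <= PULL_OVER_DISTANCE_M
        if out_of_map or i.seconds_since_request >= MAX_WAIT_S:
            return Mode.PULLED_OVER
        return Mode.TAKEOVER_REQUESTED
    # PULLED_OVER is terminal in this sketch; restarting is out of scope.
    return Mode.PULLED_OVER


mode = Mode.AUTONOMOUS
mode = step(mode, Inputs(meters_to_zone_end=800))                        # TAKEOVER_REQUESTED
mode = step(mode, Inputs(meters_to_zone_end=500, hands_on_wheel=True))   # still TAKEOVER_REQUESTED
mode = step(mode, Inputs(meters_to_zone_end=150, hands_on_wheel=True))   # PULLED_OVER
print(mode)  # Mode.PULLED_OVER
```

Keeping `step` a pure function of the current mode and one snapshot of inputs makes every transition explicit, which is the point: neither the driver nor the car should ever be unsure which mode it is in. The timeout covers a case the text leaves open, a change in road conditions far from the zone’s edge; that rule, too, is an assumption.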

Our Limitations, Ourselves

It’s a good thing that Tesla has forced other automakers to rethink the experiences they deliver, and to move faster. But this is a case in which, by rushing something to market, Tesla may have delayed the arrival of autonomous driving. In the small community of designers working on autonomous vehicles, there is palpable worry that Tesla’s missteps will actually slow innovation by spurring lawmakers to impose licensing requirements for driverless cars that won’t keep pace with the underlying technology.

In Joshua Brown’s accident, things went wrong before he even got behind the wheel. They went wrong when Tesla branded its lane assistance and adaptive cruise control features as “Autopilot.” When you design an experience, words matter. Calling something Autopilot summons an image of capabilities the car doesn’t yet have. And when you’re Tesla, riding the strength of an unbelievable brand premised on the idea that the incumbent automakers don’t know what they’re doing, it also suggests that you can do something the other carmakers can’t. You give drivers a false sense of confidence in what the technology can do.

To some extent, that’s probably what people want when they buy a Tesla: the sense that they’re on the bleeding edge. That’s a choice they’re free to make. And I don’t doubt Tesla when it says that Autopilot is “almost twice as good as a person,” as measured by accidents per mile. But that’s only part of the point. The real question is whether Tesla’s Autopilot is as good as it should be.

The hard truth of any experience design is that we have to know the limitations of the machine, and the machine has to be designed with our own foibles in mind.

[All Photos (unless otherwise noted): Tesla]

Fast Company
