With Apple’s Differential Privacy, Is Your Data Still Safe?

In the wake of its very public fight with the FBI in recent months, Apple has become associated with privacy more than any other tech company.

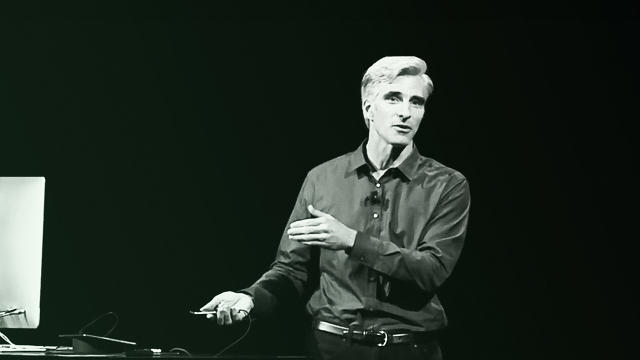

At WWDC 2016, its annual developers conference, the company announced new forays into advanced technology to learn more about its users and to automate tasks. But Apple’s software chief Craig Federighi stressed onstage that each individual’s privacy would remain a priority, via a technique called “differential privacy.”

“Apple has been doing some super-important work in this area to enable differential privacy to be deployed at scale,” he told the audience of developers. To back up this claim, Apple shared a quotation from University of Pennsylvania privacy researcher Aaron Roth, describing the company as a “clear privacy leader” if it incorporates differential privacy, a way of evaluating trends across many users while keeping each individual’s data anonymous. (Note: Roth later told Wired he couldn’t comment on anything specific that Apple is doing with differential privacy.)

In a nutshell, differential privacy adds noise or randomness to the data so that trends across many users remain visible while any individual user’s information is hard to extract. As for whether Apple is really a leader in this field, reactions were mixed among cybersecurity specialists and cryptographers.
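To make the idea of adding noise concrete, here is a minimal sketch of randomized response, a textbook technique that satisfies differential privacy. It is an illustration only: nothing here is known to reflect Apple’s implementation, and the function names, the 30 percent rate, and the population size are all hypothetical. Each simulated user answers a yes/no question truthfully half the time and with a coin flip otherwise, so no single report can be trusted, yet the overall rate can still be estimated.

```python
import random


def randomized_response(truth, rng):
    """Return the true answer half the time, otherwise a random coin flip.

    Hypothetical helper for illustration; not Apple's method.
    """
    if rng.random() < 0.5:
        return truth
    return rng.random() < 0.5


def estimate_true_rate(reports):
    """Undo the noise in aggregate.

    P(report is yes) = 0.5 * p + 0.25, so p = 2 * (observed - 0.25).
    """
    observed = sum(reports) / len(reports)
    return 2 * (observed - 0.25)


rng = random.Random(0)  # fixed seed so the simulation is repeatable
true_rate = 0.30        # assumed share of users whose real answer is "yes"
population = [rng.random() < true_rate for _ in range(100_000)]
reports = [randomized_response(answer, rng) for answer in population]
estimate = estimate_true_rate(reports)

print(f"true rate in population: {sum(population) / len(population):.3f}")
print(f"estimate from noisy reports: {estimate:.3f}")
```

Any individual “yes” in `reports` may simply be the result of a coin flip, which gives each user deniability, while the estimate over 100,000 reports lands close to the real rate. How much noise to add is exactly the kind of deployment decision the experts below say matters.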

Differential Privacy Is Still In Its Infancy

Matthew Green, a cryptographer and security technologist at Johns Hopkins, tweeted his skepticism throughout the conference. As Green argued, differential privacy has so many “tradeoffs” that “deployments decisions are everything.” And at this point, little is known about Apple’s proprietary technology.

This view was backed up by the Electronic Frontier Foundation’s technology expert William Budington, who wrote me: “What remains to be seen is how these features will be implemented. Implementing privacy-protecting algorithms are often tricky to get right—just because it works on paper doesn’t mean it will act properly when implemented in software.”

Another common point among experts was that differential privacy is still very much in its infancy. And if the technology is still so unproven, how can Apple already be a leader? Applied cryptography PhD student Nadim Kobeissi was among those who raised the question.

That said…

Other developers took to social media to defend and praise Apple for at least taking steps to protect users’ privacy.

And the Electronic Frontier Foundation’s Budington did note that Apple is taking a step in the right direction. “If the claims are true, it will allow Apple to do aggregate data-mining while at the same time respecting the privacy of its customers,” he added.

Where experts agree is that they’ll be keeping a close eye on Apple’s privacy strategy as it takes on competitors like Facebook and Google in emerging areas like artificial intelligence. Can Apple learn about its users’ data, but not your data?


Fast Company
