3 things NASA learned after it put a supercomputer in space

We’re a long way from the HAL 9000 (thankfully), but NASA is considering a bigger role for high-end computers in deep-space missions, such as a journey to Mars. To prepare, the International Space Station has been hosting a system built by Hewlett Packard Enterprise (HPE) for the past 11 months. HPE’s initial finding: the system works without major glitches.

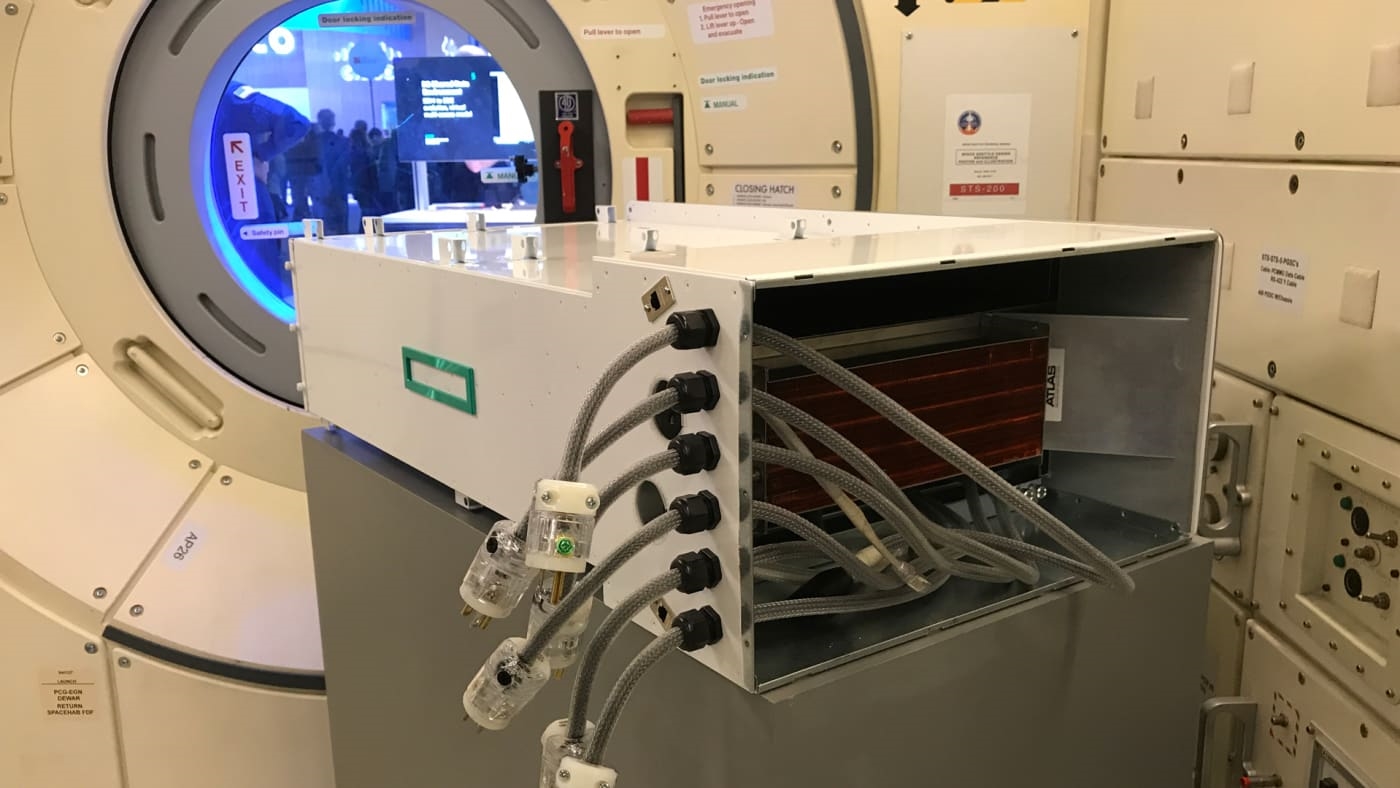

The system, an Apollo 4000-series enterprise server, is considered a “supercomputer” because it can perform 1 trillion calculations per second (one teraflop). That’s not so rare nowadays, but it’s far more computing power than NASA has had in space before. That capacity lets the system run complex analyses on board, on large data sets that would be impractical to transmit back to Earth.

The key aspect of this test was to see whether a standard, off-the-shelf computer could survive the rigors of life in space, especially radiation exposure, using only software modifications.

The computer will get a full evaluation when it returns to Earth later this year, but HPE says it’s already learned three valuable lessons:
