6 ways Apple updated iOS to be ready for a mixed reality metaverse

For years, we’ve known that Apple was working on a mixed reality headset. And at this week’s Worldwide Developers Conference (WWDC), Apple spent yet another event not talking about it at all.

Or didn’t they?

In fact, as I watched the WWDC keynote, which outlined the software updates coming this fall in iOS 16, watchOS 9, and iPadOS 16, I saw a slew of changes that seemed to be the building blocks for turning Apple's screen-based UX into something that could work in our 3D world.

The following ideas are purely speculative on my part, but it appears that Apple is updating iOS’s user interface in ways that will directly translate to the way we use an Apple headset in the future. (I was largely inspired by a recent concept developed by Argodesign, a design firm that’s consulted for the mixed reality startup Magic Leap for two years.) And if I were the betting type, I’d put my money on all of these features being built into an Apple headset to come next year.

Apple's headset will lay graphics over the real world. However, I imagine that people in your field of view will be able to walk in front of these graphics so they aren't so distracting. Apps will be consolidated into bite-size widgets, and depending on the time of day and where you are, Apple will arrange your mixed reality world differently for work or leisure. To top all this off, Apple will integrate Siri more deeply than she has ever been integrated on the iPhone, allowing her to type up full emails on your behalf and giving you the option to easily edit her work.

These are all just bets, of course. But go ahead and get your wallet out while you scrutinize my circumstantial evidence.

People over graphics . . . literally

Craig Federighi, Apple's senior vice president of software engineering, kicked things off by showing how the new iOS 16 lock screen will work. He took a photo of his daughters and dragged them right over the time, so their heads were peeking over the numbers just a bit.

This trick hints at what I believe will become the predominant UI rule for an Apple headset: Graphics appearing in the real world will generally not cover human beings, cementing the idea that the people in your life are more important than apps and the internet.

Why do I think this? Federighi demonstrated how the lock screen will cut out the shape of a person, allowing you to slide that person right over the clock. (I find myself wondering: Would this trick work on a photo of a flower, too?) It's a subtle effect that reality TV and other programming have used for years to make graphics feel more like part of the photography. Apple already knows how to recognize human figures; in portrait mode, for instance, its camera uses AI to outline someone's body and keep them in focus while blurring the background. Now Apple is pushing that technology further.

Apple also demonstrated how iOS 16 will use AI to let you cut a person or pet right out of a photo, dragging that clean cutout into Messages or wherever else you’d like to share it. In mixed reality, it’s easy to imagine how these could be used in real time, for silly messages in your eyeballs, or even teleconferencing with someone who can stand in your room without a big frame around them.

Widgets are the new apps

If we do end up relying on mixed reality, we need to reimagine apps. With most things you do on a phone, you start by opening an app like Spotify, Gmail, or Snapchat, and then you can listen to music, send an email, or post an update. This approach doesn’t really make sense for mixed reality, though. Why have a holographic computer integrated into your living room just to open a single app and use it as you do your phone? You’ll likely want lots of apps working together at any given time.

It seems more likely that widgets, or bite-size versions of apps, could fill your view in mixed reality. And Apple announced major upgrades to its widgets this week, as they're coming to the iOS 16 lock screen for the first time. You'll be able to check at a glance whether you've filled your Activity Rings (Apple's exercise graph), see the next appointment on your calendar, check the temperature, and see your battery level.

These widgets could easily come right to any mixed reality headset, allowing users to put their most important information in their eyes, always ready to be seen at a glance.

Notifications that don’t distract our eyes

The way Apple handles notifications is objectively terrible: Every app can ping you as many times a day as it wants, and if you don't deactivate them, these notifications pile up into one giant list that grows as endlessly as a Twitter feed. No one has time to read them all, and if you're anything like me, you just regularly purge the whole thing without checking.

While iOS 16 doesn’t solve Apple’s notification problem by any means, it will consolidate notifications into one big stack rather than a list, which makes them a less visually overwhelming pile. (It’s worth noting that iOS does use stacking today, organizing by app within its giant list. The new update seems to consolidate all these stacks into one simpler pile.)

For mixed reality to be sustainable, notifications simply can’t continue to steal our attention through their sheer pushiness, because these notifications will live in our eyes. Sweeping them all into one stack is a sensible way for Apple to mitigate the problem while, hopefully, figuring out a more sustainable solution long term.

Focus filters to swap between work, play, and more

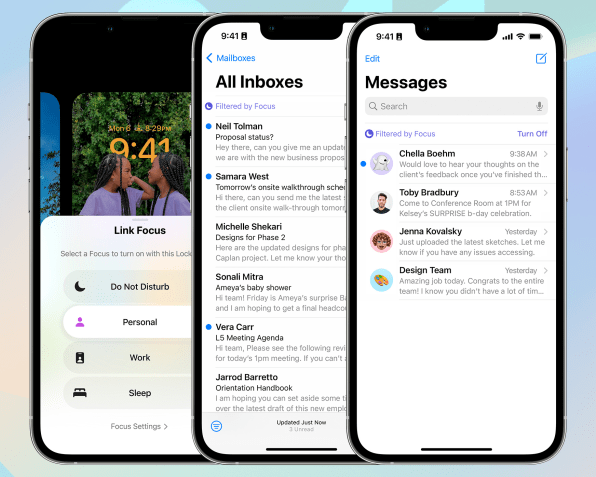

Apple's Focus feature is supposed to add boundaries to your phone. It allows you to swap between work, personal, driving, and sleep modes, each of which changes which apps appear on your home screen and which apps can bug you with updates at any given moment.

With iOS 16, Apple is adding more to Focus with a tool called Focus Filters. Now, you’ll be able to set up rules like who can reach you via Messages or email depending on the context. Maybe that’s colleagues during the week, but only friends and family on the weekend.

These Focus features make perfect sense for mixed reality because, even if you’re stuck in one room all the time, that room could look and work differently depending on your Focus. What do I mean? Say you’re using mixed reality in your home office. During work, you might want your space to be covered in serious computational apps. If you’re a stock trader, you might want to follow trades, with many widgets working in tandem. But on the weekend, maybe you just want to play video games in that office, and put all those stocks away. Focus Filters take us half a step there. They leverage the digital interface to redefine how a physical space works.

3D sound from real life images

I've never quite understood Apple's obsession with its spatial audio, which, if you're using AirPods, allows you to turn your head and hear music as if it's moving around in a concert hall rather than perfectly placed into your ears. I get that this is a neat gimmick. But listening to recordings in headphones often sounds better than concert audio! Why recreate the worst parts of real life?

The answer is likely Apple's mixed reality ambitions. iOS 16 will get a big upgrade to spatial audio. Now, the phone will let you use its TrueDepth camera (the 3D camera on the front of the iPhone) to scan the shape of your head and ears and build a spatial audio profile tuned to you. How will this even work with iPhone apps? I can't tell yet, honestly. But take that same technology and put it into a mixed reality headset? Suddenly, Apple can give every widget you see sound, and it'll sound like it's coming from exactly the right spot. This one feature could do a ton to increase the immersion of an Apple headset.

Siri is no longer a voice interface; she’s a third hand

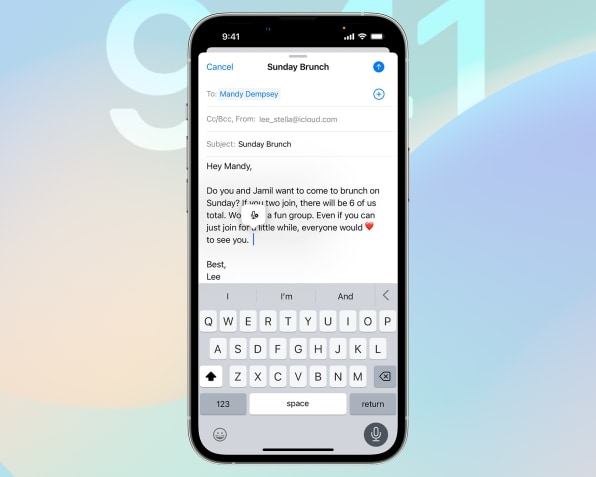

Finally, the Siri voice assistant has been one of Apple’s sleepiest projects since it acquired the technology in 2010. Over that time, the technology went from cutting edge to status quo. And aside from texting while driving, Siri isn’t all that useful. That’s a problem for Apple, as mixed reality will almost certainly require us to use our voice to jump between functions, lest we flail at the sky all day.

In iOS 16, Siri is getting a new trick. You’ll be able to dictate long messages complete with proper punctuation. But as you speak, if you see Siri get something wrong, you’ll be able to tap on the word, say it again, and have Siri retry spelling the word. This sounds like a small detail, but watching the brief demo, it’s clear that Apple is pushing voice forward as a tool you can use while typing or tapping. This new Siri operates more like a third hand than a typing alternative. That will be key for complicated mixed reality tasks.

If that’s not enough to convince you, know that Apple is also updating its Siri API, allowing developers to automatically add more Siri functions into their apps without adding extra development time. Perhaps Apple never forgot Siri. Perhaps she’s been sleeping, waiting for mixed reality to take over.

Fast Company
