Apple’s plan to scan iPhones for child abuse misses the point of privacy

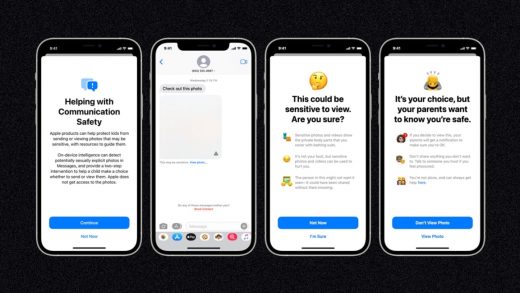

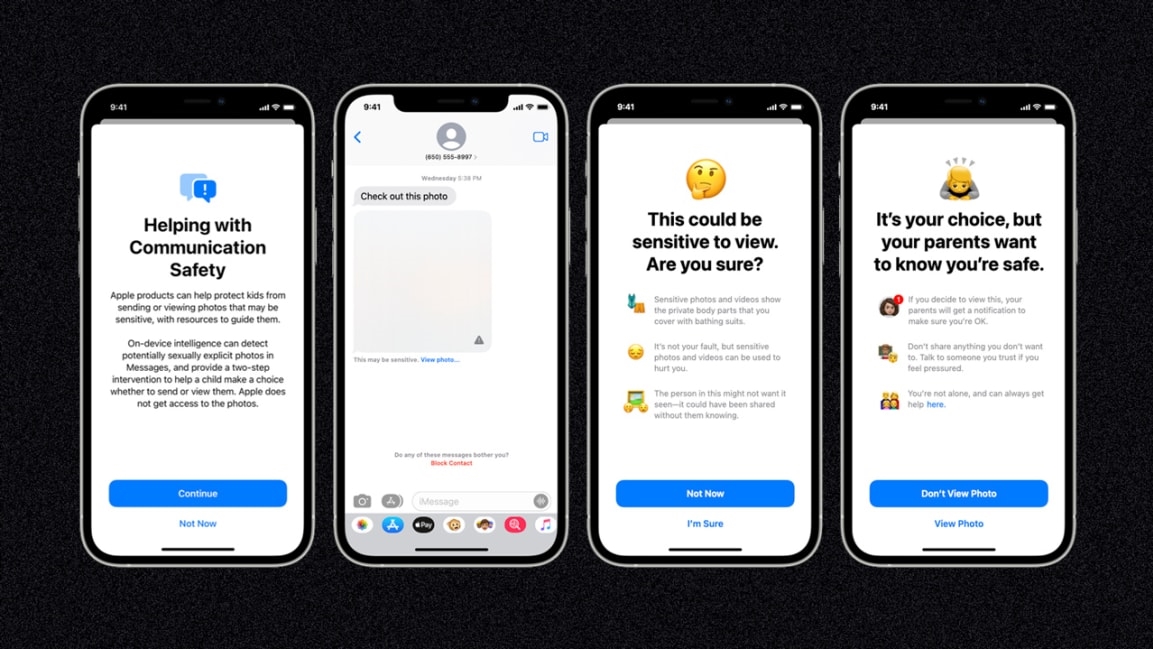

On Thursday, Apple surprised the tech world by announcing that it will actively scan some users’ iPhones for known images of child sexual abuse material. The company also plans to discourage Siri searches for this material and warn children against sending or receiving sexually explicit messages, all starting with the launch of iOS 15 this fall.

The news drew a quick backlash from security experts and civil liberties groups, who believe the company is standing on a slippery slope by adding these content-scanning systems to its devices. The news also seems to have raised some objections inside Apple, as the company has stood behind its system in a memo to employees.

In defending its new system, Apple has pointed to all the ways in which it protects privacy and avoids collecting users’ data. Its scanning systems run on the device instead of in the cloud, for instance, and it’s not looking at the content of the images themselves to detect abusive material. Instead, Apple will try to match those images’ digital signatures against a database of known child sexual abuse material, so there’s no chance of innocent bathtub photos getting swept up in the system.

But all of this technical justification misses the larger point: No amount of privacy protection will matter if people feel like they’re being watched, and the very existence of Apple’s new scanning system could make this perception difficult to avoid.

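To back up for a moment, Apple’s plan consists of three parts: scanning some users’ iPhones for known images of child sexual abuse material, discouraging Siri searches for that material, and warning children against sending or receiving sexually explicit messages.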

Apple isn’t alone in looking out for child sexual abuse material, or CSAM. As TechCrunch’s Zack Whittaker points out, Google, Microsoft, and Dropbox also scan for potentially illegal material in their respective cloud services. Apple argues that its own system is more private by design.

But Apple’s approach feels different precisely because of the company’s focus on privacy. For years, Apple has made a point of minimizing what it sends to the cloud and doing as much processing as it can on people’s devices, where it can protect data rather than collecting it on remote servers. Apple’s scanning system shows that even if it’s not breaking encryption or pulling data off your device, the company can still see what you’re doing and act on it. That in itself is unnerving, even if the cause is noble.

Don’t just take it from me, however. This is what Apple CEO Tim Cook told my colleague Michael Grothaus in January as part of a wide-ranging interview on privacy:

“I try to get somebody to think about what happens in a world where you know that you’re being surveilled all the time,” Cook said. “What changes do you then make in your own behavior? What do you do less of? What do you not do anymore? What are you not as curious about anymore if you know that each time you’re on the web, looking at different things, exploring different things, you’re going to wind up constricting yourself more and more and more and more? That kind of world is not a world that any of us should aspire to.”

“And so I think most people, when they think of it like that . . . start thinking quickly about, ‘Well, what am I searching for?’” Cook continued. “I look for this and that. I don’t really want people to know I’m looking at this and that, because I’m just curious about what it is or whatever. So it’s this change of behavior that happens that is one of the things that I deeply worry about, and I think that everyone should worry about it.”

It’s hard to square Cook’s comments with Apple’s decision to start watching for certain kinds of behavior on iOS, even if it’s heinous behavior that most people will never stumble into by accident. The mere existence of this monitoring changes the relationship between Apple and its users, no matter how many promises the company makes or technological safeguards it invents.
