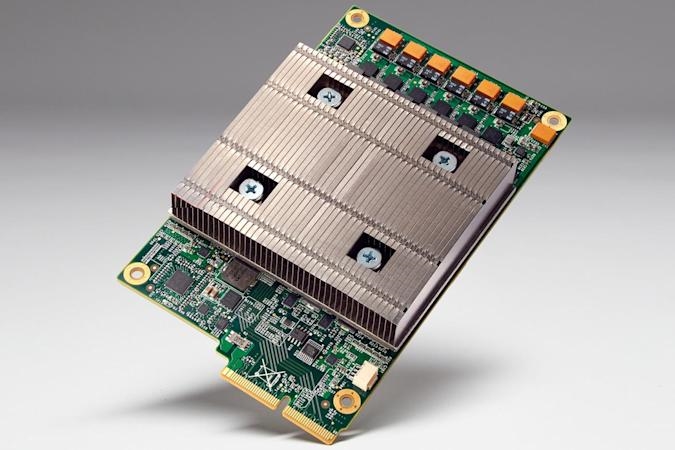

Google built a processor just for AI

Tensor Processing Units are designed to speed up machine learning.

Google is no stranger to building hardware for its data centers, but it's now going so far as to design its own processors. The internet giant has revealed the Tensor Processing Unit (TPU), a custom chip built expressly for machine learning. Because these AI tasks don't need high numerical precision, the TPU trades precision for raw operations per second, and Google says it delivers an "order of magnitude" better performance per watt on machine learning than conventional processors. It's space-efficient too, fitting into the hard drive bays of data center racks.

The fun part? You've already seen what TPUs can do. Google has been quietly using them for over a year, and they've handled everything from improving map quality to powering AlphaGo's victory over a human Go champion. Google says the chip let the AI both think faster and look further ahead. You won't get to buy the chip yourself, alas, but you might just notice its impact as AI becomes an ever more important part of Google's services.

(36)