Siri’s next frontier is human intelligence, not AI

A few days before Apple’s keynote at Monday’s WWDC, I declared that no aspect of the company’s announcements would matter more than the news relating to Siri. Though Apple did end up devoting a fair amount of the two-hour presentation to its voice assistant, the new developments were less of a showcase for AI than I expected. Nor did they overshadow the morning’s other reveals relating to iOS, macOS, watchOS, and tvOS. Still, Siri will take a meaningfully new direction when iOS 12 ships this fall.

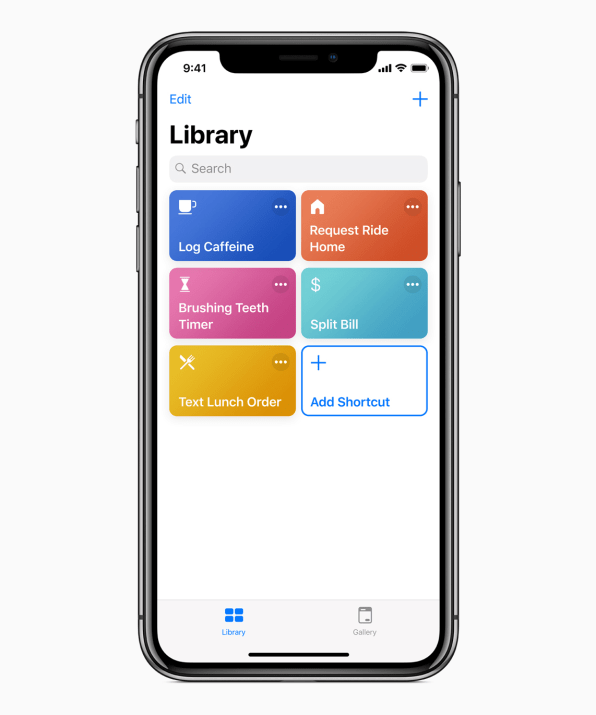

The key is a new feature called shortcuts, akin to the “routines” in Google’s Assistant and Amazon’s Alexa. Shortcuts will let you set up Siri to perform an action or series of actions when it hears you speak a particular command. (You’ll be able to choose from a library of shortcuts or roll your own, and you can trigger a shortcut by tapping as well as speaking.) These actions will be able to dig into the functionality of a third-party app: Apple’s examples included a shortcut that lets you use a Tile locator gizmo to find your keys, and another that pulls up travel information from within the Kayak app.

Shortcuts will tie into Siri’s suggestions, an existing feature that is getting beefed up in iOS 12. It’ll present you with shortcuts you might need based on contextual clues. In one of Apple’s examples, a shortcut switches your phone to Do Not Disturb when you’re at a movie. In another, it sends a message to a meeting organizer if you’re running late (presumably based on your calendar items and GPS location).

Shortcuts will be available across the iPhone, iPad, Apple Watch, and HomePod. (They look like they could be especially useful on an Apple Watch, since they can achieve complex tasks without requiring you to poke around at your watch’s tiny interface.) Their importance to the iOS platform will go beyond Siri itself; power users have begged Apple for more automation features within iOS for years, and that’s exactly what shortcuts offer.

People-powered features

AI is not altogether absent from Siri’s new features. It will come into play, for instance, when the service decides which shortcuts to suggest to you. Mostly, though, what’s new appears to be about leveraging human intelligence, not simulating it. By creating shortcuts, you’ll be able to customize Siri to your own preferences rather than settling for a one-size-fits-all assistant. And developers will be able to call on Siri with a degree of flexibility that seems to go well beyond past integrations, which have been limited to a few scenarios specified by Apple, such as ride hailing.

Siri shortcuts are a technical and spiritual heir to Workflow, a powerful third-party automation tool that Apple acquired last year. They’re also reminiscent of IFTTT, which not only lets you create automations but also allows you to share them with other users. It’s easy to envision a future version of shortcuts enabling similar sharing, thereby taking a hive-mind approach to exponentially increasing the range of tasks that Siri can accomplish. That could help Apple’s digital assistant shed its image as an underdog compared to Alexa and the Google Assistant, even as Apple continues to play catch-up in AI.

What’s next?

The news Apple announced at WWDC only tells us so much about where Siri is going. As a largely cloud-based service, Siri can get new functionality on a speedier schedule than the traditional once-a-year timetable of an operating system like iOS or macOS. Beyond that, Apple sometimes slips into an every-other-year cadence for certain aspects of its updates; a quiet 2018 for Siri AI could be a sign that the company is already working on more fundamental changes for 2019. That could be especially true now that the company has hired away a titan of Google AI to work on its products.

One other thing: I’d like to cheerfully eat some of the words I wrote in that pre-WWDC story about Apple, Siri, and AI. “When the company does make major strides in this field,” I helpfully explained, “they’ll be most likely to show up as new capabilities within Siri.”

Wrong! The most intriguing AI that Apple announced at WWDC shows up in iOS 12’s Photos app, where the search features and a new tab called For You will use a variety of clues to pinpoint the pictures you’re most likely to care about among the thousands that matter less. (My phone currently says I have 52,144 photos stored away—how about yours?)

iOS 12’s AI will look for photos that show people—and show them smiling, with their eyes open. It will check to make sure photos are in focus, and will logically assume that those you’ve edited or shared might be keepers. The app will also use face recognition to help you share photos you’ve taken with the people they depict, and to let those folks share back the snapshots they took at the same event. And it will perform these feats on your device rather than on Apple servers, hewing to the company’s stance that it wants to avoid nosing around in your data in the cloud.

None of this involves any breakthroughs. Instead, it builds on AI already in use in Photos and helps the app keep pace with Google Photos, which has been infused with AI from the start. Even so, it’s a reminder that the AI wars have many fronts—and that it’s a mistake to assume those battles are synonymous with the one going on among voice assistants in particular.
