When Tesla revealed that Autopilot was engaged during the fatal Model X crash in Mountain View, it only said that the vehicle’s “logs show[ed] that no action was taken” even though the driver had time to react. Now, the automaker has issued another statement much stronger than that, saying that the only way the accident could have happened was if the driver (identified as Apple engineer Walter Huang) wasn’t paying attention.

“We are very sorry for the family’s loss,” the statement sent to ABC 7 News reporter Dan Noyes started. “According to the family, Mr. Huang was well aware that Autopilot was not perfect and, specifically, he told them it was not reliable in that exact location, yet he nonetheless engaged Autopilot at that location. The crash happened on a clear day with several hundred feet of visibility ahead, which means that the only way for this accident to have occurred is if Mr. Huang was not paying attention to the road, despite the car providing multiple warnings to do so.” (Emphasis ours.)

The family’s lawyer believes that the company is doubling down on blaming Huang in order to distract from the family’s concerns about his vehicle’s Autopilot. Mike Fong, one of their attorneys, told ABC 7 that the sensors in Huang’s car misread the road’s lane lines and that its braking system failed to detect a stationary object in its path. Those are presumably the reasons why, on several occasions, his car veered towards the barrier where he crashed. While it sounds like the family is gearing up to sue, Fong said he doesn’t expect to file a lawsuit until after the National Transportation Safety Board has finished its investigation.

As for the rest of Tesla’s statement, it stresses that Autopilot requires drivers to have their hands on the wheel:

“The fundamental premise of both moral and legal liability is a broken promise, and there was none here. Tesla is extremely clear that Autopilot requires the driver to be alert and have hands on the wheel. This reminder is made every single time Autopilot is engaged. If the system detects that hands are not on, it provides visual and auditory alerts. This happened several times on Mr. Huang’s drive that day.

We empathize with Mr. Huang’s family, who are understandably facing loss and grief, but the false impression that Autopilot is unsafe will cause harm to others on the road. NHTSA found that even the early version of Tesla Autopilot resulted in 40% fewer crashes and it has improved substantially since then. The reason that other families are not on TV is because their loved ones are still alive.”

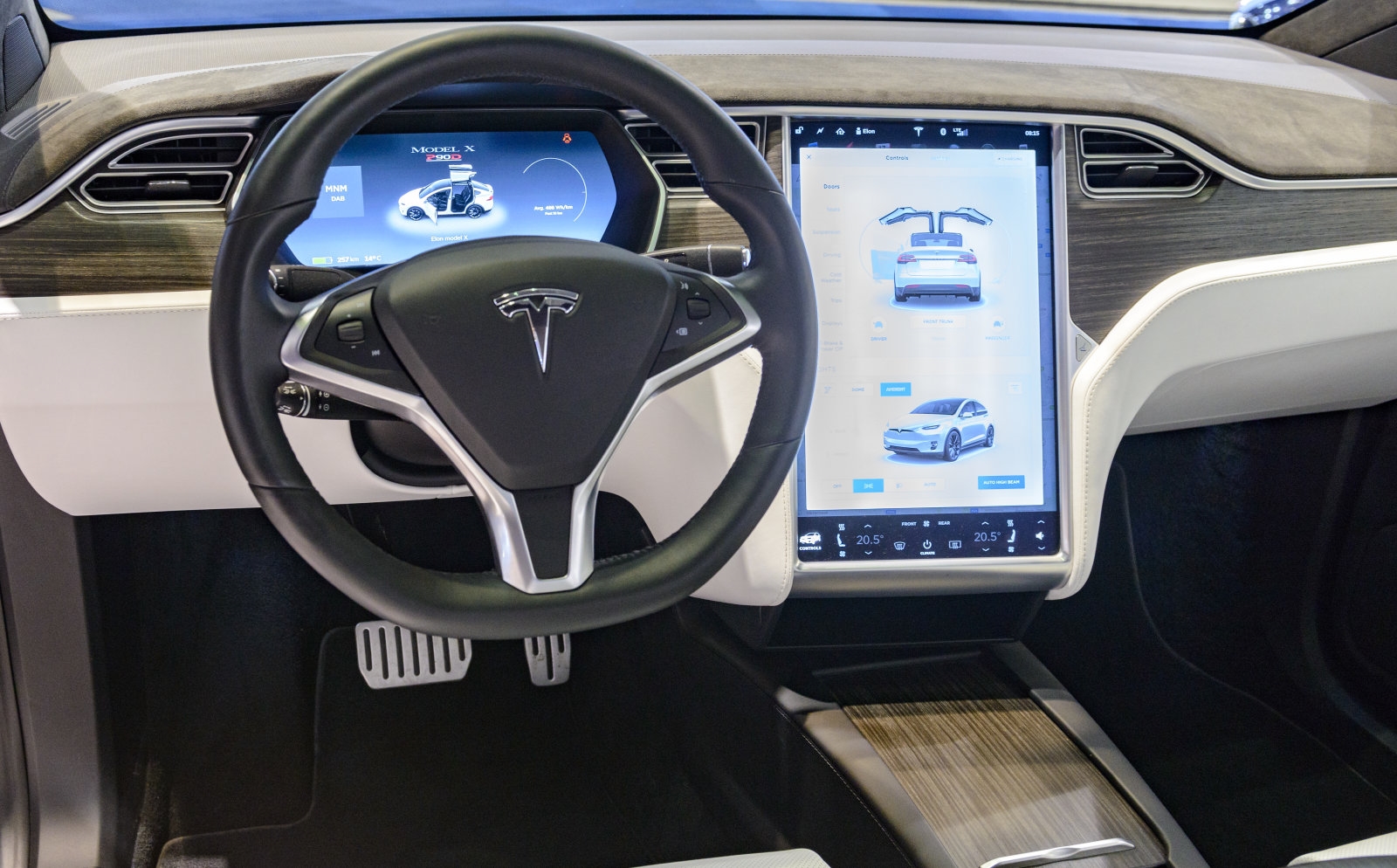

The Autopilot section on Tesla’s website does state that “every driver is responsible for remaining alert and active… and must be prepared to take action at any time.” However, it also features a video showing a person in the driver’s seat with their hands on their lap instead of the wheel, as Twitter product manager Patrick Traughber points out:

“Tesla is extremely clear that Autopilot requires the driver to be alert and have hands on the wheel.” Tesla’s website features a person sitting in the driver’s seat without their hands on the wheel: pic.twitter.com/38dsPzbPH3

— Patrick Traughber (@ptraughber) April 12, 2018

It’s far from the first time the automaker has defended its Autopilot technology after a fatal crash. Tesla pointed to its braking system rather than its self-driving tech after the 2016 incident in which a Model S collided with a truck. The NTSB, however, blamed both the drivers in the incident and Autopilot itself. It cited previous findings that Autopilot’s ability to monitor drivers’ actions was extremely limited. The agency asked automakers to do more to ensure drivers are paying attention, and according to Bloomberg, Tesla told the NTSB it had already incorporated features to make that happen.
