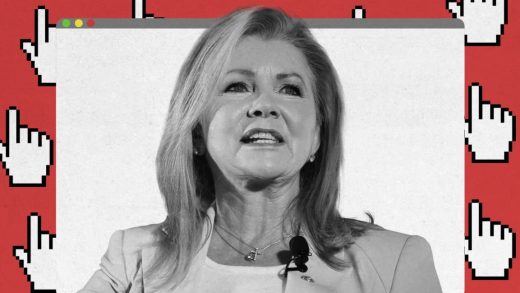

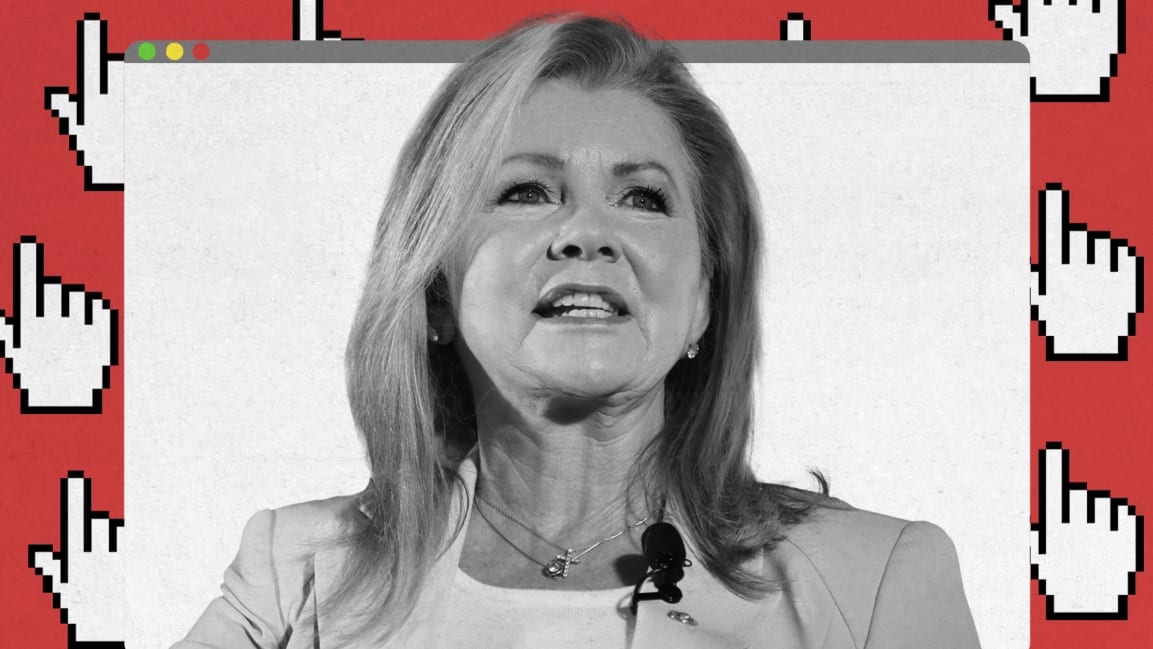

The GOP’s Marsha Blackburn explains her new bill to rein in social media companies

Republican Senators Marsha Blackburn of Tennessee, Roger Wicker of Mississippi, and Lindsey Graham of South Carolina introduced a new bill Tuesday that would change the terms of Section 230 of the Communications Decency Act, which shields tech companies from legal responsibility for user content they host on, or expunge from, their platforms. The bill, called the Online Freedom and Viewpoint Diversity Act, would narrow the kinds of user content tech companies can remove from their platforms, and restrict their ability to make “editorial” choices about what content to host and where it appears.

The new bill comes after the White House released an executive order in May calling for the reform of Section 230 protections in response to Twitter adding a fact-check label to a presidential tweet for the first time. Section 230 is considered essential by tech companies that host and profit from user content. Exposure to lawsuits over that content might radically reshape their business models and limit the diversity of the content they host.

It’s worthwhile to talk about ways of regulating companies like Facebook and Twitter, which have replaced news media as our primary source of information. Despite their central role in daily life, these companies have largely escaped regulation. And Section 230, which was passed into law back in 1996, has shielded big tech companies from responsibility for content that users post on their platforms. But bills reforming Section 230 offered by lawmakers on the right have been motivated by a largely unproven notion that tech platforms systematically censor conservative viewpoints. It’s unlikely that a 230 reform bill will gain bipartisan support without addressing the full universe of harmful content social media platforms have so far failed to clean up.

I spoke with Blackburn Wednesday night about the new bill, its motivations, and its future. The senator stresses that the bill’s purpose is to compel tech companies to be more transparent about their content-moderation decisions. But the bill’s language suggests that tech platforms that alter user content (including conservative content) are no longer neutral and therefore no longer deserving of legal immunity from civil suits.

The following interview has been edited for brevity and clarity.

Fast Company: Was your new bill motivated by the president’s executive order in May concerning Section 230?

Marsha Blackburn: It is really not in response to that. Actually, when I was in the House and chairing the Communications & Technology subcommittee at Energy and Commerce, we started looking at Section 230 and the issues around censorship and prioritization. It’s been a three-year effort for me; we worked on it in 2017 and 2018 in the House. We had good bipartisan conversations [regarding] what should be done around Big Tech. Coming over to the Senate, Chairman Graham asked me to lead this tech task force, a working group in the Judiciary Committee looking at some of the issues around Big Tech.

Republicans feel that Social Media Platforms totally silence conservatives voices. We will strongly regulate, or close them down, before we can ever allow this to happen. We saw what they attempted to do, and failed, in 2016. We can’t let a more sophisticated version of that….

— Donald J. Trump (@realDonaldTrump) May 27, 2020

I think it is fair to say it is in response to a growing bipartisan concern, and I hear it from Tennessee all the time. They feel that different tech companies are hiding behind 230, or that they are subjective in how they operate—not a lot of transparency. If they take something down, if they get a takedown notice, in the entertainment industry there in Nashville, this is something that happened. It means there needs to be some more transparency around it so that we can address some of this.

[The Senator is referring to the Nashville music industry using Digital Millennium Copyright Act (DMCA) takedown notices to remove copyright-protected music from tech platforms. Copyright-infringing content is outside the scope of Section 230 and Blackburn’s new bill. Blackburn was using the DMCA example to demonstrate that her constituents are concerned about how tech companies handle their content.]

FC: I notice that the bill would modify Section 230’s definition of objectionable content. (Section 230 currently lets tech platforms remove “obscene, lewd, lascivious, filthy, excessively violent, harassing, or otherwise objectionable” content. The new bill would replace the words “otherwise objectionable” with “promoting self-harm, promoting terrorism, or unlawful.”)

MB: The reason for doing this is because tech companies are notorious for having these vague and complex terms of service and community standards. We think that having that additional layer of flexibility [i.e., “otherwise objectionable”] gives them an easy out for their [content moderation] decisions. What we want to do is make them come back and explain these decisions, require them to give you something that is definitive of the process.

FC: Who acts as the referee here to decide whether they have taken something down for good reason?

MB: This is just bringing some clarity to existing [Section 230] law. The whole bill is a total of three pages.

FC: The White House’s executive order was signed right after Twitter first put a fact-check label on a Trump tweet falsely claiming that mail-in voting would lead to a rigged election. Under the language in your bill, would a platform that does that then be considered a “publisher” that “editorializes” and therefore be stripped of its Section 230 protections?

MB: No, it’s not going to strip them of protections. What the bill is going to do is address some of the issues that we have seen surrounding this subjective nature of platforms’ decision-making process. So we are bringing clarification to “information content provider” and making certain that we include this language that [defines that as an entity that] “creates, develops, or editorializes.” It does leave some flexibility that is going to be necessary for these platforms. They may want to make stylistic changes or things of that nature.

But we have to keep in mind that we are focused on increasing accountability, and you are going to have accountability for bias. We think that transparency is important for an industry that really has hidden behind this shield, and actually [has] been unregulated in this space. So going in here and putting these provisions in place I think is a good solid step.

It’s something that I think we can secure bipartisan support on. We know that this is going to take a while to get it finished. We know that some of these tech companies and their support groups are not happy with this. I don’t expect them to be happy with this. They have been very pleased to have this shield and kind of go about their business in a very subjective and opaque way.

FC: If you had to identify the core problem addressed by this bill, is it that issue of bias against conservative viewpoints?

MB: It is a lack of transparency and the way they have misused 230. It’s not just conservative issues. Like I said, we hear from individuals in the entertainment industry.

[Again, copyright holders don’t need Section 230 to remove instances of their content from platforms. They can use DMCA takedown notices.]

Now it does seem that conservatives are more adversely impacted by censorship. Absolutely, it does. When [Mark] Zuckerberg was in front of us when I was in the House and one of the questions that I asked him was, “Do you subjectively manipulate your algorithms when it comes to what you are going to give preference to?” he really kind of fumbled that question. He wasn’t really prepared for how to answer that, and he finally rolled around to saying that their content moderators live on the West Coast and that they bring their bias to work with them.

I’ve got protections in the physical space. I want more protections in the virtual space, and I want to be controlling my content. I want to know what people are doing with my content. I want to know why they’re blocking certain content. If I go to Google and then I go to Bing, why am I getting different things in different orders? I really think people are just more attuned to this.

FC: But when we talk about this issue of “editorializing” by these platforms, are Twitter or Facebook in fact doing that when they apply these fact-check labels or “for more information” labels to tweets or posts?

MB: We’ll continue to work this through, and it could be that what you want to do is see some more specifics from a content provider and information content provider. More sourcing. This [bill] is going to address some of these issues that have been subjective in nature of these platforms’ decision-making process, but we want to give them a little bit of flexibility when it comes to stylistic changes and we need to get this pointed in the right direction.

Big Tech needs to understand that while they have tried to make Section 230 something that was an opaque shield, what we’re saying is, “No, you’ve got to be transparent and you’ve got to give individuals and users of your service a way to know what your reasons are for making decisions.”

Fast Company
