Twitter’s Periscope Has A New Way To Deal With Trolls: Trial By Jury

Like every social network, Periscope struggles with how to deal with abuse and harassment. But on the Twitter-owned live-streaming platform, the issue is especially salient, since the comments float in real time across the screen—often directly over the broadcaster’s face—and a determined troll can easily overtake a live stream with a barrage of nasty remarks.

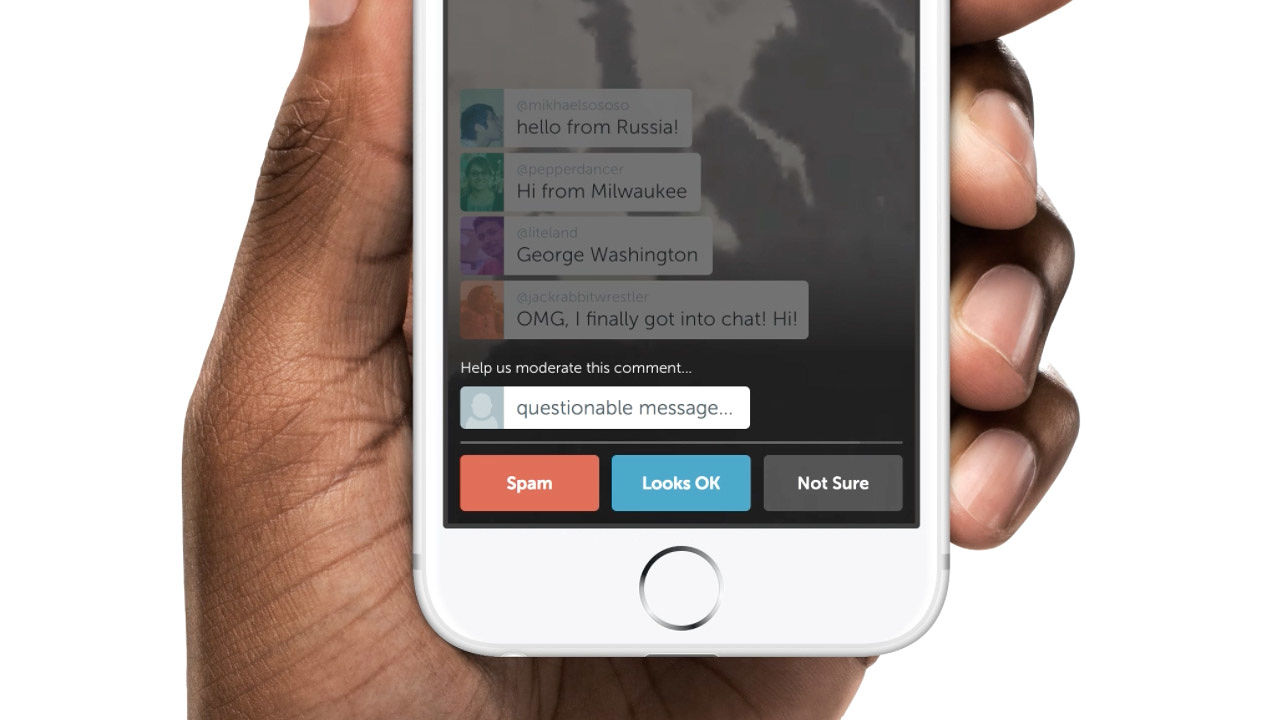

On Tuesday, Periscope is rolling out an abuse-reporting system that puts comment moderation in the hands of so-called flash juries. During a broadcast, if one user reports a comment as abusive or spam, other randomly selected viewers of the stream are shown the comment and asked whether they think it is inappropriate. If the majority agrees that it is abusive, the offending user receives a 60-second ban from posting further comments. If the same user is reported and convicted a second time in the same broadcast, he or she loses commenting privileges for the rest of the stream.
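The escalation rules above amount to a small per-broadcast state machine. Below is a minimal sketch in Python, assuming a fixed jury of five viewers drawn from everyone except the reporter and the accused, and a strict-majority vote in which ties acquit. The jury size, the tie rule, and every class and method name here are hypothetical illustrations, not Periscope's actual implementation.

```python
from __future__ import annotations

import random
from dataclasses import dataclass, field

# Hypothetical parameters. The article specifies the 60-second first penalty
# and the rest-of-stream second penalty; the jury size is an assumption.
JURY_SIZE = 5
FIRST_BAN_SECONDS = 60.0


@dataclass
class Broadcast:
    """Per-stream moderation state (a hypothetical model, not Periscope's code)."""
    viewers: list[str]
    convictions: dict[str, int] = field(default_factory=dict)
    muted_until: dict[str, float] = field(default_factory=dict)
    banned_for_stream: set[str] = field(default_factory=set)

    def select_jury(self, reporter: str, offender: str, rng: random.Random) -> list[str]:
        # Assumption: neither the reporter nor the accused may sit on the jury.
        pool = [v for v in self.viewers if v not in (reporter, offender)]
        return rng.sample(pool, min(JURY_SIZE, len(pool)))

    def resolve_report(self, offender: str, votes: list[bool], now: float) -> str:
        """votes: one boolean per juror, True meaning 'this comment is abusive'."""
        # Strict majority required; a tie (or an empty jury) means no action.
        if sum(votes) * 2 <= len(votes):
            return "acquitted"
        count = self.convictions.get(offender, 0) + 1
        self.convictions[offender] = count
        if count == 1:
            # First conviction in this broadcast: 60-second comment ban.
            self.muted_until[offender] = now + FIRST_BAN_SECONDS
            return "muted_60s"
        # Second (or later) conviction: no more comments for the rest of the stream.
        self.banned_for_stream.add(offender)
        return "muted_for_stream"

    def can_comment(self, user: str, now: float) -> bool:
        if user in self.banned_for_stream:
            return False
        return now >= self.muted_until.get(user, 0.0)


if __name__ == "__main__":
    stream = Broadcast(viewers=["troll", "a", "b", "c", "d", "e", "f"])
    jury = stream.select_jury(reporter="a", offender="troll", rng=random.Random(0))
    print(jury)
    print(stream.resolve_report("troll", [True, True, True, False, False], now=0.0))  # muted_60s
    print(stream.can_comment("troll", now=30.0))  # False
    print(stream.resolve_report("troll", [True, True, False], now=90.0))  # muted_for_stream
```

Keeping the state per broadcast mirrors the article's description that a second conviction only counts "in the same broadcast"; a platform-wide record of repeat offenders would need separate storage.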

The flash jury method has a few benefits over a more traditional review system, in which a small team of employees or volunteers examines a queue of flagged comments. For one thing, it preserves the context in which the comment was made; a vulgar remark that would be offensive in one setting may be appropriate if the live stream features a risqué stand-up comedy act, for example.

It also works quickly. In a demonstration of the system last week, the whole process, from the posting of an abusive comment to enforcement of the ban, took less than 10 seconds. Another benefit: It takes the onus of moderating comments off the broadcasters, allowing them to focus on filming and interacting with other viewers.

Aaron Wasserman, a Periscope engineer who has been with the company since its founding, says the platform will gather data about how this system is used in order to improve it over time. For example, if it turns out that all first-time offenders go on to become second-time offenders, Periscope may make the punishment for a first-time offense more severe than a 60-second ban.

Why not just fully ban any user who posts abusive comments? “It’s important to offer a path to rehabilitation,” Wasserman says. “That person may not have intended for it to be as harmful as it really was. We’re inviting you to stick around and do better next time.”

While this new system is an innovative approach to corralling harmful comments, it doesn’t prevent criminal or abusive broadcasts, like the recent case in which a teenage girl was accused of streaming her friend’s rape. Flagged broadcasts will still be reviewed by Twitter’s safety team—the flash jury moderation will apply only to comments within a stream.

Periscope users will be able to opt out of serving on these juries, but Wasserman says he thinks most people will want to participate. “One thing that was unexpected” in tests of the system, he says, is that “when people are put on a flash jury, it’s a surprisingly engaging experience. They didn’t feel like it was a pop-up ad—they felt like they were participating in the broadcast.”

Whether or not this approach works to reduce harassment will depend on how Periscope continues to refine it over time, such as by identifying repeat offenders and keeping them off the platform altogether. “I definitely do not believe this is going to solve Internet abuse 100%,” Wasserman says. “I don’t think there is a silver bullet to Internet abuse. I hope that we will be able to learn from the analytics of how this works. . . . Ultimately, the goal is to make it as hard as possible to get gratification from being abusive.”

By making its users judge the value or harmfulness of comments, rather than taking on that task itself, Periscope is letting majority rule set the standards of decency on its platform. That could result in a safer, friendlier social network—or, depending on the community, it could result in a platform that, like so many places on the Internet, is openly hostile to women and minorities.

Fast Company
