We’re All Being Recorded. Who Reaps The Rewards?

The sunlight is nearly blinding as it bounces off the squat, square, perfectly beige and banal government complex across the street. In the foreground, a police officer squints into the camera, shrugs, and shakes his head.

Man’s Voice: Look, I’m out in public taking photographs. And beyond that, it’s none of your business what I’m doing.

Officer 1: Did you say it’s none of our business?

Man’s Voice: Yep. If I’m not being detained, you guys have a nice day.

At that, another officer pulls out a pad and the man is told he’s being detained. Officers pat him down to ensure he’s not carrying any weapons. When the search is complete, he gets a warning.

Officer 2: Here’s the thing: just make it easy for all of us. We’ll get your name; we’re going to make sure you’re not someone who’s out here trying to kill us.

Man’s Voice: Is it illegal to take pictures of you? Is it illegal?

There’s a long pause as the officer stares into the camera—at least, it seems that’s what he’s doing. It’s impossible to know exactly where he’s looking, as his eyes are obscured by his mirrored sunglasses. He tightens his lips and sets his jaw; the furrow between his eyebrows deepens. Finally, he answers the man: “No.”

The sun is just as bright in a second video recorded that day, but this time the glare glances off the front hood of a police cruiser. The dashcam observes the officer waiting patiently for the traffic light to turn green before he can reach a station wagon about to leave a parking lot. The officer, whose voice sounds familiar, reports his location to radio dispatch, then orders the driver to roll down his window. The officer informs the driver that he got pulled over because his license plate is partly obscured.

The driver quickly informs the officer that his license used to qualify him to drive commercial vehicles, but that qualification has been suspended—something he knows might cause confusion when his license number is radioed into dispatch. Sure enough, the dispatcher says the driver’s license is invalid, and the officer arrests him, not merely for driving with a suspended license but for doing so knowingly. The day wasn’t going quite the way Joe Gray had planned.

Gray is a member of a citizen group that records videos of authorities in public and posts them at the website Photography Is Not a Crime. Earlier in the day, he had been conducting a “First Amendment audit,” recording officers coming and going from the Orlando police department to see if they would challenge his right to do so. He hadn’t expected one of the officers to follow him from the scene and arrest him for a minor traffic violation because of it.

Gray didn’t record the traffic stop or his arrest. However, the police car’s dashcam was recording, and that audio captured the moment the police officer changed his frequency from general broadcast to direct car-to-car communication, which he used to consult the officer who had given Gray the warning across the street from the police department.

Officer 2: Hey, do you want me to go straight to BRC [the booking and release center] or do anything special?

Officer 1: [unintelligible]

Officer 2: I’m sorry?

Officer 1: Frankie’s on his way over there, and we’re going to find the L.T. [lieutenant] to see if there’s anything else we need to do. You’re not recording right now while you’re talking?

Officer 2: I’m sorry?

Officer 1: Are you still recording yourself?

Officer 2: Uh, I only have same-car right now, but the mic is running.

When it came time for Gray to challenge his arrest in court, he was lucky: the “Sunshine State” of Florida has the strongest public records laws in the United States. He could point to the note in a state attorney’s office newsletter urging police officers to pull over drivers for an obstructed license plate only if it was impossible to identify the plate number. He could also refer to a Department of Motor Vehicles memo explaining why the driver of a noncommercial vehicle should not be arrested for having a disqualified commercial license.

Eventually, he could replay the dashcam footage, which made clear that his tag number was visible—and he’d also get to hear the police officers discuss whether to take him straight to booking or do something “special” to him.

As these Orlando police officers learned, because sensors have become so small and storage has become so cheap, today anything and everything you say and do might be recorded. The sign has flipped: the default condition of life has shifted from “off the record” to “on the record.”

Consider the number of networked cameras that capture data about you as you go about your day. Surveillance cameras are mounted in offices, stores, and public transportation; on city streets, ATMs, and car dashboards. You or your neighbors may have installed cameras to watch over your front door; you may have a webcam watching over your valuables—perhaps even your children. Security cameras are virtually everywhere, installed both to provide a record if a crime is committed and to deter people from committing a crime in the first place. Based on an exhaustive survey of the number of such cameras in one English county in 2011, it was estimated there were 2 million surveillance cameras in the United Kingdom alone—about one camera for every thirty people.

Generalized to the rest of the world, that estimate suggests about 100 million cameras watching public spaces, all day and all night. Yet this is only one-tenth of the 1 billion cameras on smartphones. Within the next few years, there will be one networked camera for every single person on the planet.

Then think about the other sensors on our smartphones. There’s at least one microphone; a global positioning system receiver, which determines your location based on satellite signals; a magnetometer, which can serve as a compass to orient your phone based on the strength and direction of the earth’s magnetic field; a barometer, which senses air pressure and your relative elevation; a gyroscope, which senses the phone’s rate of rotation; an accelerometer, which senses the phone’s movement; a thermometer; a humidity sensor; an ambient light sensor; and a proximity sensor, which deactivates the touchscreen when you bring the phone to your face. That’s a dozen or so networked sensors per phone, bringing us to a total of more than 10 billion sensors on mobile phones alone. And that’s not counting cars, watches, thermostats, electricity meters, and other networked devices.

If technology continues to follow Moore’s Law, doubling the computing power available at the same price every 18 months, we will very likely be sharing the world with roughly 1 trillion sensors by 2020, in line with projections from Bosch, HP, IBM, and others.
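The back-of-the-envelope figures above can be reproduced in a few lines. This is only a sketch: the UK population figure is an outside approximation, not a number from this essay, and treating sensor counts as doubling on the same 18-month schedule as computing power is a simplifying assumption made for illustration.

```python
import math

# Figures quoted in the essay
UK_CAMERAS = 2_000_000
SMARTPHONE_CAMERAS = 1_000_000_000
SENSORS_PER_PHONE = 12           # "a dozen or so"
DOUBLING_PERIOD_YEARS = 1.5      # "every 18 months"

# Assumption: approximate UK population around 2011 (not from the essay)
UK_POPULATION = 63_000_000


def people_per_camera(population, cameras):
    """Average number of people for each camera."""
    return population / cameras


def project_growth(count, years, doubling_period=DOUBLING_PERIOD_YEARS):
    """Count after `years` if it doubles once per doubling period."""
    return count * 2 ** (years / doubling_period)


def doublings_needed(start, target):
    """How many doublings separate a starting count from a target count."""
    return math.log2(target / start)


phone_sensors = SMARTPHONE_CAMERAS * SENSORS_PER_PHONE

print(f"People per UK camera: {people_per_camera(UK_POPULATION, UK_CAMERAS):.1f}")
print(f"Sensors on phones alone: {phone_sensors:,}")
print(f"After three more years of doubling: {project_growth(phone_sensors, 3):,.0f}")
```

The essay does not give a starting year or a starting count for all networked devices combined, so the helper functions are best used to explore scenarios rather than to confirm any particular projection.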

To record the Orlando Police Department, Joe Gray used a standard video camera, a device that could be identified from many meters away. He did not try to hide his camera, since one of his motivations for recording the officers was to see if they would challenge his right to collect the data. In contrast, while the officer who pulled Gray over knew his patrol car had a live dashcam with a microphone, Gray was never notified during his arrest that the encounter was being recorded. Further, Gray always intended to share his video on the internet. The police department may never have shared the dashcam footage if it hadn’t been forced to do so under the state’s “Sunshine” laws.

Not all governments give citizens the right to access the type of records that helped Gray fight his arrest. Most organizations that collect data about a person do not share it with the subject. These records of our interactions will transform expectations about privacy. Even more fundamentally, they will also change the context and conditions of our interactions. We have significant issues to consider. Why do the laws currently treat photos and videos taken within public view differently from sounds taken within public earshot?

Which new types of sensors will be treated like cameras, and which like microphones? Why would a person’s “right to record” depend on the type of sensor used? Should access to data be solely put in the hands of the person or entity that owns the sensor, or should all the participants in the event have access to the recording? If a person records an event involving another person, who has a “right” to decide how those data are used? If everything is recorded, will it encourage “better” behavior? And how will the lack of any recording be interpreted?

The complications of continuous recording can be seen in another case involving the Orlando police. In court, a driver successfully challenged a charge of driving under the influence of alcohol or drugs by noting that dashcam video of the arrest wasn’t available. It didn’t matter to the judge whether the camera was broken or the officer forgot to turn it on: The lack of video to “corroborate” the officer’s arrest report meant the officer’s word was no better than hearsay.

As our world gets increasingly sensorized, we will need to come to terms with the trade-offs of collecting and sharing data about our bodies, our feelings, and our environment that others might put to some future, unknown use. Now is the time to set down the conditions for how the data can be used so that the rewards—to individuals and to society—will outweigh the risks. Small differences in the rules we set today may have tremendous consequences for the future. I believe it is essential to examine how sensor data can be utilized following the principles of transparency and agency.

A Personalized Point Of View

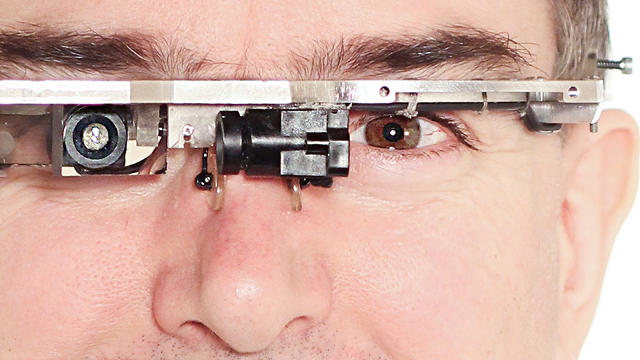

Why does a camera worn at all times bother people any more than a smartphone carried at all times? I was a user of the Explorer version of Google Glass and, as a social experiment, wore my Glass pretty much all the time for nearly a year. Quite a few of the people who became unsettled when they saw I was wearing Glass would probably have been comfortable with me holding my phone. I wondered if the difference was the ease of recording hands-free videos, or the difficulty for the other party of detecting when Glass was recording. Still, an attentive person might notice a reflection on the display prism—unless, that is, his attention was focused on some nearby spectacle rather than on my spectacles.

Further, while I was wearing Glass, people also seemed annoyed when they thought I was splitting my attention between our conversation and the display. I decided to “run” an informal experiment in which I would pretend to look up information about the individuals I was talking to, or pretend that I was receiving automatic “image search” results based on what was in front of me (including their faces). My companions were dismayed. They felt this put them at a disadvantage in the conversation. They were used to others having information at their fingertips, but Glass put it at the tip of my nose, where they had no way to see for themselves when I was focusing on them or on a screen.

Another possibility was that people were unsettled that I might share the conversation. I could upload a recording to the cloud, or send the video stream via my mobile phone, for others to watch in real time. What would stop someone from inviting a third party to surreptitiously listen in on a conversation? What would stop her from storing the conversation so that it could be shared with some interested party in the future?

Many people feel uncomfortable talking with someone who refuses to take off a pair of mirrored sunglasses, because they aren’t able to see her eyes and get a better sense of what she is feeling about the conversation. Glass may not obscure the eyes, but it still challenges norms of conversation. The various reactions to Glass suggest that people have three main fears about living with ubiquitous sensors, and how they are either violating social conventions or forcing them to change.

First, there is the fear of information imbalance, of data being retrieved about others or the situation in a way that will alter the outcome of the interaction. When one side of a conversation has access to information and the other does not, the power imbalance can be unbearable. People may be worried about the “lemon” problem, whereby asymmetries in access to information cause them to get ripped off (as in the classic example of a used-car salesman hiding information from a buyer). There’s also the issue of distractions from the immediate context, which heightens feelings of insecurity about whether the attention of your conversational partner is focused on you or something else.

Second, there is the fear of dissemination, of others sharing data with people, companies, or the web without permission. If a new person joins a group meeting in person, each conversationalist can shift the topic in response; the new “audience” is patently transparent. This transparency doesn’t occur automatically with cameras, which is why organizations stick up warning notices. This allows individuals to decide how to act in view of the fact that there might be repercussions for having their actions witnessed and analyzed by the camera’s owner or associates. Glass itself served as a warning notice, but it put people on alert all the time, even when the feed wasn’t on.

Third, there is the fear of permanence, of others recording data and saving the data somewhere. In this case, the fear comes down to an uncertainty about how the data might be analyzed or used in the future. With no guarantee that the consequences of the recording will be positive, it might be best to assume the worst. In addition, laws differ from place to place about who is allowed to record what without permission. For instance, an individual or organization has the right to install a camera that records the movements of people visiting their property, but the people visiting the property don’t have the same right. Regulation of privately owned cameras attached to drones, which can observe conversations from the air, will take years to be hashed out.

Perhaps the person with the most experience wearing a recording device is Steve Mann, a professor of electrical engineering and computer science at the University of Toronto. Mann has been wearing different versions of a “Digital Eye Glass” for more than three decades. While a student in the 1980s at MIT, where Mann was a founding member of the Wearable Computing Project, he rarely removed his version of Glass and constantly experimented with applications for it, including transmitting a live web feed of everything he saw (in the early 1990s, when there were only a handful of live feeds on the web). He also coined the term “sousveillance” to describe his video and audio recordings of organizations that had surveillance cameras on their premises. Surveillance is conducted from above, sousveillance from below.

Mann is an advocate of using wearable computers for personal empowerment. He believes an “always on” computer allows people to capture data they do not yet realize could be relevant in the future, even only a few minutes later. To demonstrate this, he has played around with methods to augment human senses and memory with wearables—for example, by zooming in on objects in the distance or cueing up super-slow-motion replays of information that the eye cannot process in real time. In Mann’s experience, wearables allow people to filter incoming data—for example, by obscuring ads they don’t want to see.

While such features are interesting, I believe the full value of sensor data will be unleashed only if they are shared with and refined by companies that can combine and analyze data from many different sources. In my year experimenting with Glass, I recorded many weeks’ worth of video. However, I reviewed only a few minutes of the videos and never actually used them to help me make any decision or learn anything about my behavior. I wasn’t able to efficiently search through and retrieve relevant parts of a video I had taken, let alone run the data through a data refinery in real time to get feedback and suggestions for what to do. I had the tools to collect data but I didn’t have the tools to find data that were relevant to my current context, let alone analyze the data to recognize patterns or generate predictions.

In the next few years, as work in artificial intelligence progresses and data refineries automate the process of labeling data, this will change. Companies are discovering the value of analyzing everything from where their customers walk in a store to how concentrated their employees are, and the technology will become affordable enough for most organizations. Increasingly, we’ll all depend on sensor data to give us recommendations pertinent to our given situation.

What is relevant to you varies based on your context. Take the search engine problem involved in a term like “jaguar”—which might be a cat, a car, or a computer operating system. To handle such ambiguous terms, algorithms rank search results based on a variety of content categories, highlighting the information that best matches your intentions. Knowing your context helps refineries improve the relevance of their outputs to you. For instance, let’s say you search for “jaguar” on your phone while standing in a zoo. If the app knows your geolocation, it can compare your coordinates to a map of the area and rank content about the cat higher in your results. If you’re standing in the parking lot of the zoo, the app could use the phone’s cameras to detect whether you might be curious about the newest model of a luxury car in front of you rather than exploring a facet of big-cat life after the day’s visit.
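To make the “jaguar” example concrete, here is a minimal sketch of context-aware re-ranking. Everything in it is hypothetical: the result titles, the base scores, the place labels, and the boost values are invented for illustration, and it assumes the app has already turned the phone’s coordinates into a place label such as "zoo". It is not a description of how any real search engine works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Result:
    title: str
    category: str      # "animal", "car", or "software"
    base_score: float  # hypothetical context-free relevance


# Hypothetical boosts: how much each kind of place favors each category.
CONTEXT_BOOSTS = {
    "zoo": {"animal": 2.0},
    "parking_lot": {"car": 2.0},
}

RESULTS = [
    Result("Jaguar (luxury car)", "car", 0.5),
    Result("Jaguar (big cat)", "animal", 0.4),
    Result("Jaguar (operating system)", "software", 0.3),
]


def rank(results, place=None):
    """Order results by base score multiplied by any boost for the place."""
    boosts = CONTEXT_BOOSTS.get(place, {})
    return sorted(
        results,
        key=lambda r: r.base_score * boosts.get(r.category, 1.0),
        reverse=True,
    )


for place in (None, "zoo", "parking_lot"):
    top = rank(RESULTS, place)[0]
    print(f"{place}: {top.title}")
```

With no context the car outranks the cat; inside the zoo the cat moves to the top. A real refinery would learn such weights from data rather than hard-code them.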

Not all contextualized search terms are as innocent as a jaguar in the jungle, however. If a person searches for “jasmine” after a long night spent at a club, it’s highly unlikely that he’s looking for information about the flower in the hope of squeezing in some gardening time before dawn. He might be looking for the address of a 24-hour Chinese food take-out that’s on his way home, or—just maybe—he might be tempted to check out the live models on the adult entertainment site Livejasmine. Is he wandering around downtown, or is he in his bedroom? A data refinery can get him where he wants to be more efficiently, using current and recent geolocation data to personalize his results.

Taking context into account can also help us make better decisions for the long term—in Danny Kahneman’s terms, “thinking slow” rather than “thinking fast.” For instance, at least one bank considered offering a customer service code-named “no regrets” that looked at an individual’s past transactions and current context. You walk up to an ATM in Las Vegas at 4 a.m. and ask to withdraw a thousand bucks. Instead of spitting out the bills, the machine prompts: “Are you really sure you want to withdraw that much cash right now? People like you who confirmed ‘yes’ in a similar situation tended to regret it later.”

If the sensor is in your hands, you can choose the conditions when you share your context with the data refineries. But some of the trillion sensors that will record your life over the next decade will be controlled by banks, stores, employers, schools, and governments. There’s a growing interest in using far more personal information than just where you are at any given point in time, and that interest encompasses who you’re with, how you’re feeling, and where your focus is—compared to where it “ought” to be.

The Long Eye Of The Law

Many police departments maintain a private database of dashcam and bodycam video recordings that are made available to the public only after a tortuous legal process. When the Los Angeles Police Department deployed 7,000 body-mounted cameras among its force in the summer of 2015, it announced that a recording would be shared with the public only if a lawsuit cited the video as evidence. But that wasn’t the worst asymmetry of the system. The bodycams did not automatically record everything. The decision to record—or not record—was left up to the officer. Further, a very small group of people—the department’s very own officers—were given unbridled access to the database. They could pull up and review any footage collected by bodycams before writing incident reports, including instances in which their conduct was subject to an investigation. This gave them the power to describe events in a way that would shield them from disciplinary action, by taking advantage of “blind spots” in the video. Why is there one rule for the police and another for the citizens they’re pledged to serve?

The ACLU has tried to battle this blatant imbalance of power by providing a cloud platform that allows individuals to automatically upload their own recordings in real time in case they might be useful in the future. One of the apps gives users the option of alerting others in the area that an incident is being recorded, in case they are in a position to collect another point of view.

Some departments, such as the Seattle Police Department, are embracing more transparency. Twelve officers in the department were outfitted with bodycams as part of a pilot program in December 2014. “We were talking about the video and what to do with it, and someone said, ‘What do people do with police videos?’” the department’s chief operating officer told the New York Times. Instead of dumping the videos into a private database, the department decided to upload them all to YouTube. In order to keep on the right side of current laws, much of the footage had to be redacted: Faces and other identifying features were blurred and in some cases the audio tracks were removed.

Despite these redactions, the bodycam trial provided unprecedented access to data about the police department’s conduct. If an entrepreneurial data detective wanted to, she could analyze the videos to uncover patterns in the city’s policing, perhaps looking at how officers approach suspects, witnesses, and other members of the public. The data could be used to propose A/B tests to the police department to determine whether changes in training or procedures might improve the interactions between the police and community. Residents would have a powerful tool for improving their community.

Such applications don’t protect us from the possibility that the data will be used against us, unfortunately. We cannot stop every click, view, connection, conversation, step, glance, wheeze, and word from being collected. But we can demand a set of rights with respect to our social data that ensures the fullest potential of transparency and agency.

Andreas Weigend is the former chief scientist of Amazon.com and author of DATA FOR THE PEOPLE: How to Make Our Post-Privacy Economy Work for You, from which this essay is excerpted.

Source: Fast Company
