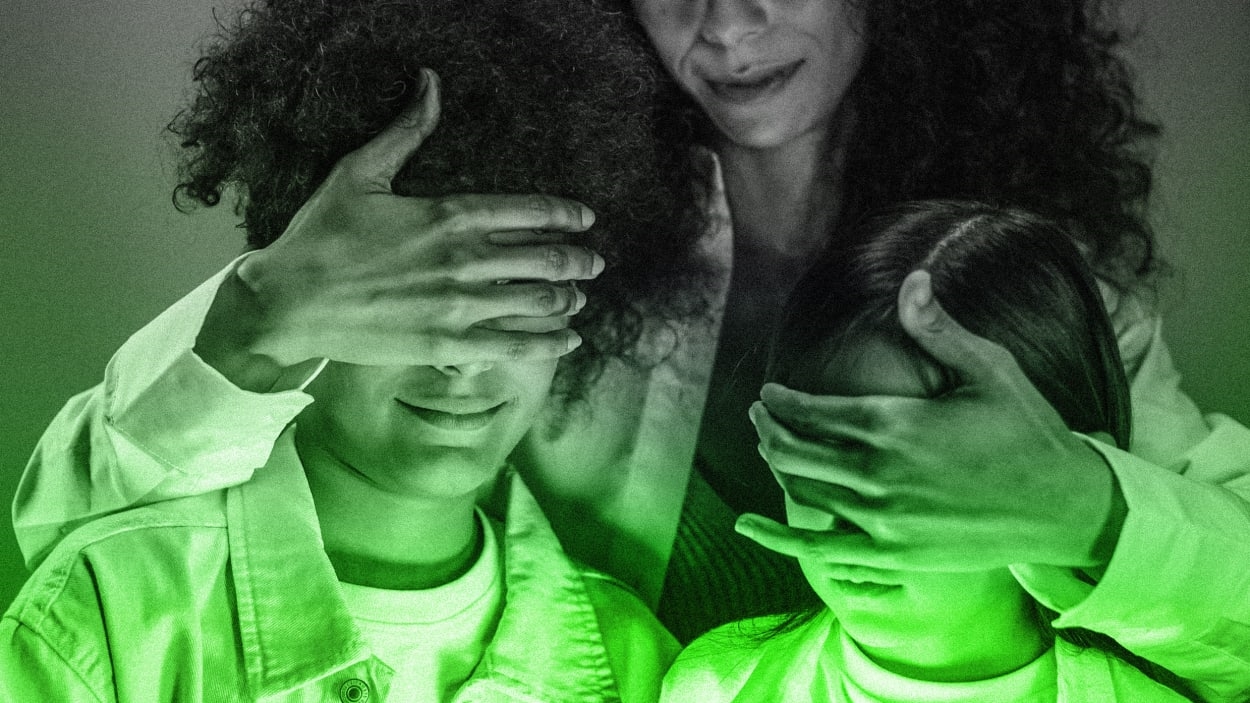

Both TikTok and Meta introduce new parental controls

As concerns rise about children’s exposure to social media, two of the leading sites in that space are giving parents more control over what their kids see, ideally helping to protect them from inappropriate content.

On Tuesday, TikTok and Meta separately unveiled new initiatives meant to assist parents. And more could be on the way.

TikTok announced it was giving parents the ability to filter out videos they don’t want children to see. That’s a new feature for the app’s Family Pairing functionality, which links the accounts of adults and teens, giving parents control over features like screen time.

“To adapt this feature for Family Pairing, we engaged with experts, including the Family Online Safety Institute, on how to strike a balance between enabling families to choose the best experience for their needs while also ensuring we respect young people’s rights to participate in the online world,” TikTok wrote in a blog post.

In the interest of transparency, teens will be able to see which keywords their parents have blocked (which is likely to lead to some family arguments). This will live on top of the company’s Content Levels system, which TikTok says blocks mature themes from users under 18.

Teens are the prime audience for TikTok, of course. And the company is still under fire about how it handles data for its users, with specific concern about the level of knowledge China could be gaining about a generation that’s fast becoming a critical part of the U.S. workforce and economy.

Meta, meanwhile, announced it was rolling out parental supervision tools for Messenger and Instagram DMs that will let parents see how their child uses the services, as well as any changes to the child’s contact list.

Parents will not be able to read their children’s messages. They will, however, be able to review which types of users—friends, friends of friends, or no one—can send messages to their teens. They’ll also receive alerts if the teen makes changes to their privacy and safety settings.

Should a child report another user to Meta, the parents will be notified as well.

These new features, like those at TikTok, come on top of existing parental controls for Messenger for kids 18 and under.

For Meta, the changes come nearly three weeks after a Wall Street Journal investigation alleged pedophiles used Instagram and its messaging system to purchase and sell sexual content featuring minors. Meta, in response to the accusations, told the Journal in a statement, “Child exploitation is a horrific crime. We’re continuously investigating ways to actively defend against this behavior.”

Meta, in its blog post announcing the updated security measures, said more parental controls are on the way.

“Over the next year, we’ll add more features to Parental Supervision on Messenger so parents can help their teens better manage their time and interactions, while still balancing their privacy as these tools function in both unencrypted and end-to-end encrypted chats,” the company wrote.

Social media companies have been beefing up parental controls at a faster pace since January of this year, when Surgeon General Vivek Murthy said 13-year-olds were too young to join the sites, warning that the mental health impacts could be considerable.

That’s spurring plenty of legislative action. A bipartisan proposal, introduced in the Senate in April, would set a national age limit for social media users, effectively banning anyone 12 and under from using the apps. California, meanwhile, has introduced a children’s online safety bill that would require social media sites (and other platforms) to install new safety measures that would curb some features for younger users, such as messaging.
