Despite Facebook’s fact checks, it’s losing the war on misleading news

After everything that’s happened since Russia used disinformation on social media to help Donald Trump in 2016, people are even more engaged with fake news on Facebook than they were back then. Actually, a lot more.

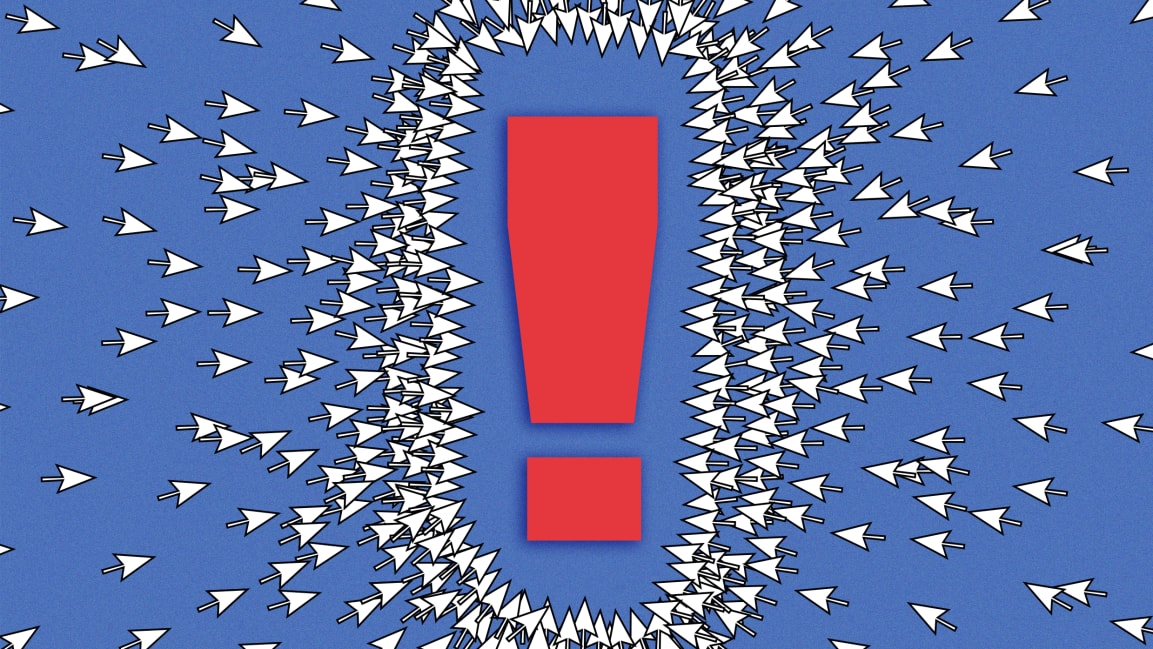

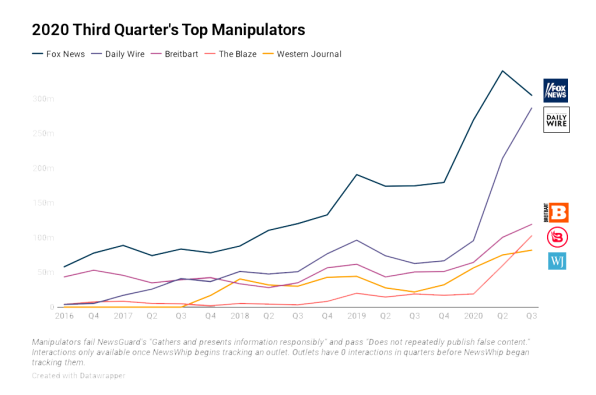

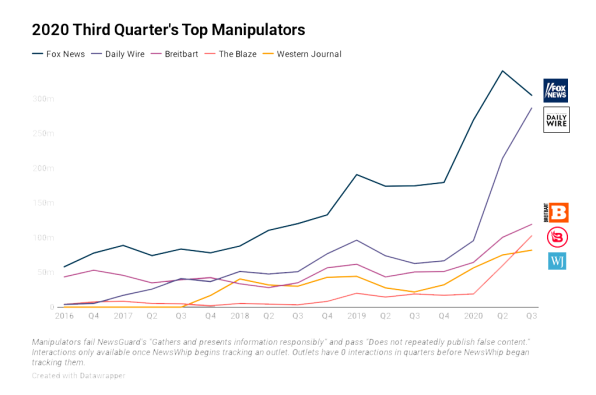

That’s the finding of a new study from the German Marshall Fund, a nonpartisan, nonprofit organization founded in 1972 with a financial gift from Germany to the United States. The study reports that Facebook engagement with content from outlets that repeatedly publish inaccurate or misleading articles has increased 242% since the third quarter of 2016. Links to such content have flourished on Facebook in this awful election-slash-pandemic year, rocketing up 177% in 2020 alone.

“A handful of sites masquerading as news outlets are spreading even more outright false and manipulative information than in the run-up to the 2016 election,” says GMF senior fellow and director Karen Kornbluh, who cowrote the study. The GMF worked with the media literacy startup NewsGuard and the social media analytics company NewsWhip to identify and track the sources of disinformation.

The liking and sharing of this content is mainly a right-wing phenomenon. Most of the interactions—62%, according to GMF—come from the top 10 misinformation superspreaders. These include Breitbart (“Colorado Secretary of State Encourages Non-Citizens, Deceased to Register to Vote”), The Blaze (“Hydroxychloroquine is SAFER than Tylenol: America’s most ‘dangerous’ doctors speak out”), The Federalist (“The Left Is Planning To Litigate A Biden Loss Into A Military Coup”), and others. The researchers point out, however, that many left-leaning sites also circulate highly partisan and misleading news stories.

Much of the growth in engagement around inaccurate and misleading news comes from publishers NewsGuard defines as “Manipulators.” These outlets, such as Fox News and Breitbart, often present claims that are not supported by evidence, or egregiously distort or misrepresent information to make an argument, but stop short of printing demonstrably false information. For example, Breitbart’s headline “Colorado Secretary of State Encourages Non-Citizens, Deceased to Register to Vote” is not completely untrue, but it is misleading. The Colorado Secretary of State’s office sent mailings (not ballots) to everyone it believed might be eligible to vote in the state, and some of those mailings inadvertently went to about a dozen people who were deceased or not citizens.

Facebook relies on thousands of human moderators, aided by artificial intelligence algorithms, to detect disinformation. Rather than delete inaccurate news articles, the company’s policy is to label them with links to factual information and down-rank them so that people see them less often in their news feeds. The GMF study paints a damning picture of how well that policy actually works.

“Engagement does not capture what most people actually see on Facebook,” a Facebook spokesperson said in an email. “Using it to draw conclusions about the progress we’ve made in limiting misinformation and promoting authoritative sources of information since 2016 is misleading. Over the past four years we’ve built the largest fact-checking network of any platform, made investments in highlighting original, informative reporting, and changed our products to ensure fewer people see false information and are made aware of it when they do.”

This defense is less than satisfying, because Facebook doesn’t release numbers showing how many users specific news articles reach. We do know that highly partisan and/or controversial articles get liked, discussed, and passed around far more than straight news articles from the Associated Press or Reuters, despite Facebook’s efforts to promote those legit news sources.

As for the content labels, they’re not likely to influence the type of people who engage with articles from Breitbart or The Federalist. Many in that audience are deeply suspicious of the Silicon Valley company that’s applying the labels, and even more skeptical of the mainstream media outlets to whose authoritative coverage the labels often point. Such users are more likely to conclude that the labels are simply an attempt by Facebook to suppress “conservative” viewpoints.

In fact, what Facebook wants is to stay out of the fray whenever possible. CEO Mark Zuckerberg has said numerous times that his company shouldn’t be the “arbiter of truth” on the internet, which helps explain why the company has historically avoided simply deleting bad content. He once cited Holocaust deniers on Facebook as an example, saying people shouldn’t be punished for “getting things wrong.”

The spread of highly partisan, inaccurate news has real-world effects, and Facebook has played a key role in fostering the U.S.’s nasty partisan climate. The German Marshall Fund’s data on rising engagement with misleading news indicates that the steps the company has taken in response are falling short. What must happen before Zuckerberg evolves his thinking here?

(33)