Exclusive: The iPhone 12 Pro camera will use Sony’s lidar technology

This year’s iPhones will get the same kind of Sony lidar depth camera that Apple put in the 2020 iPad Pro it released in March, a source with knowledge of the company’s plans has told Fast Company. Apple has been planning the feature for years, our source says, and the technology will show up in the top-tier “Pro” iPhone models the company is set to announce this fall.

Manufactured by Sony, this lidar system uses pulses of light to precisely measure the distances of objects from the camera lens. Using this data, the camera can autofocus more precisely, and better differentiate between foreground and background to create effects such as Portrait mode. The depth camera will also help augmented reality apps place digital objects within real-world settings more realistically.

Since 2017’s iPhone X, high-end iPhones have had a different type of front-facing 3D camera system, branded TrueDepth, which is used for Face ID authentication and Animoji. That system projects 30,000 infrared dots on the surface of the user’s face to form a 3D map of its contours. The system offers high levels of accuracy at less than a meter of distance, so it’s fine for measurements within arm’s length of the phone.

How it works

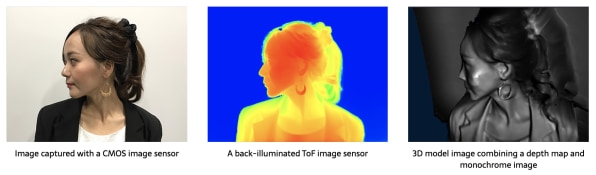

The iPhone’s new lidar system will measure the distance of objects both close to the camera and far away. The system uses a “direct time-of-flight” approach, in which a vertical-cavity surface-emitting laser (VCSEL) sends out bursts of photons. They travel at the speed of light and reflect off objects or surfaces within the camera’s view. Some of the photons bounce back to a small sensor housed near the camera. Software then uses the time of their round trip to derive the distance of the objects or surfaces from the camera lens. It’s very similar to the way radar works, only lidar uses laser light instead of radio waves.
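The round-trip arithmetic can be sketched in a few lines of Python. This is only an illustration of the general direct time-of-flight relationship (distance equals the speed of light times the round-trip time, divided by two), not Apple’s or Sony’s actual software; the function names are hypothetical.

```python
# Minimal sketch of direct time-of-flight ranging.
# Assumption: function names are illustrative, not from any Apple or Sony API.

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in a vacuum


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the distance in meters to the reflecting surface.

    The photons travel to the object and back, so the one-way
    distance is half of the total path covered in the round trip.
    """
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def round_trip_for_distance(distance_m: float) -> float:
    """Return the round-trip time in seconds for a surface distance_m away."""
    if distance_m < 0:
        raise ValueError("distance cannot be negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    for meters in (0.5, 2.0, 5.0):
        nanoseconds = round_trip_for_distance(meters) * 1e9
        print(f"{meters} m -> round trip of {nanoseconds:.2f} ns")
```

An object five meters away returns its photons in roughly 33 nanoseconds, which gives a sense of how finely the sensor has to time each pulse.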

The iPhones on the market today get some sense of distance by measuring differences in their various cameras’ perspectives on an object. Since that difference (called “parallax”) is larger for nearby objects and smaller for faraway ones, it’s possible to estimate the objects’ distance. The lidar system, on the other hand, is purpose-built to measure depth and can do so far more precisely.
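To see why parallax works well up close but less well at a distance, here is a rough Python sketch using the standard textbook pinhole stereo model, in which depth equals focal length times the spacing between the cameras, divided by the parallax shift in pixels. The focal length and camera spacing below are made-up illustrative values, not iPhone specifications, and Apple’s actual depth estimation is not described by our source.

```python
# Minimal sketch of depth from parallax under a pinhole stereo model.
# Assumption: FOCAL_LENGTH_PX and BASELINE_M are hypothetical values
# chosen for illustration, not real iPhone camera parameters.

FOCAL_LENGTH_PX = 2800.0  # focal length expressed in pixels (hypothetical)
BASELINE_M = 0.012        # spacing between the two cameras in meters (hypothetical)


def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Return depth in meters: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def disparity_from_depth(focal_px: float, baseline_m: float, depth_m: float) -> float:
    """Return the parallax shift in pixels for an object depth_m away."""
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return focal_px * baseline_m / depth_m


if __name__ == "__main__":
    for true_depth in (0.5, 2.0, 5.0):
        shift = disparity_from_depth(FOCAL_LENGTH_PX, BASELINE_M, true_depth)
        # Simulate a one-pixel measurement error in the parallax shift.
        estimate = depth_from_disparity(FOCAL_LENGTH_PX, BASELINE_M, shift - 1.0)
        print(f"true {true_depth} m: shift {shift:.2f} px, "
              f"estimate with 1 px error {estimate:.3f} m")
```

Because the shift shrinks as objects get farther away, the same one-pixel error distorts the estimate much more at five meters than at half a meter. A time-of-flight measurement does not depend on the spacing between cameras in this way.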

How it improves photos

This precise distance data is added to visual data collected by the phone’s other camera sensors, giving the camera a much better understanding of the objects in front of it. It can, for example, make objects in the foreground appear sharp and distinct from the features of the background, which the human eye would naturally see as a bit more blurry and ill-defined. Dialing in those nuances could help the iPhone’s autofocus function create far more true-to-life photographs, closer to those captured by a conventional camera with a full-sized lens.

The main depth effect offered by current iPhones is Portrait mode, which gives photos the “bokeh” effect that blurs the background layer and places the foreground subject (a person, usually) in sharp focus. Sometimes, however, the software has trouble separating foreground from background. The addition of the depth camera data will go a long way toward fixing that. It may even make it possible to adjust the focus or blur of more than two layers in a photo.
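As a rough illustration of how per-pixel depth data could separate a photo into layers, the Python sketch below labels each pixel by comparing its measured distance against one or more boundary distances. It is a simplified assumption about the general idea, not a description of how Apple’s camera software works; the function name and the sample depth values are hypothetical.

```python
# Minimal sketch: splitting a depth map into layers by distance.
# Assumption: the function name and sample data are illustrative only.
from bisect import bisect_right


def split_into_layers(depth_map, boundaries_m):
    """Label every pixel with a layer index, where 0 is the nearest layer.

    depth_map is a list of rows, each a list of distances in meters.
    boundaries_m lists the distances at which one layer ends and the
    next begins; one boundary yields two layers, two yield three, etc.
    """
    sorted_bounds = sorted(boundaries_m)
    return [[bisect_right(sorted_bounds, depth) for depth in row]
            for row in depth_map]


if __name__ == "__main__":
    # A tiny 2x3 "photo": a subject under a meter away, a wall much farther.
    depths = [
        [0.8, 0.9, 4.0],
        [0.7, 2.0, 6.0],
    ]
    # One boundary: classic foreground/background split for a portrait.
    print(split_into_layers(depths, [1.5]))
    # Two boundaries: foreground, middle ground, and background.
    print(split_into_layers(depths, [1.5, 3.0]))
```

With a single boundary the result is the familiar two-layer portrait split; adding a second boundary produces a third layer, so each layer could be blurred by a different amount.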

How it improves AR

The lidar system will also make AR experiences on the phone better. The depth data, along with additional data from the other camera sensors and motion sensors, will help the AR software more accurately place digital images within layers of real-world images seen through the camera lens. The lidar system can also bring more accuracy to tasks such as measuring spaces, objects, or people. For example, a medical rehabilitation AR app might use the depth data to more accurately measure the range of motion of a patient’s arm.

[Photo: Apple]

“When you use AR apps without depth information, it’s a bit glitchy and not as powerful as it ultimately could be,” Andre Wong, Lumentum’s VP of 3D sensing, told me back in March. “Now that [Apple’s] ARKit and [Google’s] ARCore have both been out for some time now, you’ll see new AR apps coming out that are more accurate in the way they place objects within a space.”

Sony inside

The sensor chip in the iPhone’s front-facing TrueDepth camera is provided by STMicroelectronics, and many in the industry believed that company would provide the sensor chip for this year’s iPad Pro. But over the past two years, Sony’s image sensor business has flowered as it has focused on developing CMOS (complementary metal-oxide-semiconductor) image sensors. In fact, Sony changed the name of its semiconductors segment to “Imaging & Sensing Solutions” in the second quarter of 2019 to reflect its newfound source of growth.

[Image: Sony]

The move was largely fueled by Sony’s development of advanced sensor technologies that integrate computer vision AI, and by its success in winning contracts to provide image sensors for mass-market smartphones. Sony provides the time-of-flight depth-sensing modules used in high-end Samsung and Huawei smartphones, in Apple’s iPad Pro, and now in the new iPhone Pro models.

Sony has also long been a major supplier of camera sensors to Apple and other smartphone manufacturers.

What the leaks say

This year’s leaks say that Apple plans to announce four new iPhones sometime this fall. All four devices will reportedly support 5G wireless connectivity, but, as always, advancements to the camera features will also get top billing.

At the top of the line, a number of reports say, are two Pro-tier phones—a 6.1-inch device likely called the iPhone 12 Pro, and a 6.5-inch device likely called the iPhone 12 Pro Max. It’s these high-end devices that will feature the new lidar system, our source says.

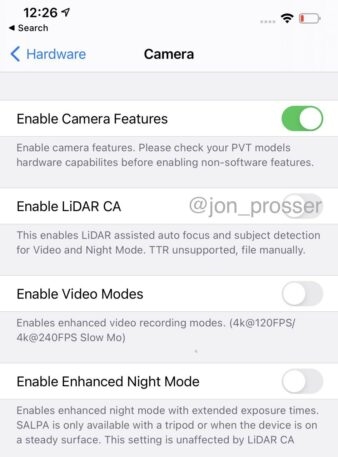

YouTuber Jon Prosser, a prolific sharer of leaks, posted a video showing screen images from the camera settings of a PVT (production validation test) version of the iPhone 12 Pro Max, in which several lidar-related camera functions are shown. There’s an option to turn on and off a mode that employs the lidar system to measure objects in low-light situations, as well as while shooting video.

For video, the lidar system would continuously track the depth of different parts of an object (such as a person) as it moves in the frame, Prosser’s image suggests. This could let the camera software detach a 3D object from the background of a video and apply effects to it, like super-slow motion. The options screen also shows a new “Enhanced Night Mode” that will open the shutter for a longer period of time to gather more light for the shot.

Apple, the leaks say, will also release a lower-priced 5.4-inch iPhone, possibly called the iPhone 12, and a larger 6.1-inch lower-priced device, possibly called the iPhone 12 Max. These devices will reportedly serve as the successors to 2018’s iPhone XR and 2019’s iPhone 11, both of which sold well.

Bloomberg’s Debby Wu and Mark Gurman report that Apple will also announce two new Apple Watch models and an update to the iPad Air, with a new Apple TV in the works for later release.

The coronavirus has slowed down the work of Apple’s parts suppliers this year, so it’s very likely the new products will arrive later than Apple’s typical fall schedule would suggest. Apple CFO Luca Maestri said during the company’s Q3 earnings call that the new iPhones will go on sale “a few weeks” after the announcement event. Apple normally holds its fall event in the first or second week of September, but this year it could happen at almost any time in September or October. The event will certainly be virtual, and will likely come in the oddly effective infomercial-style format used for the company’s WWDC presentation.
