Google is designing computers that respect your personal space

You’re binging a show on Netflix late at night when you realize that you want to grab a snack. The room is dark, so you feel around for the remote. Maybe you even hit the wrong button to pause and end up fast-forwarding instead.

Now imagine another way. Once you stand up from the couch, the movie simply pauses. And once you sit back down, it continues.

“We’re really inspired by the way people understand each other,” says Leonardo Giusti, Head of Design at Google’s Advanced Technology & Projects (ATAP) Lab. “When you walk behind someone, they hold the door open for you. When you reach for something, it’s handed to you. As humans, we understand each other intuitively, often without saying a word.”

Soli, the miniature radar sensor developed by ATAP, first debuted as a way to track gestures in midair, and it landed in Pixel phones to let you do things like air-swipe past songs: intriguing technologically, but pointless practically. Then Google integrated Soli into its Nest Hub, where it could track your sleep by sensing when you lay down, how much you tossed and turned, and even the rhythm of your breathing. It was a promising use case. But it was also just one use case for Soli, and a highly situational, context-dependent one at that.

ATAP’s new demos push Soli’s capabilities to new extremes. Soli’s first iterations scanned a few feet. Now it can scan a small room. Then, using a pile of algorithms, it can let a Google phone, thermostat, or smart screen read your body language much as another person would and anticipate what you’d like next.

“As this technology gets more present in our life, it’s fair to ask tech to take a few more cues from us,” says Giusti. “The same way a partner would tell you to grab an umbrella on the way out [the door on a rainy day], a thermostat by the door could do the same thing.”

Google isn’t there yet, but it has trained Soli to understand something key to it all: “We’ve imagined that people and devices can have a personal space,” says Giusti, “and the overlap between these spaces can give us a good understanding of the type of engagement, and social relation between them, at any given moment.”

If I were to walk right up to you, stop four feet away, and make eye contact, you’d know that I wanted to talk to you, so you’d perk up and acknowledge my presence. Soli can understand this, and the ATAP team proposes that a Google Hub of the future might display the time while I was at a distance, then have my emails waiting as I moved closer and gave it my attention.

How does it know how close is close enough to matter? For that, the team is mining the work of anthropologist Edward T. Hall, who introduced the concept of proxemics and explored it in his 1966 book The Hidden Dimension. With proxemics, he was the first to describe the social meaning of the space around our bodies: we treat anything inside 18 inches as intimate space, anything within about four feet as personal space, anything within 12 feet as social space for chatting, and anything beyond that as public space, shared by all without expectation.
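To make Hall’s distances concrete, here is a minimal Python sketch that maps a measured distance onto his four zones and picks what a screen might show. It is purely illustrative: the `Zone`, `classify_distance`, and `screen_content` names are hypothetical, the boundaries (under 18 inches intimate, up to 4 feet personal, up to 12 feet social, public beyond) are Hall’s commonly cited figures rather than anything ATAP has published, and the display policy simply mirrors the clock-then-email example above. Real radar readings would be noisy and would need smoothing.

```python
from enum import Enum

INCHES_PER_FOOT = 12


class Zone(Enum):
    """Hall's proxemic zones (commonly cited boundaries, assumed here)."""
    INTIMATE = "intimate"  # under 18 inches
    PERSONAL = "personal"  # 18 inches up to 4 feet
    SOCIAL = "social"      # over 4 feet up to 12 feet
    PUBLIC = "public"      # beyond 12 feet


def classify_distance(distance_inches: float) -> Zone:
    """Map the distance between a person and a device onto a proxemic zone."""
    if distance_inches < 0:
        raise ValueError("distance must be non-negative")
    if distance_inches < 18:
        return Zone.INTIMATE
    if distance_inches <= 4 * INCHES_PER_FOOT:
        return Zone.PERSONAL
    if distance_inches <= 12 * INCHES_PER_FOOT:
        return Zone.SOCIAL
    return Zone.PUBLIC


def screen_content(zone: Zone, paying_attention: bool) -> str:
    """Hypothetical policy: show the clock from afar; show email only when
    someone is within personal distance AND looking at the screen."""
    if zone in (Zone.INTIMATE, Zone.PERSONAL) and paying_attention:
        return "email"
    return "clock"


if __name__ == "__main__":
    for inches in (12, 48, 100, 200):
        zone = classify_distance(inches)
        print(inches, zone.value, screen_content(zone, paying_attention=True))
```

Requiring both proximity and attention is one way to keep a screen from reacting to someone who merely happens to be standing nearby.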

ATAP is codifying this research into its own software through a few specific movements. It can tell when you “approach,” pulling up UI elements that may have been off the screen earlier. But walking up to a device and having the device acknowledge you is a relatively simple task. The trickier work ATAP demoed showed how all the murkier, in-between situations might be handled.

They trained their system to understand that if you walked close by a screen without glancing in its direction, you weren’t interested in seeing more. They call this “pass,” and it would mean that my Nest thermostat wouldn’t light up every single time I walked by.

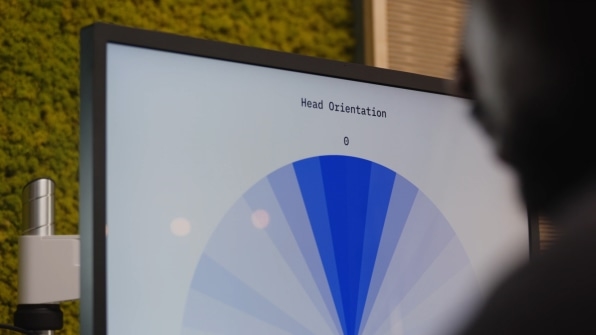

They also trained Soli to read which way you’re facing, even when you’re standing close. They call this “turn,” and it already has a killer use case for anyone who’s tried to cook a recipe from a YouTube clip.

“Let’s say you’re cooking in the kitchen, watching a tutorial for a new recipe. In this case, [turning] can be considered and maybe pause a video when you’re grabbing an ingredient, then resume when you’re back,” says Lauren Bedal, design lead at Google ATAP. “Cooking is a great example, because your hands could be wet or messy.”

Finally, the system can tell if you “glance.” The iPhone already tracks your face to see whether you’re looking at it, unlocking the screen or displaying messages automatically in response. This absolutely counts as a glance, but ATAP is considering the gesture on a larger scale, like glancing at someone from across the room. Bedal suggests that if you were talking on the phone and glanced across the room at your tablet, the tablet might automatically offer to turn the call into a video call. But I imagine the possibilities are much broader. For instance, if your oven knew you were glancing at it, it could also know when you were talking to it. Glancing could work much as it does between humans, signaling that someone suddenly has your attention.
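The four movements (approach, pass, turn, and glance) can be caricatured as a handful of rules over successive sensor readings. The Python sketch below is a hypothetical illustration only: ATAP says it trained its system rather than hand-coding rules, and it has not published how that system works, so the `Frame` fields, the `classify` function, and the 4-foot `NEAR_FT` threshold are all invented for this example.

```python
from dataclasses import dataclass
from typing import Optional

NEAR_FT = 4.0  # hypothetical "close to the device" threshold, in feet


@dataclass
class Frame:
    """One hypothetical snapshot of a person as seen by the device."""
    distance_ft: float  # range from the device
    body_facing: bool   # torso oriented toward the device
    head_facing: bool   # head turned toward the device
    moving: bool        # person is walking rather than standing still


def classify(prev: Frame, curr: Frame) -> Optional[str]:
    """Label the change between two frames as one of the four movements,
    or return None if nothing notable happened."""
    near = curr.distance_ft <= NEAR_FT
    closing = curr.distance_ft < prev.distance_ft

    # Glance: the head turns toward the device while the body stays away.
    if curr.head_facing and not curr.body_facing:
        return "glance"
    # Approach: getting closer while facing the device.
    if closing and curr.body_facing:
        return "approach"
    # Turn: someone close by who was facing the device turns away.
    if near and prev.body_facing and not curr.body_facing:
        return "turn"
    # Pass: walking close by without facing or looking at the device.
    if near and curr.moving and not curr.body_facing and not curr.head_facing:
        return "pass"
    return None
```

In the cooking example, a video player built on rules like these could pause on “turn,” while a thermostat could simply ignore “pass.”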

Breaking down Google ATAP’s approach to the future of computing, I find my own feelings split. On one hand, I’ve interviewed many of the researchers from Xerox PARC and related technology labs who championed ubiquitous computing in the late ’80s. Back then, integrating technology more naturally into our environments was a humanist path forward for a world that had already gotten sucked into tiny screens and command lines. And I still want to see that future play out. On the other hand, Google and its ad-tracking products are largely responsible for our modern, always-on, capitalist surveillance state. Any quieter, more integrated era of computing would just embed that state deeper into our lives. Google, like Apple, is placing more restrictions on tracking. But 82% of Google’s revenue came from ads in 2021, and to expect that to change overnight would be naive.

As for when we might see these features in devices that Google actually ships, ATAP is making no promises. But I wouldn’t dismiss the work as a purely academic exercise. Under VP of design Ivy Ross, Google’s hardware division has sewn hard technology into our plush, domestic environments for years. We’ve seen Google build charming smart speakers covered in cloth, and measure how different living rooms affect a person’s stress levels. These aren’t disparate products, but part of a more unified vision we’re only beginning to glimpse: While most technology companies are gunning to claim the Metaverse, Google seems content to conquer our Universe.

Fast Company
