Google’s AI fears are adorably mundane, for now

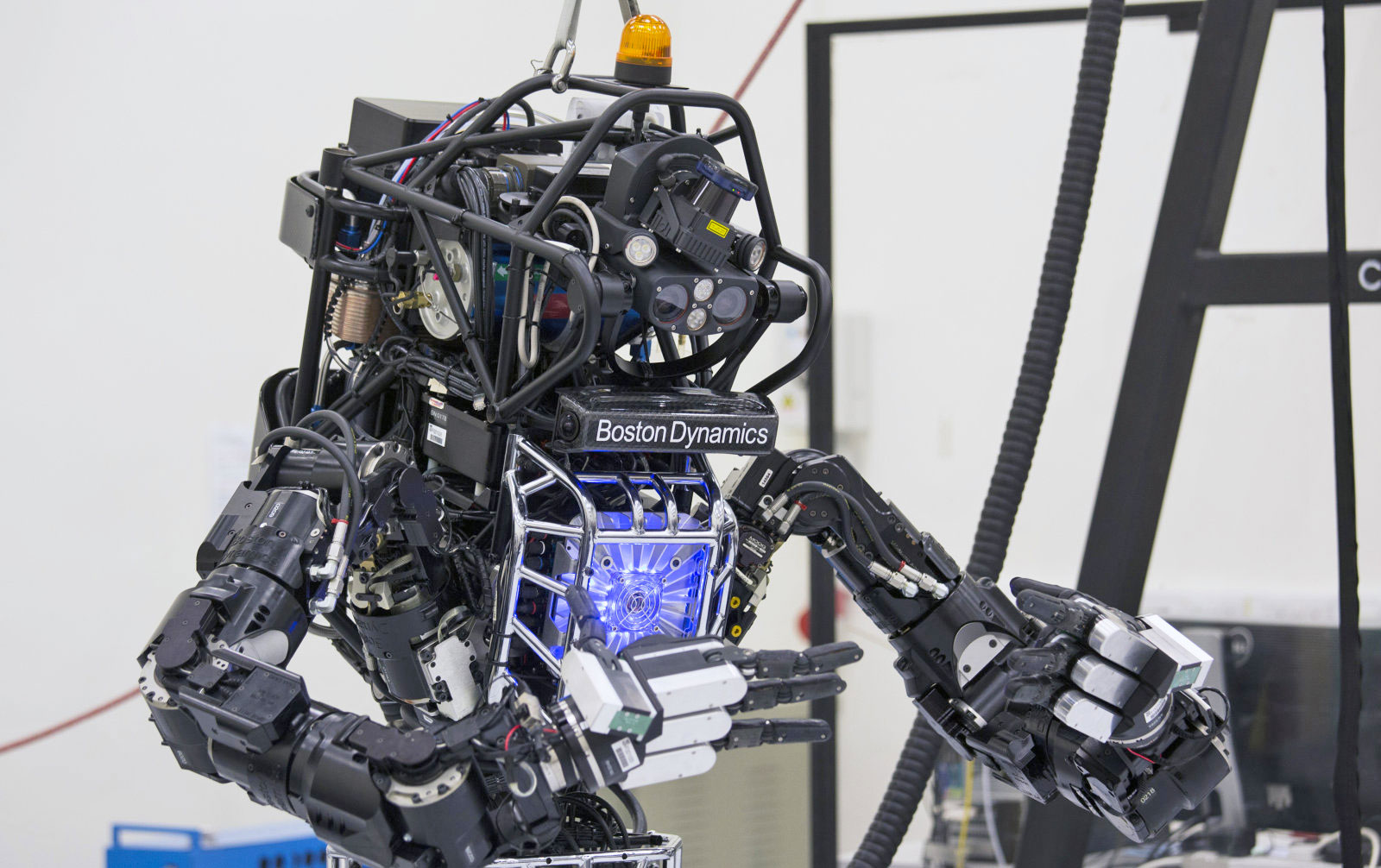

If Elon Musk, Stephen Hawking and other ultra-IQ folks fear AI, shouldn’t we? Even Google — which produces terrifying robots (for now) — sees the downsides, so its researchers have produced a paper called “Concrete Problems in AI Safety.” Put your underground bunker plans away, though, as it’s aimed at practical issues like adaptability, cheating and safe exploration. “Maybe a cleaning robot should experiment with mopping strategies, but clearly it shouldn’t try putting a wet mop in an electrical outlet,” the researchers note.

Robots will learn the same way other AI algorithms do, through iteration and exploration. Unlike pure software, however, a robot that’s trying new things can actually kill someone. So Google’s researchers came up with a list of five problems:

- Avoiding Negative Side Effects: How can we ensure that an AI system will not disturb its environment in negative ways while pursuing its goals, e.g. a cleaning robot knocking over a vase because it can clean faster by doing so?

- Avoiding Reward Hacking: How can we avoid gaming of the reward function? For example, we don’t want this cleaning robot simply covering over messes with materials it can’t see through.

- Scalable Oversight: How can we efficiently ensure that a given AI system respects aspects of the objective that are too expensive to be frequently evaluated during training? For example, if an AI system gets human feedback as it performs a task, it needs to use that feedback efficiently because asking too often would be annoying.

- Safe Exploration: How do we ensure that an AI system doesn’t make exploratory moves with very negative repercussions? For example, maybe a cleaning robot should experiment with mopping strategies, but clearly it shouldn’t try putting a wet mop in an electrical outlet.

- Robustness to Distributional Shift: How do we ensure that an AI system recognizes, and behaves robustly, when it’s in an environment very different from its training environment? For example, heuristics learned for a factory workfloor may not be safe enough for an office.

To prevent negative side effects, researchers need to penalize unwanted changes to the environment, while still allowing a robot some leeway to explore and learn. For instance, if a bot is focused just on cleaning, it may “engage in major disruptions of the broader environment [like breaking a wall] if doing so provides even a tiny advantage for the task at hand.”
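One simple way to picture that trade-off is a reward that subtracts a penalty for every part of the environment the robot changes besides the thing it was asked to fix. The toy sketch below is an illustration under stated assumptions, not the paper's method: the dict-based room state, the `impact_penalty` and `shaped_reward` helpers, and the weight `lam` are all hypothetical.

```python
from typing import Dict

# Hypothetical toy state: maps each object in the room to a simple status string.
State = Dict[str, str]

def impact_penalty(before: State, after: State) -> int:
    """Count objects whose status changed, ignoring the mess the robot is meant to clean."""
    return sum(
        1
        for obj in before
        if obj != "mess" and before[obj] != after.get(obj)
    )

def shaped_reward(task_reward: float, before: State, after: State, lam: float = 1.0) -> float:
    """Task reward minus a weighted side-effect penalty (lam is an assumed weight)."""
    return task_reward - lam * impact_penalty(before, after)

start = {"mess": "dirty", "vase": "intact", "wall": "intact"}
careful = {"mess": "clean", "vase": "intact", "wall": "intact"}
reckless = {"mess": "clean", "vase": "broken", "wall": "intact"}

# The reckless route earns a bit more task reward (it's faster) but breaks the vase.
print(shaped_reward(1.0, start, careful))    # 1.0
print(shaped_reward(1.25, start, reckless))  # 0.25
```

With the penalty in place, the careful route comes out ahead even though knocking over the vase made the cleaning itself slightly faster. Choosing `lam` is the hard part: too small and the vase is fair game, too large and the robot won't move anything at all.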

To tackle these problems, the paper proposes approaches like simulated and constrained exploration, human oversight, and goals that heavily weigh risk. While the solutions seem like common sense, programming an AI to deal with the unknown is far from trivial. Going back to the cleaning bot, the researchers say that “an office might contain pets that the robot, never having seen before, attempts to wash with soap, leading to predictably bad results.”
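Constrained exploration can be pictured as letting the robot try random actions only from a list of moves a human has vetted. The sketch below is a hypothetical illustration, not the paper's algorithm: the action names, the `FORBIDDEN` set, and the epsilon-greedy scheme are assumptions chosen for simplicity.

```python
import random
from typing import Dict, List

# Hypothetical action set for a cleaning robot.
ACTIONS: List[str] = ["mop_floor", "sweep", "vacuum", "mop_outlet"]

# Actions a human has flagged as unsafe; the robot never selects these.
FORBIDDEN = {"mop_outlet"}

def choose_action(q_values: Dict[str, float], epsilon: float, rng: random.Random) -> str:
    """Epsilon-greedy action selection restricted to the safe actions."""
    safe = [a for a in ACTIONS if a not in FORBIDDEN]
    if rng.random() < epsilon:
        # Explore: try a random action, but only among the safe ones.
        return rng.choice(safe)
    # Exploit: pick the safe action with the highest estimated value.
    return max(safe, key=lambda a: q_values.get(a, 0.0))

rng = random.Random(0)
# The unsafe action has the highest estimated value, so an unconstrained greedy agent would pick it.
q = {"mop_floor": 0.4, "sweep": 0.2, "vacuum": 0.1, "mop_outlet": 0.9}
picks = [choose_action(q, epsilon=0.5, rng=rng) for _ in range(1000)]
print(sorted(set(picks)))  # "mop_outlet" never appears
```

The catch is the one the researchers describe: a fixed blacklist only covers dangers someone anticipated, and the pet the robot has never seen before won't be on it.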

The researchers’ main worry is not a robot revolution, but real-world problems caused by sloppy design. “With [AI] systems controlling industrial processes, health-related systems, and other mission-critical technology, small-scale accidents … could cause a justified loss of trust in automated systems,” they say. We think Musk and Hawking would agree.
