Google’s secretive ATAP lab is imagining the future of smart devices

In 2015, Dan Kaufman, the director of the Information Innovation Office at the U.S. Department of Defense’s fabled DARPA lab, began talking to Google about joining the company in some capacity. Maybe he could work on Android. Or take a job at X, the Alphabet moonshot factory formerly known as Google X. And then another possibility came up: ATAP (Advanced Technology and Projects), a Google research skunkworks that was “just like DARPA, but in Silicon Valley,” as he describes it. His reaction: “That sounds awesome!”

At the time, ATAP was even led by Regina Dugan, Kaufman’s former boss at DARPA. But not long after he arrived as Dugan’s deputy, she abruptly left to start a similar group at Facebook.

“She laughed and sort of threw the keys to me on the way out,” he remembers. “And I was like, ‘Holy cow, I now run this place.’”

Kaufman, whose background also includes time at DreamWorks and in venture capital, has headed ATAP ever since. If that comes as news to you, it’s understandable—he has kept a public profile so low as to border on invisibility. When we met at the organization’s Mountain View office—which we did before the COVID-19 shutdown—he told me that the last interview he granted was back in 2015, when he was still at DARPA and spoke with 60 Minutes’ Lesley Stahl.

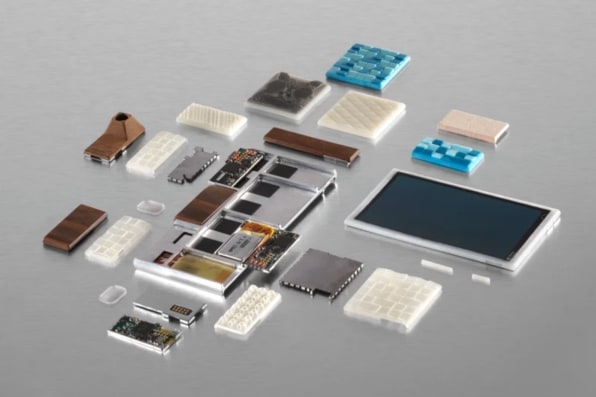

This quietude has been a shift from ATAP’s early days. Dugan was a regular on the tech-conference circuit, bending minds by waxing futuristic about ideas like a password-authentication device you could swallow. The lab also talked freely about works in progress such as Project Ara, a wildly ambitious plan to build low-cost, Lego-like modular smartphones. (I wrote about it in 2014; Google ended up killing it in 2016.)

[Photo: courtesy of Google]

Now Kaufman is ready to open up a bit about ATAP’s current work. That includes some technologies that have already made their way to market—such as Soli, the radar-based gesture-detecting feature in the Pixel 4 smartphone—but also research investigations that might be a long way from consumerization, if they make it there at all.

Though ATAP has changed quite a bit from its early days, its ambitions remain expansive. Adopting the bass tones of movie-trailer narrator Don LaFontaine for emphasis, Kaufman recounts the pitch he gave other high muckety-mucks when discussing potential efforts: “I say, ‘Imagine a world in which this thing existed. I want you to forget all your objections. Just go on a fantasy with me. If I could build this, would you want it?’”

“If I can’t get a ‘yes,’ then it’s probably not that good of an idea,” he says. “If I get a ‘yeah, that’d be great, but how are you going to do that?’ . . . . That’s why we’re here.”

Under new management

As initially conceived, ATAP focused on mobile technology—an artifact of its origins within Motorola during the brief period when Google owned the venerable phone maker. In 2014, Google sold Motorola to Lenovo. But it held onto ATAP—not a huge shock given the group’s focus on cutting-edge research.

Another new dynamic came into play two years later, when Google formed a unified hardware group with responsibility for products such as Pixel phones and Home speakers (and, later, the Nest smart home portfolio). ATAP became part of this operation, which is headed by former Motorola executive Rick Osterloh.

[Photo: courtesy of Google]

All of this change called for a new ATAP mandate more in line with Google’s overarching game plan for hardware. “When I came here, I thought there were a lot of really, really interesting projects,” says Kaufman. “It was just cool stuff. But there wasn’t really a cohesive vision or strategy. And I think if you want to have impact at a company like Google, you need to have a bit more focus. There’s that great [Wayne] Gretzky quote—‘don’t go to where the puck is, go to where the puck is going to be.’ If I wanted to influence Google, then I had to be really, really tightly aligned with Rick Osterloh’s vision, and how he sees things.”

To Kaufman and his ATAP colleagues, it was pretty clear where the puck was going. Some, he says, call it ambient computing; others either the fourth or fifth wave of computing. Whatever the name, they’re talking about the post-PC, post-smartphone world where devices of all kinds sport sensors and at least a modicum of their own intelligence, and cloud computing ties everything together.

None of that was a revelation. Nor did it automatically provide boundaries that would guide ATAP in a unique direction. “The mission that we got excited about,” says Kaufman, was “could we make Google hardware as helpful as Google software?”

For Google software such as Google Maps, Gmail, and its eponymous search engine, the contours of helpfulness are well established. Hardware is much more of a green field. Kaufman gives an example: using a Home speaker in the kitchen to set a timer reminding you to turn down the oven. That’s useful. But if the timer announces its completion while you’re in another room, is it really any more helpful than a plain old kitchen timer? And might true helpfulness involve anticipating that you want to turn down the oven, so your cookies don’t burn? Regardless of whether ATAP is actually tackling the scourge of burnt cookies, it’s asking itself similarly open-ended questions about the areas it’s investigating.

Along with tweaking ATAP’s research areas, Kaufman has also rethought how the group goes about conducting its work. The original DARPA-inspired vision was that no research effort would last for more than a couple of years. If it died along the way, that was perfectly fine. And if it thrived, it would get spun out into a real business run by product people rather than researchers.

The relatively small number of projects that ATAP has publicized doesn’t add up to a rousing endorsement for this approach. At the end of two years, the Ara modular phone was still an experiment, not a business; it never got into the hands of the consumers in emerging markets it was designed for. And Tango, a technology for mapping real-world environments as 3D spaces, made it into shipping devices and then gave way to a different tech called ARCore.

[Photo: courtesy of Google]

Another ATAP technology, Jacquard—which turns fabric into a touch-sensitive surface so items such as jean jackets and backpacks can sport user interfaces—hasn’t been under such pressure. First publicly acknowledged at the Google I/O conference in 2015, it’s been in shipping products for a while. Most recently, in a collaboration between Google, Adidas, and EA, it’s powered a new smart insole for soccer enthusiasts that can convert real-world soccer kicks into rewards inside EA’s FIFA Mobile game.

Jacquard remains a boutique technology. But even though Google is typically most enthusiastic about ideas with the potential to become everyday necessities for massive quantities of people, Kaufman says that there’s value in getting Jacquard out there in its current form. Slide decks and prototypes can only tell you so much about how real people will react to products they’ve bought.

[Photo: courtesy of Google]

“What we do is we actually go all the way to shipping, but in low quantities,” he says. “Because when you ship a product, you can start to really mitigate risks.” One example: In an interview, a bike courier who bought the Jacquard-enabled Levi’s Commuter Trucker Jacket said that she found its turn-by-turn navigation directions to be a tad annoying. Commuters, after all, generally know where they’re going. Google reworked the product based on her input, and learned a lesson that might help Jacquard along its way to broader impact.

Shrinking radar down to size

The patience Google has shown with Jacquard isn’t a fluke. Rather than treating ATAP’s original system for rapid innovation as sacrosanct, Kaufman decided that it was an artificial construct, especially as ATAP got more serious about serving Google hardware’s overarching goals. Some ideas just need more than two years of research before they’re ripe for commercialization. “If you want to have real impact and build something really meaningful at a scale that Google’s going to do, four or five years is not surprising,” he explains.

This less-rushed approach is also visible in Soli, a technology which debuted last October in Google’s Pixel 4 smartphone. First previewed in 2015—and then only after it was well into development—Soli powers a Pixel feature called Motion Sense that the phone uses for several purposes. It allows you to dismiss phone calls by waving your hand or control music with a contactless swipe, for instance. Motion Sense can also detect if someone is near the phone or reaching out to grab it. That way, it can shut off the Pixel’s “always-on” display if nobody’s there to see it, and prepare to unlock via face recognition even while you’re still lifting the phone.

Soli can also control games. And Google is encouraging third-party developers to come up with new applications for it.

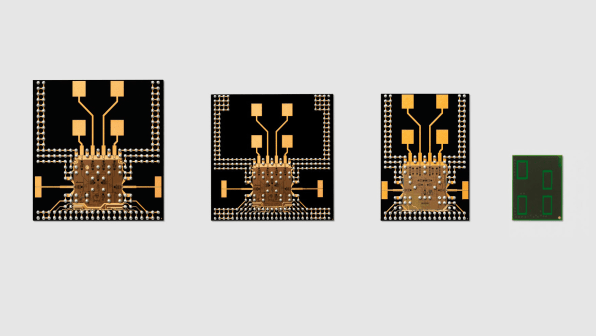

[Photo: courtesy of Google]

From Samsung’s “Air View” feature to the Leap Motion controller, various approaches to interacting with a screen without touching it have been around for years. But ATAP director of engineering Ivan Poupyrev and head of design Leo Giusti—who spearhead Jacquard as well as Soli—took a fresh look at the challenge of interpreting such gestures. The goal was to create something that was intuitive, power-efficient, capable of working in the dark, and (by not using a camera) privacy-minded. The enabling technology they settled on: radar.

If Google had required Soli to prove its worth in two years, it might never have emerged from the lab. It was “a little insane to say we are going to put radar—60 gigahertz radar!—onto a chip,” Kaufman says. And even if ATAP did manage the feat, that was a long way from being able to suss out specific gestures being made by a human being.

“We spent the first years exploring this new material that is the electromagnetic spectrum,” says Giusti. “We really didn’t think about use cases at the very beginning. So it’s kind of different from a normal product development cycle. This was truly research and development.”

Once the Soli team was ready to consider specific real-world scenarios, it envisioned using Soli in smartwatches, where screens are dinky and traditional touch input can be tough to pull off. “We just needed a target,” says Poupyrev. “And we focused a lot on small gestures and fine finger motions, just to [be] super aggressive.” Eventually, the researchers began experimenting with the technology’s possibilities in smartphones—though at first, the requisite technology ate up so much internal space that an early prototype had no room for a camera.

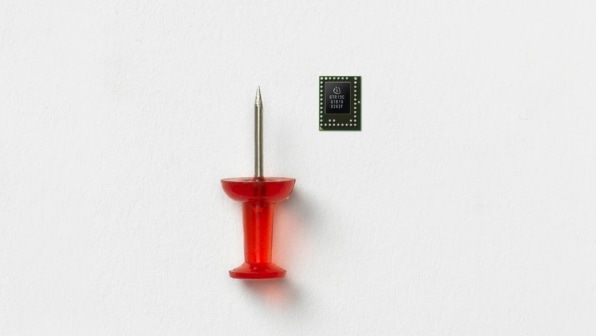

After multiple iterations, ATAP had a Soli chip that looks tiny even when sitting next to a pushpin. That’s what went into the Pixel 4 to enable its Motion Sense features.

[Photo: courtesy of Google]

As part of the Pixel 4, Soli has received mixed reviews, ranging from “Soli is great for the Pixel 4” to “In a smartphone . . . Soli makes zero sense.” With Google reportedly disappointed by the phone’s sales, Soli hasn’t become the first ATAP invention to triumphantly serve as a major selling point for a hit product. Still, being part of a Google flagship phone was a far bigger vote of confidence than if it had arrived in something more inherently niche-y such as Lenovo’s Phab 2 Pro, the first phone to incorporate ATAP’s Tango space-mapping technology.

If Soli shows up in future Google devices in a more refined form, we’ll know that Google is serious about giving ATAP’s innovations every chance to succeed.

A pen, not a pen-shaped computer

ATAP technical project lead Alex Kauffmann (no relation to Dan Kaufman) has been at Google for almost a decade. Back in 2014, he was one of the creators of Cardboard, which uses a smartphone as the brains of a dirt-cheap VR headset. Now at ATAP, he’s still thinking about how to build devices that are useful without being overcomplicated pieces of technology. The name for this category within ATAP: direct objects.

Kauffmann says that many companies that aspire to rethink existing products do so by essentially turning them into special-purpose computers. ATAP’s more Google-esque approach involves nudging more of the intelligence into the cloud, so familiar items can preserve more of their familiarity.

“I don’t want like, a computer shaped like a pen, or a computer shaped like a car,” he declares. “I just want a car, and I want the car to be smart. It’s like, can you take a regular everyday object and give it access to smarts? Because I don’t think you have to put the smarts in it.”

The reference to pens is not just by way of example. When I visit with Kauffmann, he’s at work on one—and he definitely doesn’t want anyone to mistake it for a computer. “I’m not competing with some sort of $200 Parker fancy pen,” he says, brandishing a Paper Mate ballpoint from his desk. “I’m competing with this.”

Smart pens are a venerable category of gadget, with the most prominent example being those long offered by Livescribe. But Livescribe’s intelligence is dependent on you using special paper with tiny dots printed on it, which lets the pen track your motions as you write and thereby turn your scrawls into digital ink.

Though Livescribe attracted a cult following, the special paper always felt like an obstacle in the way of mass acceptance. Then again, a smart pen will only be interesting to the masses if it performs useful tasks that a 40¢ Paper Mate can’t.

In the form I saw, Kauffmann’s prototype—which hasn’t yet undergone a miniaturization effort—is a bulbous contraption that looks more like a Popsicle than a pen. But it can already digitize writing without the use of special paper or other technological workarounds. And it works well enough for Kauffmann to explore its possibilities as a new interface for existing Google technologies. You can circle words to convert them into editable text, using the company’s handwriting-recognition engine. Or do math problems and have Google supply the answer. Or write in one language for translation into another.

Then there’s the AI-infused software toy called Quick, Draw! Unveiled by Google in 2016, it asks you to doodle common items, such as a baseball or an ambulance. Its algorithm then guesses what you’ve drawn, based on crowdsourced data from all the other doodlers who have tried the service. Having plugged his pen into this service, Kauffmann invites me to try it out.

When I sketch a cat, the Quick, Draw! algorithm thinks it’s a kangaroo—possibly because it’s whiskerless and standing up. The software’s confusion is not that big a deal. At this point, Kauffmann’s pen is as much about starting conversations as anything else.

He explains his approach to early prototypes: “I show it in a really rough stage, and if people sort of lean in and they get excited about a particular thing, that’s the thing I’ll dig into. And then I’ll try to understand the insight behind that? Is there a thing? Is it the translation? Is it the transcription? What part of this interaction is the part that’s really juicy? And I steer the research that way.”

The ‘Hey, how do I get it to you?’ dance

Another one of Alex Kauffmann’s works in progress involves quick transfers of information—from one device, such as a smartphone, to another. He demos it to me by choosing a map location on a test phone, hovering that phone over another one I’m holding, and then “dropping” the location onto my device. The goal, he says, is to eliminate the “‘Hey, how do I get it to you?’ dance” that people often go through when trying to share stuff.

That aspiration isn’t new or unique to ATAP. Actually, it goes back at least as far as the 1990s, when Palm Pilot owners would “beam” contact info to each other via infrared ports. Today, Bluetooth-based features such as Apple’s AirDrop serve a similar purpose. Kauffmann’s take on the idea aims to be simpler and more reliable; the only enabling features it requires are a speaker, a microphone, and an internet connection. It doesn’t require devices to be paired or logged in to any particular account, and is designed to work on iPhones as well as Pixels and other Android phones.

The technology that makes it work has taken a circuitous route through Google. Back when Bluetooth Low Energy was new, some engineers tried to use the technology to design a digital tape measure. When they found Bluetooth lacking for the purpose, one of them switched to using sound waves to measure distances. The noise his electronics generated annoyed nearby coworkers, which led to the use of silent, ultrasonic tech. And even though the tape-measure project didn’t go anywhere, it eventually morphed into the idea of using ultrasonic audio to transfer data from one device to another.

If anyone starts comparing what Kauffmann is working on to Bluetooth—or even thinking about it as technology—it will have failed to reach the level of ease he’s shooting for. “With the proliferation of lots of devices, you have this baffling panoply of protocols and other things that normally you wouldn’t expect the end users to know anything about, like the fact that everybody knows what Bluetooth is,” he says. “It’s kind of amazing, because you don’t know the name of the protocol that runs your lights.”

When might Kauffmann’s technology be available to consumers, and in what form? He tells me that he isn’t sure, and that it depends in part on his success selling it to other stakeholders within Google. “I’m in the unenviable position of having developed the tech, and now I have to convince every single part of Google that it makes sense to integrate it,” he says. “And to do it in a way that doesn’t require a login, and that doesn’t use personal information, and that actually maintains the vision.”

In a way, the fact that Kauffmann must make such a pitch—even in the new era of a more deeply embedded ATAP—is reassuring. Better that brainstorms be forced to run a gauntlet of internal skepticism than be shoved out the door before they’re ready.

A mouse for your house

ATAP chief Kaufman is waxing nostalgic about the early reaction to the mouse as a PC input device. “I still remember having this conversation: Everybody who was a computer person thought [mice] were stupid,” he says. But the mouse didn’t cater to those who were already efficiently accomplishing tasks purely through jabbing at a keyboard. Instead, it unlocked the power of computing for the vastly larger number of people who didn’t think like programmers.

Today, for all the success in recent years of gadgetry such as Amazon’s Echo speakers, Sonos sound systems, and Google’s own Nest products, making the elements of a smart home work together can still feel like programming. Which leads to a project jokingly referred to inside ATAP as the “house mouse.”

Besides owing a spiritual debt to Doug Engelbart’s rodent-like pointing device, the house mouse is a radical rethinking of the remote control—a gizmo that, in its conventional form, has barely matured since the days when we didn’t use one for much other than switching on a TV, surfing through channels, and adjusting the volume. Spearheading the project is technical project lead Rick Marks, who joined ATAP in 2018 after 19 years as part of Sony’s PlayStation team, where his handiwork included forward-thinking efforts such as the PlayStation virtual-reality platform.

“When you first got a VCR back in the day, you could program it, but nobody did,” he says. “It’s the same thing with smart stuff. A lot of things you can do right now, but it’s just a little bit of a pain.” His research is devoted to new experiences that might help consumers take better advantage of the devices they own.

Google, Amazon, and other companies have already invested overwhelming intellectual capital in letting people interact with smart home devices through voice control. “Voice is really great for a lot of things,” says Marks. “But it’s missing the kind of key things that a mouse brought to computers—spatial input and easy selection and things like that. So I’ve been working on trying to bring that kind of thing into the ambient computing world.” He mentions another source of inspiration: Star Trek’s tricorder.

With MacGyver-like aplomb, Marks started by cobbling together a working version of his futuristic remote using off-the-shelf parts. The gizmo’s brains are provided by a Palm—the undersize, Android-powered phone designed to help people wean themselves off a giant, addictive smartphone. Marks lashed the Palm to a hand controller from a Vive VR system, whose sensors give the remote its ability to understand where you’re pointing it. “This is just a duct-tape version of the thing we’d like to eventually see,” he explains. (Indeed, since he showed it to me, he’s created a version using ATAP-built technology.)

Using items set up around his desk, Marks shows off his concept. He points his remote at a lamp to turn it on. Then he performs more complex maneuvers, including dragging the Star Wars theme from YouTube on his computer to the speaker where he’d like to hear it, and then to the lamp, which begins pulsating through different colors in a rhythm determined by the music.

Google’s Lens image-recognition technology is also part of Marks’s toolkit, powering a feature that lets you point the remote’s camera at a CD and then virtually drag its contents to a speaker for listening. (“It only works with Mötley Crüe right now,” he apologizes.)

When I ask about the use of Lens, Dan Kaufman—who has been hovering and observing Marks’s demo—points out the power of being able to piggyback on the technology rather than attempt to build something similar within ATAP. The Lens engineers “are my friends,” he says. “They’re 10 minutes away, and they’ve got a ton of people. Same thing with the [Google] Assistant.”

Which brings up a question: How many Googlers are in the employ of ATAP itself? The company declines to provide a figure, saying that it has a policy against disclosing team sizes. But it doesn’t appear to be an enormous number, at least in comparison to the overall headcount in Google’s hardware group. (In 2018, when the company acquired most of HTC’s smartphone operations, it added 2,000 engineers just in that one transaction.)

For what it’s worth, Kaufman argues that the headcount that matters most isn’t ATAP’s own. “There’s that quote everyone uses—I always butcher it,” he says. “‘If you want to travel fast, travel alone. If you want to travel far, travel together.’ I don’t care how many smart engineers we hire. If I can tap into 100,000 Googlers, that just feels like a really good idea.” He adds that one of his most critical responsibilities is exploring all the opportunities for collaboration—not just with other parts of Google but also far-flung Alphabet siblings such as Verily and Waymo.

More than seven years after ATAP began teasing its first research projects, it’s hardly a startup within Google. But even if its history to date is short on world-changing breakthroughs, its brainpower remains formidable. Its increasing integration with other parts of the company makes sense. And if it succeeds at imbuing Google hardware with the kind of smarts the company’s software has always had, Google’s patience with the whole idea will look . . . well, smart.
