How OpenAI designed DALL-E 3 to bring AI image generation to the masses

ChatGPT may be the easiest-to-use AI ever built. It’s a computer you can just talk to in order to write stories, do research, or even produce code. And so, as OpenAI readies the next release of its AI image generator, DALL-E 3, it should come as no surprise: It’s building DALL-E 3 right into ChatGPT.

Starting today, anyone using ChatGPT Plus or Enterprise will be able to generate images with DALL-E 3 right in the flow of natural conversation in the ChatGPT app or website. That’s significant. Previous versions of DALL-E required complicated “prompt” engineering to get what you wanted, leading people to copy and paste strings of keywords and coded language in hopes of stylizing the richest and most detailed images the system could produce.

But now, ChatGPT will serve as the go-between interpreter, translating your words for DALL-E to follow. And so I found that even the simplest image requests, like “kid party,” will generate high-quality, high-resolution imagery. At the same time, the DALL-E model itself has been trained to follow much longer queries, so it can juggle more of your instructions at once.

“I think what we hope to do . . . is just enable a much wider part of the population to make use of AI image generation,” says Aditya Ramesh, the creator and head of DALL-E at OpenAI. “Now, a casual user who just wants to generate images to fit into a PowerPoint . . . can get what they’re looking for.”

This improved, conversational accessibility is just one part of OpenAI’s strategy for DALL-E 3, which was first released on Bing earlier this month. Under the hood, the engineering team has been improving DALL-E’s core competency, too. The combination of better images and conversational prompting is powerful. To simply chat your way to photorealistic images or silly comics feels eerily natural.

Better interface, better models

Natural language has improved the feel and accessibility of DALL-E immensely. It feels like you’re in more control of the system’s autopilot imagination.

New interface tricks include the option to set up a guiding request inside ChatGPT—“let’s make ’90s anime images”—and it will automatically apply that filter to vehicles, fashion, and anything else you request across your conversation without the need to repeat yourself. Then, when the system offers four images to choose from, you can edit them with just your words, for example, “Add bubbles to the first image.” (Warning: that image may be reimagined a bit more than you’d hope, but those bubbles will appear.)

This UX combines with DALL-E’s under-the-hood improvements to make the entire product feel more satisfying and worthwhile.

One of the largest upgrades in DALL-E 3 is simply its ability to create images that look and seem more logical. And that logic often comes down to relatively minute details. DALL-E could create a convincing sofa, but it famously struggled to arrange furniture naturally in a room. It could make lifelike humans, but it struggled to put the right number of fingers on a hand.

These issues are largely solved, according to the team. And the improvements to these finer details—offered at a higher resolution than before—scale all the way down to text itself.

While DALL-E used to draw words as gibberish, now its lettering can look strikingly professional (and the system can sometimes even generate a clean logo)—though I found in many cases, it would still add letters, misspell words, or misform the occasional glyph.

“We didn’t train it specifically to be able to write text in images, or anything like that,” notes Ramesh. “It sort of came about as a result of us just improving language understanding properly.”

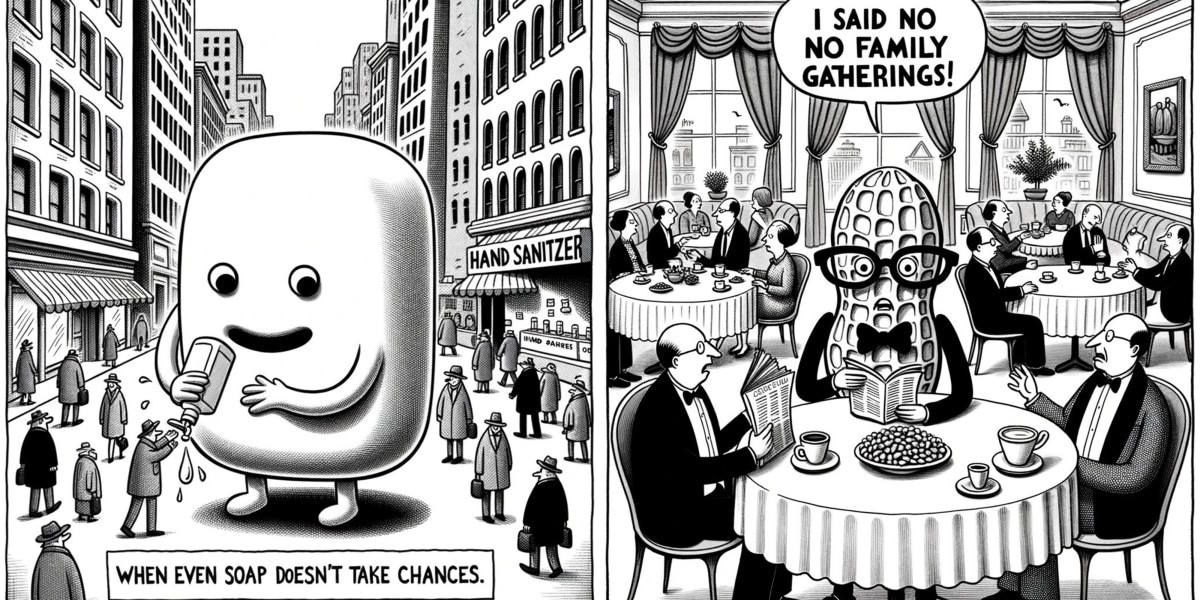

Now that it can (often) render words, DALL-E 3 can create whole comics—and ChatGPT and DALL-E will tag team the work automatically to build them. ChatGPT writes the dialogue (or you can just tell the system what you want written in the frame), while DALL-E draws the art and words on the screen. But that process is invisible to the user, who only needs to describe what they want to see next without any technical understanding of how it’s appearing on the screen.

I had a lot of fun last night creating comics in various styles, and I was impressed by DALL-E’s ability to add art and caption everything ranging from 1950s pulp to pseudo-New Yorker cartoons to my spec. Overall, the function bats about .300 at best, but it’s a good example of why .300 is enough to get you into the Hall of Fame.

Another lingering issue that the OpenAI team believes it’s solved is called variable binding—and it’s one more reason that DALL-E will create images far closer to your descriptions than it used to. Basically, if you ask most image generators to create “a man with blue hair and a woman with green hair,” they are likely to mix up those hair colors because they don’t actually follow the syntax of your request. And that sort of problem only becomes more apparent as image requests become more complicated and nuanced with several elements in a scene.

Now, DALL-E has been trained to handle much longer requests, which the team says has taught the model how to follow specificity—so much so that when I toss the challenge “a man in a green velvet suit standing on a skateboard and a woman in a polka dot jumper wearing a mardi gras mask and roller skates,” it absolutely excels at producing four images, ranging from photography to illustration, depicting all these details, visually gelled and properly placed. I was even able to say “change the woman into overalls” to edit the collection, too.

“Getting the model to understand the exact state of a sentence was hard, and that’s what DALL-E 3 does very well,” says Gabriel Goh, machine learning researcher at OpenAI.

As a result, I found myself binging on DALL-E more than I have any image generator over the last year. The sense of control has never been finer, and OpenAI seems to be crossing that gap from amazing magic trick to a satisfying creative tool.

But at the same time, when you can communicate more clearly with the machine, the things it doesn’t really want you doing seem more clear. For instance, when I asked DALL-E to render “a friendly photo of a man baking a pie,” its results were definitely a step more illustrated and soft feeling than the hyper-realistic, completely convincing faces that the best prompt engineers can nudge out of the system. Even adding words like “photorealistic” didn’t appear to help.

But try the same prompt in DALL-E 3 running on Bing, and the people look far more realistic, with believable lighting, closer skin textures, and even tangible flour dust on their hands.

DALL-E seems to be purposefully holding back its talents for reasons it never discloses in the interface. There’s no feedback telling you that you’re getting a subpar human figure. Yet, even OpenAI admits that they are heading toward a future of creative limiters. Any given query must filter through checks at the ChatGPT level, and then additional checks within DALL-E itself. The system is making decisions on your behalf that you never see.

Safety precautions

OpenAI is aware of pushback from both creators and regulators, and our conversation didn’t end before the team broke down some of the protections it was building into DALL-E 3.

No longer can you create images in the style of a living artist, they say, or images of public figures. The system will reject the request. (There also appear to be restrictions on some brands. I couldn’t make a custom Barbie, either.)

Yet other policy-related updates are just about improving results. Algorithmic bias is being reduced, the team promises, pointing out that the system’s propensity for generating white women in their twenties and thirties should be lessened now. Aside from that, the team has also cut down on random, unwelcome surprises.

“The model shouldn’t ever do anything unintended,” says OpenAI policy researcher Sandhini Agarwal. “If you are a user who says, ‘give me a picture of three people in the room,’ it shouldn’t become an overly racy image.”

But I was most curious about whether DALL-E would enact general censorship around more pearl-clutching content, like a recent debate over an image created by a user asking DALL-E via Bing to depict SpongeBob crashing into the World Trade Center. While in my opinion, you can’t blame the pencil in your hand for drawing the dicks all over your own notebook, others claimed to be very upset that software could generate such imagery at all. And in the weeks since, Microsoft locked down DALL-E on Bing significantly. For instance, if you literally try to generate “a wholesome photo” in Bing right now, you will get a content warning “this prompt has been blocked.”

When I broach the topic with the OpenAI team, Agarwal says that, on its own, on your laptop, images like SpongeBob probably won’t cause any harm. But at the same time, “distribution matters a lot,” she admits. Naturally, the internet is a distribution machine with no limit.

OpenAI has enlisted red-teamers to try to weaponize DALL-E, and has published a research paper on how it has mitigated issues, such as overly sexualized content. What gets messier is simply, who makes the decisions about what DALL-E will show you, and why? Specifically, for images like SpongeBob-as-terrorist, OpenAI simply plans to self-censor. To them, the decision seems influenced less by free speech or ethics than by how they are framing their product: DALL-E not as a generator of unbridled creativity, but as a tool in your corporate workflow. You may disagree with that policy, but it is the company’s working point of view.

“There’s often little use that comes from images like that . . . there’s not often high demand for content like that,” says Agarwal.

In many ways, OpenAI is proving that it can address many of the technical barriers of its product, and coupled with a more natural language interface, DALL-E 3 can be a joy to use. Especially when you consider that DALL-E 3 is also being incorporated into Bing, it’s clear that image generation has gone from niche coder stuff to mainstream entertainment at a blinding pace.

What will define companies in this space years from now will most likely be less about technical achievement, and more about usability and control. Which is why OpenAI and its competitors’ most important decisions might not be how well they train their AI, but how freely they allow the public to use their product. Because as AI companions seem poised to take over, it’s worth remembering that no one wants a friend who constantly self-censors.
