New Report: It’s More Important To Identify Humans Than Bots

The first report from Detroit-based Are You a Human, Inc. suggests we give the equivalent of “passports” to verified humans.

With software robots (bots) settling into what appears to be a permanent and major part of website traffic, a Detroit-based company is out with a report that takes a different approach.

Instead of focusing on identifying and avoiding bots, asks the “State of the Human Web Report, 2015,” why not focus on verifying which visitors are human?

That’s the mission of Are You A Human (AYAH), Inc., a company that tracks visitors on over three million websites in nearly 200 countries and determines which ones are clearly exhibiting human behavior. Its new report quantifies the results.

It’s like a country setting up border security, CEO Ben Trenda told me. It “starts by issuing passports” so its citizens can pass through, and focuses less on identifying everyone who can’t.

Besides, he said, bots are rapidly changing targets. The average lifespan of a bot is four to six days, after which its makers issue a new one.

With a Verified Human whitelist, websites might need fewer annoying screening procedures, which could mean eliminating captchas, extra security questions or other filters.

Not All Bad Guys

This orientation toward humans certainly looks like a positive approach, in light of the breakdown in the AYAH report.

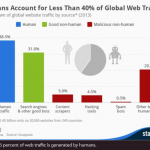

Only 42 percent of 3.2 billion impressions from more than 600 million devices could be verified as coming from our species.

The remaining 58 percent could not be verified. They might all be bots, although at least some may be humans who weren’t behaving as recognizably human as they might have.

And those that are bots might not all be bad guys.

Cofounder and COO Reid Tatoris noted that some of those bots are Google spiders helping to populate its search engine, retailers’ pricing bots checking competitors’ price tags, or other benign software agents. While Google’s bot identifies itself to websites, not all good bots do.

Malicious bots, of course, include those carrying out attacks, posting fake reviews, fraudulently loading pages to generate ad impressions, automating content piracy and engaging in other nefarious activities.

Tatoris said his company doesn’t break down the non-human total because its mission is to tell websites which visitors are decaying organic entities, that is, humans.

But of the bots it did identify in that 58 percent, the split is nearly even: about 48 percent were considered helpful to human experiences and about 52 percent were considered harmful.

The report also points out that this 42-58 percent Verified Human-to-other split isn’t consistent across websites. Only about 30 percent of traffic on dating sites, for example, is certifiably human, a statistic that many singles looking for partners on those sites may not find surprising.

Education sites have a relatively high level of Verified Human Activity (VHA), 64 percent, while job search sites have a very low VHA level of 6.2 percent. Dot coms have an average VHA of 53 percent, .gov sites show a whopping 71 percent and .info sites show only 3 percent.

Blacklists Don’t Help Much

Traffic also varies by U.S. state. Oregon and Virginia have unusually low levels of verified impressions, with only 23.8 percent of Oregon’s pegged as definitely human and a mere 28.6 percent of Virginia’s. Most other states have verified-human levels in the 40 to 54 percent range.

Not coincidentally, the report notes, Oregon and Virginia are home to Amazon Web Services’ hosting facilities. That major cloud platform, it points out, is used by companies to host software “applications for malicious purposes.”

The report also shoots down the idea that bot blacklists help much. For example, the Interactive Advertising Bureau (IAB) maintains a list of known spiders and bots, but only about 0.14 percent of the non-verified impressions were on that list.
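
To put the report’s percentages in absolute terms, here is a quick back-of-the-envelope calculation. It treats the rounded figures quoted above (3.2 billion impressions, 42 percent verified, 0.14 percent of the remainder on the IAB list) as exact, so the results are approximations derived from the article, not numbers published in the report.

```python
# Rounded figures quoted in the article, treated as exact for illustration.
TOTAL_IMPRESSIONS = 3_200_000_000
VERIFIED_SHARE = 0.42    # share of impressions verified as human
IAB_LIST_SHARE = 0.0014  # 0.14% of non-verified impressions found on the IAB list

verified = round(TOTAL_IMPRESSIONS * VERIFIED_SHARE)
unverified = TOTAL_IMPRESSIONS - verified
on_iab_list = round(unverified * IAB_LIST_SHARE)

print(f"verified human:  {verified:,}")     # 1,344,000,000
print(f"not verified:    {unverified:,}")   # 1,856,000,000
print(f"on IAB bot list: {on_iab_list:,}")  # 2,598,400
```

In other words, a known-bot list of that kind would have matched only about 2.6 million of roughly 1.86 billion unverified impressions.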

AYAH detects humans through a variety of methods, which include device and OS types, patterns of mouse movements and clicks, and non-duplicating behavior across websites.

Visitors are tracked between websites via cookies or device fingerprinting. Tatoris noted that a good portion of bots mimic some kinds of human behavior, such as one bot that follows a hexagonal pattern as it browses and clicks.

That is intended to suggest human-like random movement, he said, but when the same visitor shows identical hexagonal patterns across multiple websites, its cover is blown.
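
The article doesn’t say how AYAH’s detection actually works, but the cross-site idea can be illustrated. The following is a hypothetical Python sketch, not AYAH’s method: it reduces each mouse path to a sequence of quantized step directions (eight compass bins, an arbitrary choice), so a hexagon traced at any size or screen position yields the same signature, and it flags any visitor whose identical signature turns up on a minimum number of distinct sites. The function names, the `min_sites` threshold and the `(visitor_id, site, points)` input format are all assumptions made for illustration.

```python
import math
from collections import defaultdict


def direction_signature(points, bins=8):
    """Reduce a mouse path to a tuple of quantized step directions.

    Each step between consecutive points is mapped to one of `bins` compass
    directions, discarding scale and screen position: the same shape traced
    larger or elsewhere on the page gives the same signature.
    """
    steps = []
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        angle = math.atan2(y1 - y0, x1 - x0)  # radians in (-pi, pi]
        steps.append(round(angle / (2 * math.pi / bins)) % bins)
    # Collapse runs of the same direction so the number of samples along
    # a straight edge does not change the signature.
    return tuple(d for i, d in enumerate(steps) if i == 0 or d != steps[i - 1])


def flag_repeated_patterns(traces, min_sites=3):
    """Return visitor IDs whose identical path signature appears on at least
    `min_sites` distinct sites.

    `traces` is an iterable of (visitor_id, site, points) tuples; the visitor
    ID is assumed to come from a cookie or device fingerprint.
    """
    sites_seen = defaultdict(set)
    for visitor, site, points in traces:
        sites_seen[(visitor, direction_signature(points))].add(site)
    return {visitor for (visitor, _), sites in sites_seen.items()
            if len(sites) >= min_sites}


def hexagon(cx, cy, r):
    """Closed hexagonal path centred on (cx, cy): a stand-in for the scripted
    'random' movement described above."""
    return [(cx + r * math.cos(math.radians(60 * k)),
             cy + r * math.sin(math.radians(60 * k))) for k in range(7)]


if __name__ == "__main__":
    sites = ["news.example", "shop.example", "blog.example"]
    # Hypothetical bot: same hexagon, different size and position on each site.
    traces = [("bot_1", s, hexagon(100 * i, 50, 10 + 5 * i))
              for i, s in enumerate(sites)]
    # Hypothetical human: a different path on each site.
    traces += [
        ("human_7", "news.example", [(0, 0), (5, 1), (9, 7)]),
        ("human_7", "shop.example", [(0, 0), (-3, 4), (2, 2)]),
        ("human_7", "blog.example", [(0, 0), (1, -6)]),
    ]
    print(flag_repeated_patterns(traces))  # {'bot_1'}
```

Exact signature equality keeps the sketch short; a real detector would presumably need fuzzier matching, since a bot could add small jitter to each replay.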

I asked whether bots could become so adept at mimicking human behavior that they might eventually pass as Verified Humans.

It’s very difficult to do that across websites, Tatoris said, because “most bots are written to do one thing specifically,” and their makers have little incentive to make them more human-like across sites.

But what if AYAH and other human detectors become the norm? Wouldn’t bot-makers then have an incentive?

“The more successful we are,” Trenda admitted, “the greater that incentive will be.”

(Some images used under license from Shutterstock.com.)

Marketing Land – Internet Marketing News, Strategies & Tips
