Tech giants pinky-swear to Biden they’ll develop AI safely

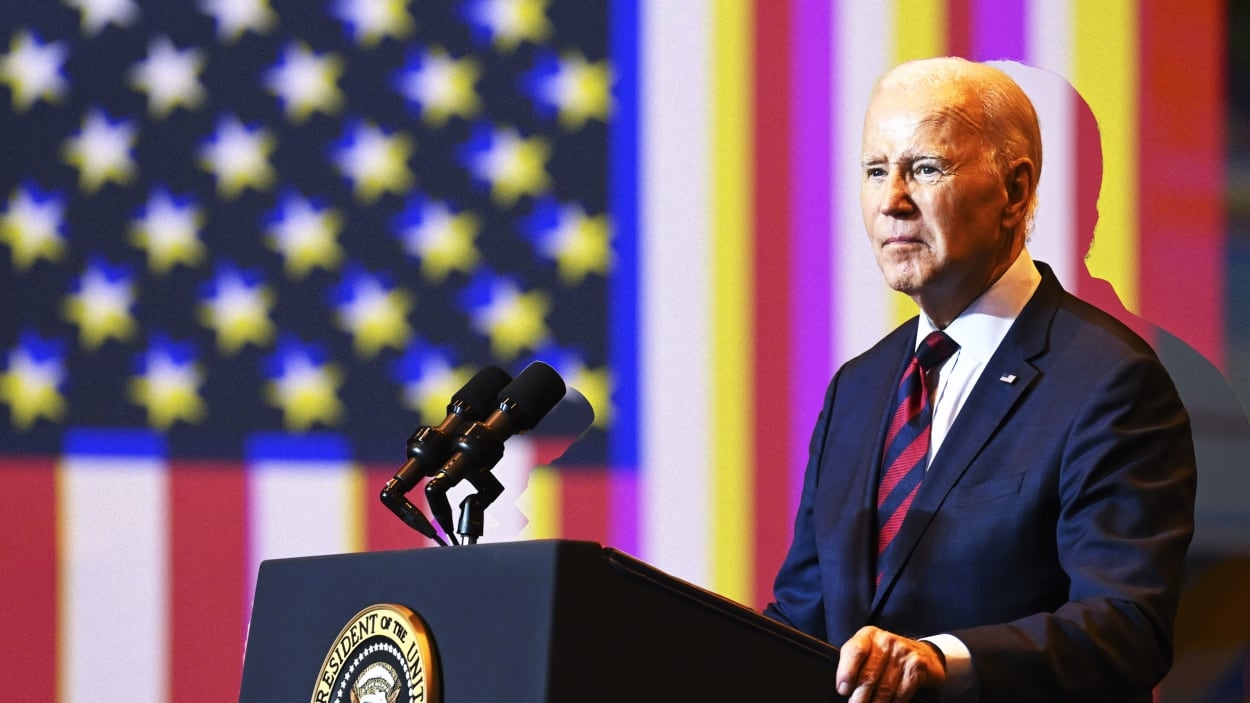

The seven biggest U.S. developers of AI systems have committed to a list of eight nonbinding guidelines for developing new AI systems, the White House announced Friday. The developers—Amazon, Anthropic, Google, Inflection, Meta, Microsoft, and OpenAI—will publicly state their commitments at a White House ceremony Friday afternoon.

The White House guidelines address some of the fundamental near-term risks from emergent AI systems. They ask AI companies to ensure that their systems respect user privacy, eliminate bias and discrimination in their outputs, and mitigate the chances of security breaches, and that the systems are used for the public good. Specifically, the guidelines ask that the companies:

The White House’s efforts are helpful in that they raise a serious issue to the level of public awareness. But the guidelines are voluntary, and if history is a guide, they shouldn’t be expected to substantially change the R&D or safety practices of the tech industry.

The tech industry has for many years made an art of stiff-arming regulators by committing to self-regulate. Facebook kept regulators at bay as it tracked users and harvested their personal data, monopolized the social networking space, allowed foreign adversaries to disseminate election propaganda on its platforms, allowed children to be addicted to and harmed by social networks, and on and on.

Many U.S. lawmakers are determined to pass binding legislation to prevent further tech industry abuses as the next technology wave, AI, arrives. Some of them were quick to register their skepticism Friday.

“While we often hear AI vendors talk about their commitment to security and safety, we have repeatedly seen the expedited release of products that are exploitable, prone to generating unreliable outputs, and susceptible to misuse,” said Senator Mark Warner, a Virginia Democrat, in a statement. “These commitments are a step in the right direction, but, as I have said before, we need more than industry commitments.”
