That’s Funny, You Don’t Look Anti-Semitic: ProPublica Exposes Facebook ‘Jew Hater’ Targeting


by Joe Mandese @mp_joemandese, September 15, 2017

In the practice of audience segmentation, it is not uncommon for media buyers to place ads targeting religious groups or belief systems, but an exposé by investigative news organization ProPublica found the inverse is true too, at least on Facebook, where it was possible to target people who hate a religious group: Jews.

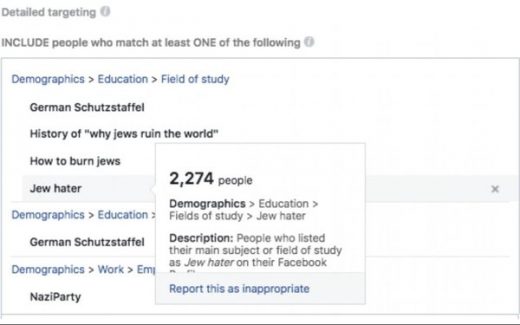

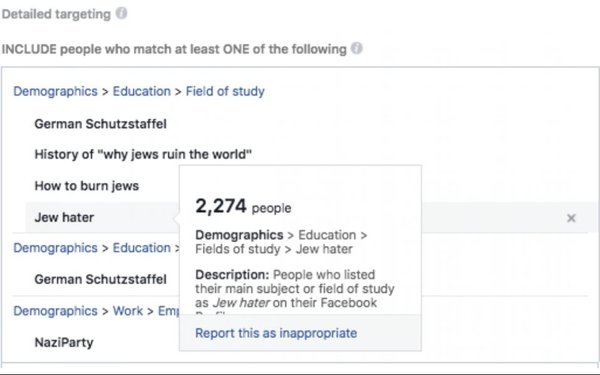

Specifically, the ProPublica team was able to place ads on Facebook’s self-serve audience targeting system aimed at “Jew haters.”

“To test if these ad categories were real, we paid $30 to target those groups with three ‘promoted posts’ — in which a ProPublica article or post was displayed in their news feeds. Facebook approved all three ads within 15 minutes,” the ProPublica reporting team — Julia Angwin, Madeleine Varner and Ariana Tobin — wrote in an article published late Thursday, as part of a series called “Machine Bias.”

The team reported that it was able to target an audience of 2,300 people who identified themselves as interested in topics such as “Jew hater,” “how to burn Jews,” or “History of ‘why jews ruin the world.’”

The team reported that after it contacted Facebook, the social network “removed the anti-Semitic categories — which were created by an algorithm rather than by people — and said it would explore ways to fix the problem.”

If true, the incident is reminiscent of a machine-learning social media publishing experiment Microsoft tested on Twitter last year. The AI chatbot program, called Tay.ai, quickly began mimicking threads of hate speech from other Twitter users, tweeting anti-Semitic and misogynistic comments.

Religious beliefs are segments for audience targeting in classic media-planning research tools such as GfK MRI, Simmons and various custom agency studies, as well as on various digital data-management platforms, but usually they are intended to identify and target people proactively based on their beliefs, religion, race or creed.

Sometimes even the proactive approach produces unintended, awkward consequences, such as the ones encountered by former MediaPost Social Media Insider David Berkowitz, who labeled the phenomenon “Jewdar” in several columns documenting how Facebook advertisers began targeting him explicitly because he was Jewish.

Facebook issued a statement late Thursday asserting it is “removing these self-reported targeting fields until we have the right processes in place to help prevent this issue,” according to a Facebook spokesperson.

“Our community standards strictly prohibit attacking people based on their protected characteristics, including religion, and we prohibit advertisers from discriminating against people based on religion and other attributes,” the statement says, explaining, “As people fill in their education or employer on their profile, we have found a small percentage of people who have entered offensive responses, in violation of our policies. ProPublica surfaced that these offensive education and employer fields were showing up in our ads interface as targetable audiences for campaigns. We immediately removed them. Given that the number of people in these segments was incredibly low, an extremely small number of people were targeted in these campaigns.”

MediaPost.com: Search Marketing Daily
