The New Story Of Computing: Invisible And Smarter Than You

Technology was supposed to make life simple. Instead, it made it more complex. The solution? Computing without computers.

Pizza, wine, and a movie at a friend’s house. Consider what’s involved to pull that off if you’re using your smartphone. Open the dining app to order food, check out Hello Vino to select a Chianti, go to Yelp to find a wine store en route, and run Waze to avoid heavy traffic along the way. Once there, you might tap a streaming video service to select a movie.

That’s five isolated apps and five separate steps to pull off something as simple as pizza night. The rest of our lives is just as complicated, if not more so. There are hundreds of apps, each designed to help us with a small slice of our life. Yet in pursuit of simplicity, we’ve actually made life more complex.

We are in need of a radical solution, a way to engage ambient, context-aware machines that can give us the best answer in any dynamic situation. We want the kind of computer that we’ve seen in TV shows like Star Trek and movies like Her, helping us accomplish tasks, from the mundane to the complex, and adjusting and learning as we interact with it. We need shapeless computers: in effect, computing without computers. And to get there, we must design an entirely new user experience, one that works invisibly alongside us and behaves almost like a human.

Invisible Machines

Today, screen-based devices dominate our computing experience. They’re unwieldy things, nagging at us to recharge them; interrupting us at dinner; and taking up space in our pockets, on our desks, and on our walls. We put up with them because they let us communicate easily and give us unprecedented access to information. But they are not inevitable. Very soon, thanks to several emerging technologies that are enabling new, lightweight interfaces, these machines will move out of the way and make computing a more natural part of daily life. Here are four interfaces that are paving the way for invisible computing:

Voice Control

Talking to a computer has long been the stuff of great science fiction. It is one of the touchstones of a more human form of computer interaction. Today, talking to a computer is becoming almost commonplace: Siri has become an integral part of Apple’s product ecosystem; Amazon’s Echo, a voice-controlled personal assistant, was Amazon’s best-selling device over $100 this Black Friday; and there is even a Barbie (Hello Barbie) that you can talk to. Voice is quickly becoming the always-on addition to modern systems, allowing us to issue ad hoc commands and to layer nuance and context onto other modes of input.

But even a perfect voice system, one as good as a dedicated human assistant, will never replace all forms of input. Instructing a person, much less a machine, is often not fast, convenient, or accurate enough when the instructions require precision or involve real complexity. Part of the challenge is the user. Most of us cannot form cohesive verbal instructions as quickly as we can choose between buttons and commands. In addition, environmental noise and social etiquette will continue to make typing and tapping important modes of input, regardless of advances in speech technology.

Heads Up, Hands Free

We’ll need more than touch screens to engage with shapeless computers. The touch screen, keyboard, and mouse aren’t going away, but they are starting to play smaller roles in our computing future. The original WIMP model (windows, icons, menus, pointer) is still the benchmark for high-fidelity, high-density tasks, such as building spreadsheets and slide presentations. But it’s woefully inadequate if you are trying to follow a recipe while cooking. Your laptop, tablet, or phone might be a handy tool, but it is very much in the way if you need to scroll down the page as you knead dough. We need new models of interaction that keep both hands free and our eyes attuned to the task.

Enter augmented reality (AR). The promise of AR is that it will enhance our abilities while keeping us immersed in the world, rather than forcing us to manage a device. But the version we first experience will not resemble what you see in sci-fi movies, at least not anytime soon—the “Terminator-vision” concept in which an implanted heads-up viewer augments our line-of-sight with a seamless layer of computer-rendered visuals and information. While such technology would have profound implications, we haven’t yet solved for the social complications of wearing bulky AR eyewear. It’s a common truth that “the eyes are the window to the soul,” and it will likely remain a huge challenge to successfully wear machines on our faces.

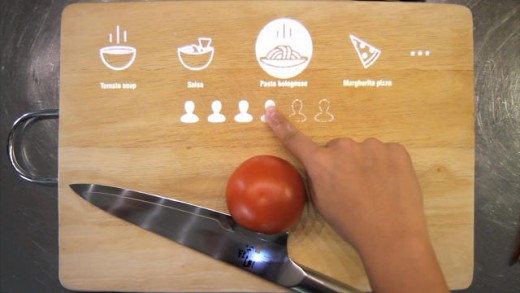

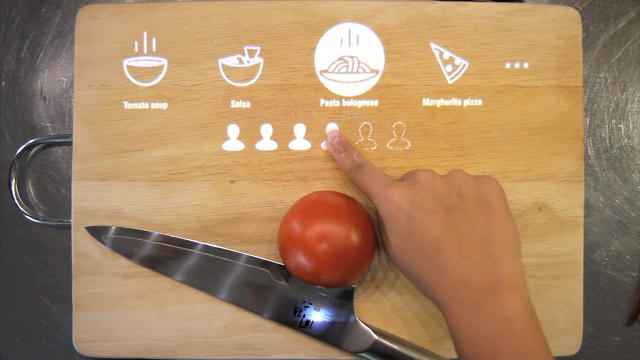

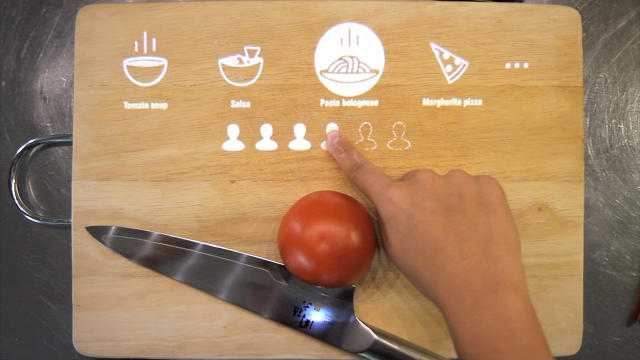

We can approximate the vision of augmented reality through a more practical means: computer projection onto the common surfaces around us, such as the tables, walls, and floors in our homes, offices, and public spaces. Such systems, if prevalent enough, could enable us to interact with a computer without a machine on hand. It means we could be busy cooking (messy hands, surfaces, and all) and still interact with a computer to help us. It means people could collaborate, in person, using projections on a shared tabletop. It means any natural surface can become a screen, available on demand, there when we need it, gone when we don’t. Jared Ficklin and I have spent the last few years, both while at frog and now at argodesign, exploring the practicality of this vision. You can see our prototype here:

Computers in Concert

Given how important collaboration is in the workplace, it’s a wonder that we still have so few in-person shared computing solutions. Today we experience computing in a very direct manner—one person operates one machine. This makes designing, selling, and using computers simple. But it’s also very limiting. If you want to ask a computer a question, you need to have one in front of you, no matter whether you’re conversing with friends or driving a car. You need the machine, the app, and the network to get things done. And you need to have it with you.

Picture instead a flexible and all-encompassing “virtual machine” in your home, car, or office composed of all the available computers around you. These computers would be cooperatively tasked according to the needs of the moment, working in concert to serve you. This model would be ideal for offices, where people meet in conference rooms aided by their laptops, phones, and watches, as well as fixed hardware such as a ceiling projector and conferencing systems. Today these devices operate almost completely independently of each other. Imagine if these machines, aware of context, could work together to accurately record a meeting, identify each speaker, and translate the recording into detailed meeting notes with action items. These notes could be automatically and securely shared alongside key documents with everyone in the meeting. An ambient system such as this one could reinvent the social dynamics and value of meetings—not simply formal meetings, but water-cooler exchanges as well.

The underlying concept is not new. Early UNIX was architected so that while the keyboard and screen sat on a desk, the critical CPU power might be in a separate room and storage perhaps in another. This idea went out of fashion when the personal computer stitched those elements—not to mention office tasks like word processing, spreadsheets, and email—all together into a single box. But today we are revisiting this old concept with new thinking around the computer interface. Today our laptops, phones, and watches seamlessly interact with shared cloud services to bring us the best experience. As we develop the shapeless computing of tomorrow, we can extend this “cloud thinking” to the interface and compose virtual entities made up of an expanding array of machines within each user’s reach and permission.

The World Is Your Interface

I have previously written about the concept of imbuing our world of “dumb things”—light switches, doorknobs, salt shakers, and the like—with on-demand computer intelligence.

Why do we need a smart salt shaker? One major obstacle to the much-vaunted Internet of Things (IoT), the brave new world of everyday objects imbued with high-speed computing and communications, is that it requires a massive proliferation of devices along with a corresponding expansion in computer processing, networking equipment, and power sources. So just as cloud computing moved expensive and complicated computers into concentrated server farms, the concept of Smart-Dumb Things (SDT) could bring to life a more accessible and practical IoT enabled by fewer centralized resources.

Before you imagine the talking candlestick from Beauty and the Beast, let me explain further. The current concept of the smart home entails remote-controlled lights, switches, thermostats, and audio controls. Even a simple installation in a single room requires three or four separate devices, each with its own power requirements, management, and network demands. Instead, our SDT would employ a single device, centrally located in the room. This hidden machine, using computer vision, would essentially “watch” the room for human interaction on a trained surface or object, and translate that interaction into any number of smart-home related actions. Dumb light switches could be trained to act as smart light switches. Even paper drawings of controls placed anywhere in the room could be trained as smart controls. Or random objects, like a salt shaker, could be trained as a volume knob for the home stereo. The world around us becomes the interface—there when we need it, gone when we don’t.
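As a rough illustration of the training idea described above, here is a minimal Python sketch. It assumes a vision layer already exists and reports what it saw as an object name plus a gesture; that layer, the `TrainedRoom` class, and the example gestures and actions are all hypothetical names invented for this sketch, not part of any real product.

```python
from typing import Callable, Dict, Optional, Tuple


class TrainedRoom:
    """Maps (object, gesture) pairs seen in a room to smart-home actions."""

    def __init__(self) -> None:
        self._bindings: Dict[Tuple[str, str], Callable[[], str]] = {}

    def train(self, obj: str, gesture: str, action: Callable[[], str]) -> None:
        """Teach the room that a gesture on an object triggers an action."""
        self._bindings[(obj, gesture)] = action

    def observe(self, obj: str, gesture: str) -> Optional[str]:
        """Handle one event reported by the (assumed) vision layer."""
        action = self._bindings.get((obj, gesture))
        # Interactions that were never trained are simply ignored.
        return action() if action else None


# Hypothetical training session: ordinary objects become controls.
room = TrainedRoom()
room.train("salt shaker", "twist clockwise", lambda: "stereo volume up")
room.train("paper switch drawing", "tap", lambda: "lights toggled")
```

Calling `room.observe("salt shaker", "twist clockwise")` runs the stereo action, while an untrained interaction such as a tap on a doorknob returns `None`. The point of the design is that the intelligence sits in one central device and the objects themselves stay dumb.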

Smart(er) Machines

A proliferation of lightweight interfaces alone won’t streamline computing. We need artificial intelligence to complete the picture.

The hope for artificial intelligence is distorted by talk of “sentient machines” that think and act like humans. That potential distant future should not distract us from the very real technology currently being built. At argodesign I have been working with a company called Cognitive Scale to develop the user interfaces for cognitive computers that assist people with complex tasks. These systems can identify patterns in complex data, adjust to the context at hand, offer credible recommendations in plain English, and make decisions on your behalf. And they improve their capabilities the longer they work with you.

In industries like health care, where billions will be saved through better data management and decision-making, this type of computer acts like a virtual partner, conferring powers that neither human nor machine would have working alone. Imagine a physician at a large hospital whose cognitive computer autonomously correlates regional pollen levels with patient medical records, identifying those at high risk for asthma attacks. The computer sends a smartphone message to high-risk patients with personalized recommendations for avoiding an attack, and also alerts certain emergency rooms when they should expect an influx of asthma cases. This is not science fiction. It is instead the most exciting and profound change in computing today.
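To make the asthma example concrete, here is a deliberately simplified Python sketch. It swaps the cognitive system for a plain rule: flag asthma patients whose region has a pollen reading at or above an alert level. The `Patient` fields, the pollen index scale, and the `alert_level` default are assumptions made for illustration only; a real system would learn far richer patterns from far richer records.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Patient:
    name: str
    region: str
    has_asthma: bool


def high_risk_patients(
    patients: List[Patient],
    pollen_index: Dict[str, float],
    alert_level: float = 9.0,  # assumed cutoff, not a clinical standard
) -> List[Patient]:
    """Return asthma patients living in regions at or above the alert level."""
    return [
        p for p in patients
        if p.has_asthma and pollen_index.get(p.region, 0.0) >= alert_level
    ]


def draft_message(patient: Patient) -> str:
    """Compose the text a patient might receive on a smartphone."""
    return (
        f"{patient.name}, pollen levels in {patient.region} are high today. "
        "Please keep your inhaler nearby."
    )
```

Regions missing from `pollen_index` are treated as having no reading, so patients there are never flagged by mistake.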

Unlike traditional computing, which requires specific and focused human input, a cognitive system saves time by engaging in natural dialogue, understanding context, and drawing conclusions it assumes we may find useful. Rather than serving up content explicitly requested, the system can hunt for information on our behalf and make its own recommendations. Moreover, it is designed to tap into its work history to make better recommendations over time.

All About Cues

Getting to this point requires an entirely new interface for computing: a series of new cues for human-computer engagement. For instance, we all intuitively understand that the tiny trash basket on our screens is a delete icon. But as we reinvent computing, we’ll have to think beyond the keyboard and screen. It’s easy enough to imagine how we might issue commands; voice, gestures, and touch are instinctive to humans. But how might such a computer respond? Its responses will need to be ambient, subtle, and nuanced enough that they contribute to the task at hand without distracting us.

This phenomenon is already well underway. A rich vocabulary of notification beeps, taps, and dialogs is emerging. These cues mimic ordinary human signals, called “phatic expressions” (simple winks, nods, or shrugs), to facilitate communication without interrupting. Our ambient computers will increasingly need to interject at the right moments to ask us questions. This new vocabulary of cues will begin to add up to an entirely new language of human-computer interaction.

Today when we’re interrupted by a computer, we’re largely reading system alerts or messages from other people. Similarly, when we use a computer to search for content online, the machine retrieves content that was most likely created by another person. In this manner, the variety and precision of what you find in these alerts or search results are limited to what other people have created. The best the computer can do is deliver what you asked for and leave it to you to draw your own conclusion. But cognitive systems dramatically change this equation. They can spare you the lengthy search results and compose precise answers or hypotheses. As computers begin to deliver this kind of rich communication, we will be challenged in several ways.

Today we have low expectations for quality and precision from a computer when it comes to complex ideas. It’s even baked into the way we talk about it. We don’t call computer queries “questions,” we call them “searches.” The computer delivers us “results,” not “answers.” We assume it’s still our job to sift through the results for the right answer. As we begin to rely on a cognitive system to give us more precise answers, the burden will be on the software to “prove” how it came to those conclusions—to earn our confidence. A physician, for example, would need to have significant trust in her cognitive computer to rely on it for critical decisions about patient care.

The question is, how do you make it possible for a computer to build this trust? Humans use patterns of speech to convey confidence: “There’s a chance of rain” or “This plane might arrive late.” We also engage in layers of dialogue to verify assumptions: “How do you know that?” Are there visual cues or verbal methods a cognitive computer could use to convey confidence?

New interfaces will need to indicate when a machine, not a human, has reached a conclusion, and use a corresponding array of indicators that replace traditional human signals of reliability and confidence. With many of our data-centric technology customers such as Cognitive Scale, we have been developing interfaces that address just this challenge. We have been exploring interfaces that deliver content combined with new expressions of confidence. This kind of feedback drives the actual phrasing of the message (“We believe X to be true” or “It seems as if X is true”) as well as secondary cues like a “confidence meter” that help the user understand this is no longer a black-and-white world.
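One way to picture confidence-driven phrasing is the small Python sketch below. It assumes the system can attach a confidence score between 0 and 1 to each conclusion. The cutoffs of 0.8 and 0.5, the third "not sure" phrasing, and the text-based meter are hypothetical choices made for this illustration, not the interfaces described above.

```python
def phrase_with_confidence(claim: str, confidence: float) -> str:
    """Wrap a machine-generated claim in wording that matches its confidence."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    # Hypothetical cutoffs; a real product would tune these with users.
    if confidence >= 0.8:
        return f"We believe {claim} to be true."
    if confidence >= 0.5:
        return f"It seems as if {claim} is true."
    return f"We are not sure whether {claim} is true."


def confidence_meter(confidence: float, width: int = 10) -> str:
    """Render a simple text 'confidence meter' as a secondary cue."""
    filled = round(confidence * width)
    return "[" + "#" * filled + "-" * (width - filled) + "]"
```

For example, a claim scored at 0.9 is phrased as a belief, while the same claim at 0.6 is softened to "It seems as if". Pairing the sentence with the meter gives the reader two independent signals of how far to trust the answer.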

Cognitive systems need natural, fast, and intuitive methods to build trust. When you think of it that way, it’s really about decoding how people think and communicate. We learn a lot more about a person when they are making decisions, even the most trivial ones—like what’s for lunch. Our task is to help cognitive systems come across as reliable and credible in their decision-making, regardless of the context.

Making Computers Personal

Remember Clippy, the 1990s-era Microsoft Office assistant that would chime in every few minutes to offer you a not-so-helpful bit of advice as you worked on a document? Clippy was a huge failure because it attempted to engage us in a human-like manner, but without the authentic human qualities we expect. In the 1970s the robotics professor Masahiro Mori coined the term “uncanny valley” when observing the revulsion people felt about simulated human characteristics. We’re perfectly fine with cartoon people on television, but mechanical humans in the real world give us the creeps. The reason is that a deep affinity for authentic human behavior and form is encoded in our biology. You can see the same phenomenon in our reaction to cognitive systems. On one hand, we want those systems to engage us with more humanity. On the other hand, we deeply reject them when they get it wrong. This may be the most profound user interface design challenge we face as computing moves from blunt tools to intelligent behavioral systems.

Today most engagements with software are impersonal. The machine doesn’t know us and its ability to respond is relatively fixed. For example, if I ask for directions from home to work using Google Maps, I’m likely to get the same answer every time—despite the fact that traffic conditions are constantly changing.

Cognitive computing systems are designed to learn from, and about, the people who use them, so these machines can develop more sophisticated responses over time. Every time such a system faces a new abstract idea and doesn’t have a way of assigning meaning to the abstraction, it must do one of two things. First, it will run a software algorithm (a probability exercise) to come up with its best guess. Second, if that guess is not good enough, it may need to simply ask a human for input.
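The guess-then-ask behavior can be sketched in a few lines of Python. This is an illustration under stated assumptions, not how any particular cognitive system works: it assumes the system has already scored candidate meanings with probabilities, and the `resolve_meaning` function, its `threshold` value, and the `ask_human` callback are hypothetical names introduced here.

```python
from typing import Callable, Dict


def resolve_meaning(
    candidates: Dict[str, float],
    ask_human: Callable[[str], str],
    threshold: float = 0.75,  # assumed cutoff for trusting the best guess
) -> str:
    """Return the most probable meaning, or ask a person when unsure."""
    if not candidates:
        return ask_human("I have no guess. What did you mean?")
    # Step one: the probability exercise picks the best guess.
    best, probability = max(candidates.items(), key=lambda item: item[1])
    if probability >= threshold:
        return best
    # Step two: the guess is too weak, so ask a human for input.
    return ask_human(f"My best guess is '{best}'. What did you mean?")
```

In a real product the human's answer would also be stored, so that the same question does not need to be asked twice.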

We all have habits and preferences and we’ll want to teach computers to adapt to us. For instance, if your personal assistant interrupts an important phone call to let you know your spouse is on the other line, you might ask the assistant to send a text message the next time. You should be able to train your computer as well, so it knows when and how to nudge you, inform you or interrupt you, if necessary.

We must develop the means of teaching the machine, decide how it should admit its ignorance, and then give it the capability to close knowledge gaps. We must teach it when best to tell us something, when to keep something in mind, and when to forget. These “teaching moments” can happen naturally. Back to the pizza, wine, and movie anecdote at the beginning of this essay—imagine that instead of all those apps, you had a cognitive computer program on your phone. It would identify the pizza restaurants that are open, deliver to the neighborhood, and have good reviews. If you say that you prefer a certain pizza place, it will remember it next time. And it will correlate that choice with your wine, movie, transit, and purchasing preferences, so that your night’s entertainment takes far fewer steps to line up.

Cognitive computing pairs gracefully with invisible interfaces. Together they are the next logical step in creating a wide range of products, services, and experiences that are more seamless and elegant. As consumers, we’re demanding increasingly convenient ways to do everyday things like ordering food or finding a cab. As professionals, we want tools that help us perform better without getting in the way. The most loved products are the ones where we can complete the task at hand and not notice the technology that made it so effortless.

My hope is that the technology giants of the world dive in headlong. For better or worse, the world needs an iconic proof point, an “iPhone” of sorts, to illustrate the promise of this next generation of computing. The raw computing power and rich data required to achieve this vision are close at hand. Much of it exists today. The challenge is to put the pieces together into ambient computing with which humans can engage seamlessly and naturally: computing we can trust and teach. Our computing experience may someday resemble Cyrano de Bergerac from the French play, who hides in the bushes while giving the hapless Christian the perfect words to woo the woman of his dreams. It’s easy to imagine such computers serving as our romantic counselors and much more, connecting the dots to help us simplify our complex lives.

Fast Company
