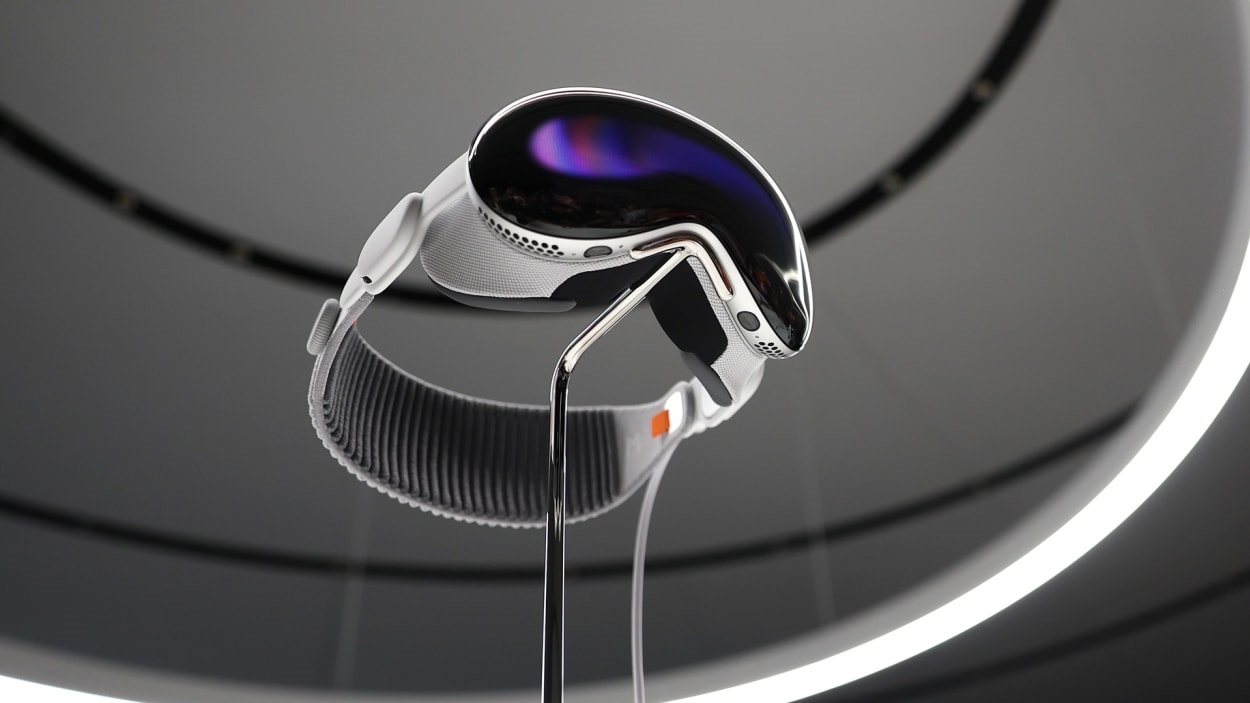

The Vision Pro will have to do until Apple’s ‘real’ spatial computing device shows up

A week after the announcement of Apple’s Vision Pro mixed reality headset, many observers have come to the conclusion that the $3,499 device is not as groundbreaking as Apple might have you believe.

Rather than being a revolutionary “spatial computing” device that ushers in a new way for humans to do their personal computing, the product is really more like a VR headset with some mixed reality features awkwardly bolted on.

Just look at the use cases Apple presented: watching movies, doing work-work in several virtual screens, FaceTiming. Most of these things can be done just as well in a closed virtual reality setting with no integration of the world outside the headset.

To create the “spatial” part, Apple’s headset blocks the user’s direct view of the world and substitutes in a (very high quality) digital representation of the real world (called a “passthrough” image) as captured by the device’s cameras. The headset then mixes the digital content of its apps—movies, 3D photos, FaceTime faces, etc.—with the passthrough image. A dial on top of the headset adjusts the relative levels of the two, from maximum mixed reality to fully closed-off dark-theater mode VR.

But all of this depends on the headset forming a seal over the eyes to block out all light from the real world. Apple was very concerned about this design because it isolates the user behind a piece of technology, an idea that would make Jony Ive roll over in his chauffeur-driven Bentley. That’s one reason the quality of the “passthrough” image is so important—it helps the user forget that their eyes are completely covered up. (My colleague Harry McCracken, who tried out the Vision Pro at Apple, said that he felt very comfortable interacting with other people in the room while wearing the headset.)

And Apple was so concerned about the anti-social implication of a headset that covers up the user’s eyes that it spent considerable R&D resources building the “EyeSight” feature, which simulates the user’s eyes on the front of the headset for the benefit of others. The feature also shows when the user is engaged in an app and not attentive to others.

Despite these workarounds, the Vision Pro is still a large-ish, closed, opaque piece of gear that straps to the face. It’s not a very social device. It’s not something a lot of people will wear to the park to play Pokémon Go. It’s a device that’s best used alone, in the safety of one’s own home.

A purer mixed-reality experience would be one where the lenses of the wearable are see-through. A number of XR devices, including the Magic Leap 2 and Microsoft’s HoloLens, use see-through lenses. Apple reportedly considered using an open headset design with transparent lenses, but decided early on that the technology wasn’t ready for prime time.

Actually, one day after the announcement of the Vision Pro, news broke that Apple had acquired a Los Angeles-based company called Mira whose product projects the interface from an iPhone onto flip-down transparent lenses. It’s easy to see where Apple is headed with future versions of its XR product. It wants to make svelte, stylish XR glasses that have see-through lenses. That would be a far truer expression of the company’s excitement about spatial computing.

The Apple Vision Pro, then, should be seen as a precursor, a placeholder, and a work in progress, one that will likely bear little resemblance to the “Vision” product the company releases five years from now.

Cultural hurdles

But even with a stylish transparent design, spatial-computing nirvana may still be out of reach. An open and transparent design will naturally invite users to wear their “face computer” in public. And that could bring in a whole new set of problems—the same ones Google encountered with its Glass product. Glass wasn’t the target of derision because the device looked nerdy. It was mocked mainly because bystanders feared the technology might be recording and analyzing them in some way.

Face-worn computers do a lot more than that, actually, especially when combined with powerful AI models running on the device. From a purely technical perspective, XR glasses can collect all kinds of data from their array of cameras, sensors, and microphones, such as data about the user, their habits, the places they go, and what they do there. Using all that information, the AI might make deep inferences about what information the user might want in various places or contexts, then provide it to them proactively. This makes possible a personal digital assistant that knows the user intimately, mainly because of its ability to gather sensory information in situ.

But, in theory, the technology could also identify people with whom the user comes in contact, and perhaps call up social, business, or personal information about them, and display it within the lenses of the glasses. Or, the AI may make inferences about the emotional meaning of facial movements made by another person during an up-close conversation. That may be great for the wearer, but disconcerting for the person on the other side of the camera.

Google has announced that it will finally discontinue the Glass (enterprise) product this fall. But wearable, spatial computing lives on, and society will evolve toward acceptance or rejection at its own pace. As powerful a technology as Apple’s future XR will likely be, it’s unlikely to cause an immediate cultural shift toward accepting new face-worn computer technology just because it bears the Apple logo.
