This Robot Intentionally Hurts People–And Makes Them Bleed

Asimov’s First Law of Robotics is very clear: Robots may not harm people.

Although there are certainly plenty of large robots, often used in manufacturing, that one would have to consider dangerous, roboticists have generally hewed to that rule.

The “law,” penned by science-fiction giant Isaac Asimov in his 1942 short story Runaround, was one of three rules, the second of which reads, “A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.”

To be sure, accidents involving robots happen, like when someone gets too close to an industrial robot.

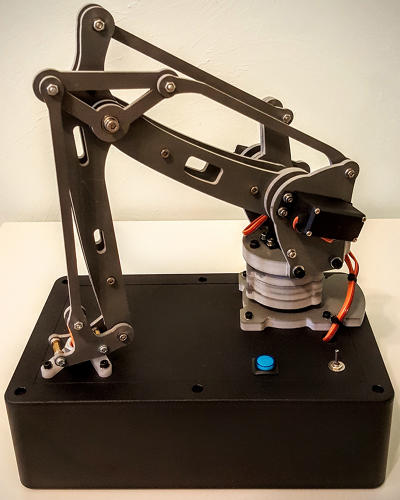

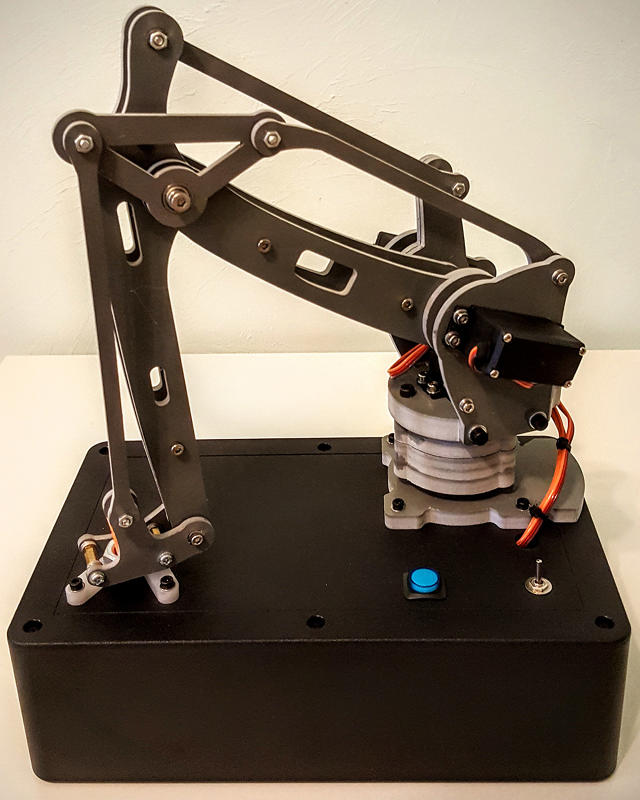

But now a Berkeley, California man wants to start a robust conversation among ethicists, philosophers, lawyers, and others about where technology is going and what dangers robots will pose to humanity in the future. Alexander Reben, a roboticist and artist, has built a tabletop robot whose sole mechanical purpose is to hurt people. Reben hopes his Frankenstein gets people talking.

Before you bar your doors and windows, let’s define the terms: The harm caused by Reben’s robot is nothing more than a pinprick, albeit one delivered at high speed, causing the maximum amount of pain a small needle can inflict on a fingertip.

And interestingly, he designed the machine so that injury is inflicted randomly. Sometimes the robot strikes. Sometimes it doesn’t. Even Reben, when he exposes his fingertip to danger, has no idea if he’ll end up shedding blood or not.

“No one’s actually made a robot that was built to intentionally hurt and injure someone,” Reben claims in a giant room on the top floor of the beautiful Victorian mansion where he lives and works as a member of Stochastic Labs, a Berkeley arts, technology, and science events incubator. “I wanted to make a robot that does this that actually exists…That was important, to take it out of the thought experiment realm into reality, because once something exists in the world, you have to confront it. It becomes more urgent. You can’t just pontificate about it.”

Asked for her opinion about the experiment, Kate Darling, a researcher at the MIT Media Lab who studies the “near-term societal impact of robotic technology,” says she likes it, mainly because it involves robots. “I don’t want to put my hand in it, though,” she adds.

Reben is perhaps best known as the creator of the BlabDroid, a small, harmless-looking robot that somehow inspires the people it encounters to tell it stories about their lives. His work over the years has centered on the relationships people have with technology and how that technology can help us understand our humanity.

He has been acutely aware that people are increasingly afraid of robots—either because they pose some sort of theoretical physical danger to us or because they’re seen by many to be marching toward replacing us. “Robots are going to take over,” or “Robots are going to take our jobs” are common refrains these days.

Reben wants to force people to confront the issue of how to deal with threats from robots well before they actually happen. Normally, such a job might fall to academics, but Reben believes no research institution could get away with building a robot that actually hurts people. Similarly, no corporation is going to make such a robot because, he believes, “you don’t want to be known as the first company that made a robot to intentionally cause pain.”

Better, he says, to leave such things to the art world, where “people have open minds.”

Given that his robot isn’t ripping people’s arms off or smashing anyone to little bits, it’s likely that there won’t be much outrage, at least not the kind that would result if his machine were causing severe harm.

Reben hopes people from fields as disparate as law, philosophy, engineering, and ethics will take notice of what he has built. “These cross-disciplinary people need to come together,” Reben says, “to solve some of these problems that no one of them can wrap their heads around or solve completely.”

He imagines that lawyers will debate the liability issues surrounding a robot that can harm people, while ethicists will ponder whether it’s even okay to think about such an experiment. Philosophers will wonder why such a robot exists.

But there’s reason to believe that Asimov’s laws would never have protected us anyway.

“The point of the Three Laws was to fail in interesting ways; that’s what made most of the stories involving them interesting,” Ben Goertzel, AI theorist and chief scientist at financial prediction firm Aidyia Holdings, told io9 in 2014. “So the Three Laws were instructive in terms of teaching us how any attempt to legislate ethics in terms of specific rules is bound to fall apart and have various loopholes.”

Darling contends that, experiment or not, Reben bears the ethical responsibility for any harm caused by his robot since he is the one who designed it.

“We may gradually distance ourselves from ethical responsibility for harm when dealing with autonomous robots,” Darling says. “Of course, the legal system still assigns responsibility…but the further we get from being able to anticipate the behavior of a robot, the less ‘intentional’ the harm.”

She also believes that as technology improves, we may have to rethink the way we look at machines.

“From a responsibility standpoint,” Darling says, “robots will be more than just tools that we wield as an extension of ourselves. With increasingly autonomous technology, it might make more sense to view robots as analogous to animals, whose behavior we also can’t always anticipate.”

As for Reben, he just hopes people stop sticking their heads in the sand as autonomous technology advances.

“I want people to start confronting the physicality of it,” Reben says. “It will raise a bit more awareness outside the philosophical realm.”

“There’s always going to be situations where the unforeseen is going to happen, and how to deal with that is going to be an important thing to think about.”


Fast Company
