What Emotion-Reading Computers Are Learning About Us

Could your fridge sense that you’re depressed, and stop you from binging on the chocolate ice cream?

Rana el Kaliouby, a Cambridge- and MIT-trained scientist and leader in facial emotion recognition technology, is concerned about how computers are affecting the emotional lives of her two small children.

Much research suggests that emotional intelligence develops through social interaction, yet children are spending more and more of their days in front of computers, tablets, and smartphones. Today, children under the age of eight spend an average of two full hours a day in front of screens. El Kaliouby is deeply concerned about what happens when children grow up around technology that neither expresses emotion nor reads ours. Does that cause us, in turn, to stop expressing emotion?

Recent research suggests the answer may be yes. A University of California, Los Angeles (UCLA) study last year found that children who had regular access to phones, televisions, and computers were significantly worse at reading human emotions than those who went five days without exposure to technology.

But el Kaliouby does not believe the solution lies in ridding the world of technology. Instead, she believes we should be working to make computers more emotionally intelligent. In 2009, she cofounded a company called Affectiva, just outside Boston, where scientists create tools that allow computers to read faces, precisely connecting each brow furrow or smile line to a specific emotion.

The company has created an “emotion engine” called Affdex that studies faces from webcam footage, identifying subtle movements and relating them to emotional or cognitive states. The technology is sophisticated enough to distinguish smirks from smiles, or unhappy frowns from the empathetic pursing of lips. It then uses these data to measure the subject’s level of joy, surprise, or confusion.

When I met el Kaliouby at TEDWomen in May, she put me in front of an iPad equipped with Affdex technology. An image of my face appeared onscreen, covered in dots that seemed to track the wrinkles on my forehead, my smile lines, my cheekbones, and dozens of other relevant points on my face. When I smiled a little, the joy index rose slightly; when I burst into a full-blown laugh, it shot up. When I watched a short video, a graph emerged that tracked my facial expression second by second, revealing which parts of the video I found engaging or funny.

“The technology is able to deduce emotions that we might not even be able to articulate, because we are not fully aware of them,” el Kaliouby tells me. “When a viewer sees a funny video, for instance, the Affdex might register a split second of confusion or disgust before the viewer smiles or laughs, indicating that there was actually something disturbing to them in the video.”

As Affectiva’s chief scientist, el Kaliouby believes the applications of emotionally intelligent technology are potentially endless. Affectiva’s technology is currently being used in political polling to identify how people react to political debate, and in games that help to train children with Asperger’s to interpret emotion.

But imagine the possibilities: At some point in the future, el Kaliouby suggests fridges might be equipped to sense when we are depressed in order to prevent us from binging on chocolate ice cream. Or perhaps computers could recognize when we are having a bad day, and offer a word of empathy—or a heartwarming panda video.

For the time being, the majority of Affectiva’s clients are companies interested in better understanding their customers. For instance, brands like Coca-Cola and Mars and TV networks like CBS are using this technology to track how people react to television ads or programming. By studying the faces of viewers as they watch a video, the technology can pinpoint the exact moment an ad provokes enjoyment, boredom, or confusion.

So far, the company has analyzed more than 2.9 million videos of faces from subjects in 75 countries. The resulting repository of emotion data points has allowed el Kaliouby and the rest of her Affectiva research team to identify larger patterns in human emotional behavior.

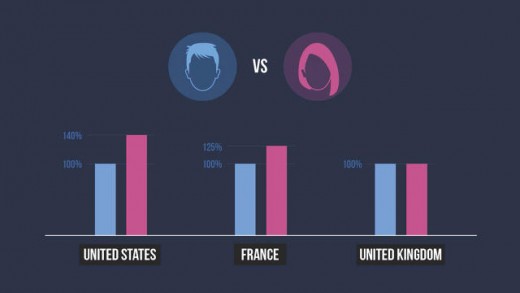

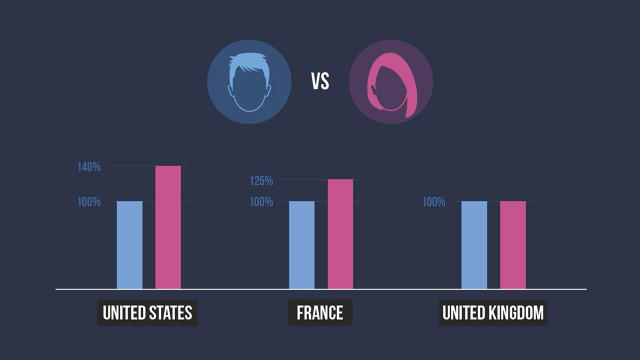

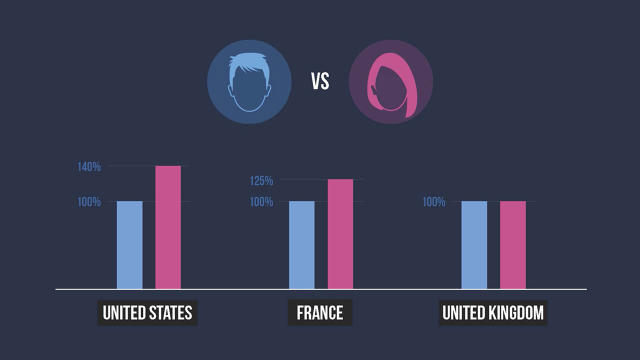

For instance, the database offers insight into how facial expressions differ by gender. Women, it turns out, tend to smile longer and more intensely than men.

These gender differences, however, vary by country. In the United States, women smile 40% more than men, while in France, women smile only 25% more than men. In the United Kingdom, on the other hand, women and men smile at the same rate.

There are also interesting differences by age: people over 50, men and women alike, tend to be more emotionally expressive than those under 50.

These insights are fascinating, because psychologists have been trying to understand gender differences in smiling for years now. Many have relied on subjects tracking their own smiles and reporting back to researchers. However, there hasn’t been a large-scale, comprehensive study of the differences in male and female facial expressions, and certainly not one that tracks differences across cultures.

To el Kaliouby, these kinds of insights are among the most interesting, because she is ultimately less interested in how human beings interact with machines than in how they interact with one another. In its current form, technology appears to be making us less emotive, which she believes inevitably affects the way we relate to one another. “I think it is possible,” she says, “for us to live in a world where we derive all the benefits of technology without losing the emotions that make us human.”


Fast Company
