What natural language pros are saying about Google’s Duplex

Google’s much-hyped reservation-setting AI, Duplex, is a one-trick pony with nowhere near the conversational versatility we’ll see in the artificial general intelligence (AGI) of the future. But the natural language interface it employs is provocative because it suggests how we might one day interact with AGIs.

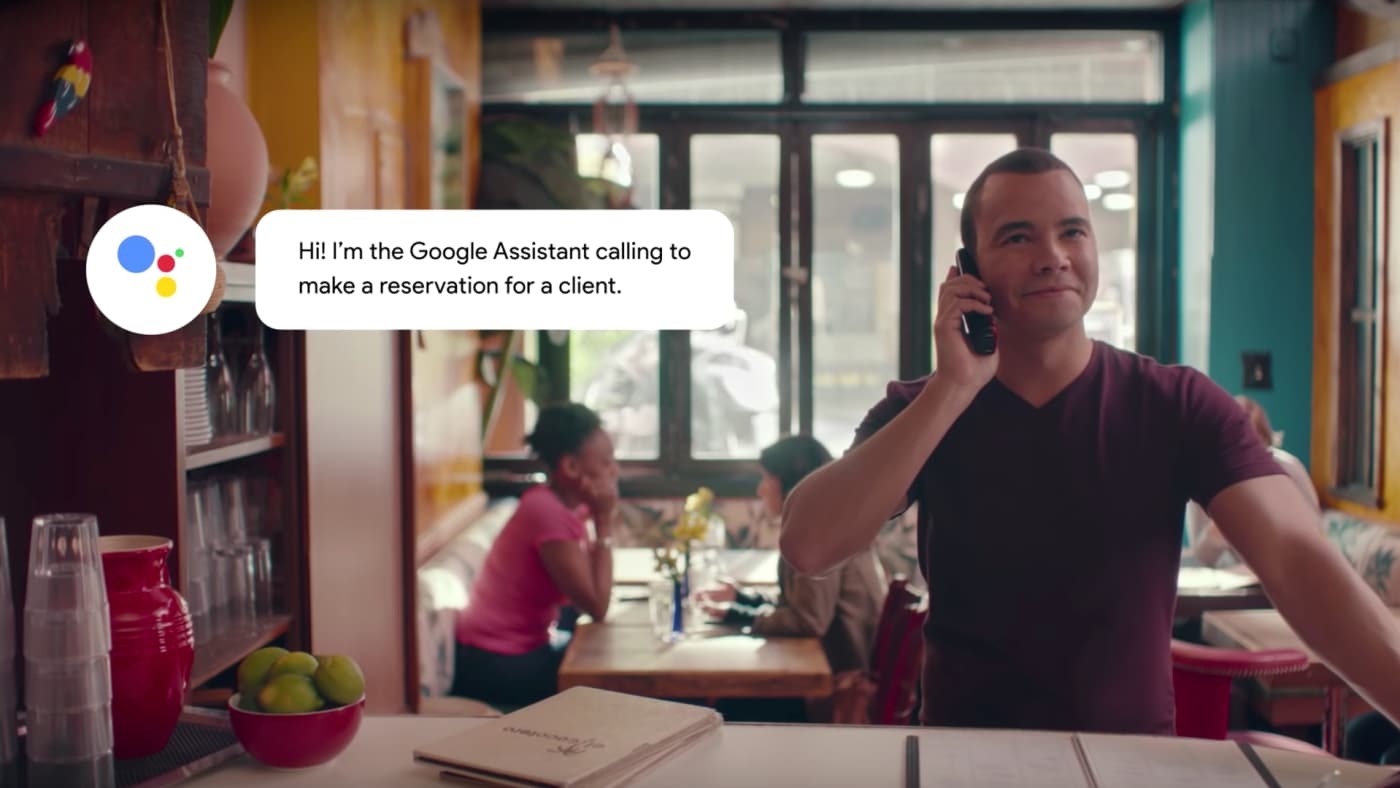

Earlier this week Google invited journalists to field calls from the AI, which tried to say the right words and phrases to set a restaurant reservation (the demos were held at local restaurants in New York and the Bay Area) and to sound natural and human in the process.

The knowledge baked into Duplex consists mainly of terms and times necessary for making a reservation. That narrow data set is not what has people talking; it’s the natural language interface that Duplex uses to communicate with humans. It injects human-sounding “uhs” and “ums” into its speech. It uses dialect: The voices in the demos sound like SoCal surfer dudes and chicks.

Within a narrow context it does feel like engaging in a real conversation, as our Katharine Schwab reported after fielding a call from Duplex at a Thai restaurant in New York. “With its SoCal intonation, pauses, and ‘ums’ peppered throughout the conversation, it sounded uncannily human,” Schwab wrote. “By the end of the conversation, I’d almost forgotten that I was speaking with a powerful piece of machine learning software.”

For now, the public discussion around Duplex may be more important than the technology that Google developed. We may now be having the first of many public conversations about the practical ways in which AGIs should talk to humans.

How much do we want AIs to sound like us? How wide is that uncanny valley between AIs sounding nothing like us and everything like us? When, in conversation, should an AI identify as an AI?

Google has already responded to some initial unease over Duplex by making sure the AI identifies itself early in the calls. “As we’ve said from the beginning, transparency in the technology is important,” a Google spokesperson said in an email.

“We are designing this feature with disclosure built-in, and we’ll make sure the system is appropriately identified,” she said. “What we showed at I/O was an early technology demo . . .”

Botnik founder Jamie Brew thinks Duplex’s “uhs” and “ums” are more than cosmetic. “At least some of those stalling words are a way of honestly signaling that the computer is processing,” Brew says. “I sometimes say the whirr of the central processor is computer-speak for ‘hmm’; this Google implementation actually brings that into the conversation, which is neat.” Botnik created an AI predictive text keyboard that can be used by creatives for idea generation. Amazon invested.

“So, they’re headed in the right direction–not toward a perfect simulation of human thought, but toward making machine thought legible to people, using the cues we understand,” Brew says.

Narrow domains

Brew says Duplex succeeds because it operates in a narrowly defined, goal-directed domain. It concerns itself only with the knowledge base or training data needed to successfully set up an appointment or request specific information. It’s nowhere near AGI, or artificial general intelligence.

“This is not in any way passing the Turing Test, as the AI has been trained on specific scenarios, much like you create ‘skills’ for Alexa,” says Snips.ai CEO Rand Hindi. “It is not capable of having an open conversation, only conversations it has been trained to learn to handle.” Snips is an open-source voice assistant.

“I think it is more of a design breakthrough than a tech one,” Hindi added.

Still, AGI is not science fiction. Companies like Google and IBM are working on AI that can participate in more open-ended or ad hoc conversations with humans. IBM’s Watson AI recently participated in an open-ended debate with a human opponent. Siri developer SRI is working with DARPA to develop a framework by which an AI can manage ad hoc learning from experience.

Microsoft’s Ying Wang tells me her company has developed a technology called Full Duplex Voice Sense for general (open-domain) conversations. The Chinese chatbot Xiaoice has already made over 600,000 Full Duplex phone calls with humans, she says.

Not only will the scope of an AI’s knowledge grow broader in the future, but the speed at which it manages information–and human interactions–will grow, too.

“We don’t need to artificially rate limit our tech to keep it at a speed and dimensionality that resembles our own,” says Botnik’s creative director Michael Frederickson, “but we do need to pay much more attention than we have been to dimensions of validation other than just correctness and speed.”

“We’re absolutely starving for more tech designers who truly consider how a system ‘feels’ to a human user,” Frederickson says. “How fast do we process change, what’s a manageable amount of information to process at one time for most human brains?”

This line of reasoning moves quickly to a basic set of questions about how humans want to live with AIs. If we’re to coexist and collaborate with AIs, then roles will have to be defined. Will it resemble the relationship between the (very human) Kirk and the (very intelligent, less human) Spock? Or will it be some dynamic we simply can’t know yet?

Demonstrations like Duplex suggest that the time to start thinking about these issues is now.

According to Frederickson, “Far more work is needed on what interfaces and ‘views’ we’ll need if we’re going to design advanced AI that works in service or collaboration with us, rather than as a higher dimensional replacement.”

Fast Company
