Who cares about liberty? Julia Angwin and Trevor Paglen on our big fat privacy mess

This piece originally appeared in The End of Trust: McSweeney’s 54, a collection featuring over 30 writers investigating surveillance, technology, and privacy, with special advisers the Electronic Frontier Foundation. For more on these issues, see our series The Privacy Divide.

Amid the daily onslaught of demented headlines, two words are often found forming a drumbeat, connecting seemingly disparate news events: security and privacy. Social media is a story of us sacrificing our privacy, knowingly and unknowingly. This sacrifice is often framed as an individual choice rather than collective action. Breaches of banks, voting machines, hospitals, cities, and data companies like Equifax expose the lie of security–namely, that our information is ever safe from intruders. But artist Trevor Paglen and journalist Julia Angwin, two of the world’s sharpest minds on those subjects, would rather not use those words.

The Berlin-based Paglen, who holds a doctorate in geography from Berkeley, has built his art career on helping to make the unseen visible–using photography to draw our attention to the cables running under the Atlantic that constitute the internet’s infrastructure, for instance, or enlisting amateur trackers to help find American-classified satellites and other unknown space debris.

Angwin, an award-winning journalist and author, has revealed, for instance, the racism and bigotry that undergird Facebook algorithms allowing the company to sell demographics like “Jew hater” to willing advertisers. Last year, she left ProPublica to build a newsroom at the Markup to continue her work of holding tech companies accountable for their impact on society. [In a surprise development this week, Angwin announced she had been ousted from her role as editor-in-chief over disagreements with the publication’s executive director, leading many of the Markup’s editorial staff to resign in protest.]

Last year, I spoke with Trevor and Julia in New York City about their concerns, their work, and more.

Reyhan Harmanci: So you guys know each other.

Julia Angwin and Trevor Paglen: Yes.

RH: When did your paths cross?

JA: We met before Snowden, yeah, because I was writing my book on surveillance, and you were doing your awesome art.

TP: And you reached out to me and I was like, “You’re some crazy person,” and didn’t write back.

JA: I wrote to him, and I was like, “I want to use one of your art pieces on my website, and how much would it cost?” and he was like, “Whatevs, you’re not even cool enough.”

TP: That is one of the most embarrassing moments of my life. You know, I get a million of those emails every day.

JA: So do I! I ignore all of them, and then one day that person will be sitting right next to me, and I’ll be like, “Heh, sorry.”

The problem with email is that everyone in the world can reach you. It’s a little too much, right? It’s just not a sustainable situation. But yeah, we met sometime in the pre-Snowden era, which now seems like some sort of sepia-toned . . .

TP: . . . wagons and pioneers . . .

JA: Exactly. And what’s interesting about this world is that we’d been walking in the same paths. It’s been an interesting journey, just as a movement, right? It started off about individual rights and surveillance and the government, and very nicely moved into social justice, and I think that’s the right trajectory. Because the problem with the word formerly known as privacy is that it’s not an individual harm, it’s a collective harm.

TP: That’s right.

JA: And framing it around individuals and individual rights has actually led to, I think, a trivialization of the issues. So I’m really happy with the way the movement has grown to encompass a lot more social justice issues.

RH: I want to get into why you guys like the word trust better than surveillance or privacy, two words that I think are associated with both of you in different ways. Do you have any memories of the first time you came to understand that there was an apparatus that could be watching you?

JA: That’s an interesting question. I was the kid who thought my parents knew everything I was doing when I wasn’t with them, so I was afraid to break the rules. I was so obedient. My parents thought television was evil, so at my friends’ houses I would be like, “I can’t watch television.” And they’d be like, “But this is your one chance!”

And I have spent my whole life recovering from that level of obedience by trying to be as disobedient as possible. I did feel like my parents were an all-seeing entity, you know, and I was terrified of it. And I think that probably did shape some of my constant paranoia, perhaps.

RH: Right, yeah. I was a really good kid, too.

JA: Yeah, it’s no good, that goodness.

TP: I think I had the sense of growing up within structures that didn’t work for me and feeling like there was a deep injustice around that. Feeling like the world was set up to move you down certain paths and to enforce certain behaviors and norms that didn’t work for me, and realizing that the value of this word formerly known as privacy, otherwise known as liberty, plays not only at the scale of the individual, but also as a kind of public resource that allows for the possibility of, on one hand, experimentation, but then, on the other hand, things like civil liberties and self-representation.

You realize that in order to try to make the world a more equitable place, and a place where there’s more justice, you must have sectors of society where the rules can be flexible, can be contested and challenged. That’s why I think both of us aren’t interested in privacy as a concept so much as anonymity as a public resource. And I think that there’s a political aspect to that in terms of creating a space for people to exercise rights or demand rights that previously didn’t exist. The classic example is civil rights: There are a lot of people that broke the law. Same with feminism, same with ACT UP, same with the entire history of social justice movements.

And then as we see the intensification of sensing systems, whether that’s an NSA or a Google, we’re seeing those forms of state power and the power of capital encroaching on moments of our everyday lives that they were previously not able to reach. What that translates into if you’re a corporation is trying to extract money out of moments of life that you previously didn’t have access to, whether that’s my Google search history or the location data on my phone that tells you if I’m going to the gym or not. There is a set of de facto rights and liberties that arise from the fact that not every single action in your everyday life has economic or political consequences.

That is one of the things that I think is changing, and one of the things that’s at stake here. When you have corporations or political structures that are able to exercise power in places of our lives that they previously didn’t have access to, simply because they were inefficient, it adds up to a society that’s going to be far more conformist and far more rigid, and actually far more predatory.

JA: I agree.

What’s wrong with “privacy”–and “surveillance”

RH: What makes you guys dislike the word privacy?

JA: Well, when I think of privacy, I think of wanting to be alone. I mean, I think that’s the original meaning of it. But the truth is that the issues we’re discussing are not really about wanting to be solitary. They’re actually about wanting to be able to participate in the wonderful connectedness that the internet has brought to the world, but without giving up everything. So privacy, yes, I do want to protect my data and, really, my autonomy, but it doesn’t mean that I don’t want to interact with people. I think privacy just has a connotation of, “Well you’re just, like, antisocial,” and it’s actually the opposite.

TP: Yeah, absolutely. I think it’s right to articulate the collective good that comes through creating spaces in society that are not subject to the tentacles of capital or political power, you know? Similar to freedom of speech, not all of us have some big thing that we want to exercise with our freedom of speech, but we realize that it’s a collective good.

JA: Yeah, exactly. And so I think privacy feels very individual, and the harm that we’re talking about is really a societal harm. It’s not that it’s not a good proxy; I use that word all the time because it’s a shorthand and people know what I’m talking about, especially since I wrote a book with that in the title. But I do feel like it just isn’t quite right.

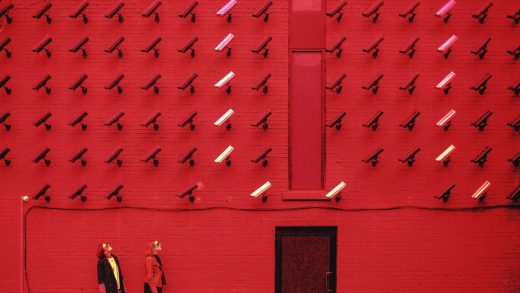

TP: The other side of privacy is the word surveillance, which certainly is a word that started becoming popular in the ’90s when surveillance cameras were installed around cities, and everyone was like, “Oh, you’re being watched by some guy in front of a bunch of screens.” But those screens are gone, and that guy disappeared and is unemployed now because all of that has been automated. The other aspect of surveillance, I think, is that it has been historically associated with forms of state power, and that’s certainly still true. But with that is surveillance capitalism now, which is how you monetize the ability to collect very intimate kinds of information.

JA: Yeah, I think surveillance is a slightly better word for what we’re talking about, but because of the connotation a lot of people see it only as government surveillance. That doesn’t encompass everything, and what it also doesn’t encompass is the effect of it. Surveillance is just the act of watching, but what has it done to the society, right? What does it do to you? What does it do when there’re no pockets where you can have dissident views, or experiment with self-presentation in different ways? What does that look like? That’s really just a form of social control, and, like you said, a move towards conformity. And so that is, I think, why surveillance itself is not quite an aggressive enough word to describe it.

TP: Because surveillance also implies a kind of passivity on the part of the person doing the surveilling, and that’s not true anymore; these are active systems.

[Commissioned by Creative Time Reports, 2013.]

RH: What does it mean to be an active system?

TP: Super simple example: You’re driving in your car and you make an illegal right turn on a red light, and a camera detects that, records your license plate, and issues a traffic ticket–and there’s no human in the loop. You can abstract that out to being denied employment based on some kind of algorithmic system that is doing keyword filtering on résumés. A lot of the work you’ve been doing, Julia, is on this.

JA: Yeah, I mean the thing is, the surveillance is just the beginning of the problem. It’s about, once the data is collected, what are they going to do with it? Increasingly, they’re making automated decisions about us.

And, weirdly, it seems as though the decisions they’re automating first are the most high-stakes human decisions. The criminal justice system is using these systems to predict how likely you are to commit a crime in the future. Or there are systems that are figuring out if you’re going to be hired just by scanning résumés. I think it’s so funny because the automated systems that most people use are probably maps, and those things suck. Like half the time they’re telling you to go to the wrong place, and yet we’re like, “I know! This stuff is so awesome; let’s use it for really high-stakes decisions about whether to put people in jail or not!”

TP: Or kill them!

RH: I think a lot of times people say, “Well, I’m not doing anything wrong. Take my information, sell me stuff. I am not making bombs in my basement.” But that’s only seemingly the tip of the iceberg.

JA: Well, that’s a very privileged position. Basically, the people who say that to me are white. The number of things that are illegal in this world is really high. We have a lot of laws, and there are certain parts of our community, mostly brown people, who are subject to much stronger enforcement of them. And so I think the feeling of, “I’m not doing anything wrong” is actually a canard. Because, of course, even if you were caught doing it, you would get off because that’s how our beautiful society is set up. But in fact, people are monitoring the Twitter feeds of Black Lives Matter, and all the Ferguson people, and trying to find anything to get them on. And that is what happens with surveillance–you can get somebody on anything.

When I was working on my book, I went to visit the Stasi archives in Berlin where they have the files that the Stasi kept on the citizens, and I did a public record request. I got a couple of files which are actually translated, and they’re on my website. What was so surprising to me was that the Stasi had so little, but they only needed some small thing. Like this one high school student, he skipped class a couple times or something, so they went to his house and were like, “You should become an informant, or else we’re going to punish you.” It was all about turning people into informants based on a tiny shred of something they had.

And I think that’s really what surveillance is: it’s about the chilling effect, the self-censorship, where you’re not willing to try anything risky. There could be very strong consequences, particularly if you’re part of a group that they want to oppress.

TP: I disagree in the sense that I think . . . Okay, Reyhan, you’re pregnant. Let’s say I have all your geolocation data, and I know that you visited a liquor store on a certain day. Do I sell that information to your health insurance provider? That’s going to have consequences for your ability to access health care, or mean you may have to pay higher premiums. I think it’s not just a kind of legalistic framework; it’s a framework about “What are places in which I am able to leverage information in order to extract money from you, as well as determine whether or not you become part of the criminal justice system?”

Not just China

RH: Sometimes I have trouble imagining that all of this is happening.

JA: It does seem unreal. In fact, what I find so shocking is that I felt like I had tried to paint the most pessimistic view I could in 2014, and it’s so much worse; it’s so much worse than I imagined. So, let’s look at one simple thing in this country, and then one in China.

In this country, these risk scores that I’ve been talking about are being used when you get arrested. They give you a score, 1 to 10, of how likely you are to commit a future crime, and that can determine your conditions of bail. There’s this huge movement across the nation to, instead of putting people in jail–the higher-risk people–just put them on electronic ankle bracelets. So essentially an automated decision forces somebody to literally have a GPS monitor on them at all times. That is something I actually didn’t think would happen this fast. Three years ago I would have said, “Nah, that’s a little dystopian.”

Now think about China: There’s a region that is very Muslim, and the Chinese government is trying to crack down because they think they’re all terrorists. I was just with this woman from Human Rights Watch who works in that region and aggregated all the information about which surveillance equipment they’d bought in that region and what they’re doing. And they built an automated program, which basically says if you go to the gas station too many times, or you buy fertilizer, or you do anything suspicious, something pops up that says you’re high-risk and they send you to reeducation camp. It’s an automated system to determine who gets sent into reeducation camp. And I was like, “Oh my god, I can’t believe this is happening right now; we’re not even talking about the future!”

TP: This Chinese citizen credit score system has been in the news a little bit. Basically the pilot programs, which are in operation in advance of the 2020 national rollout, are able to monitor everything that you do on social media, whether you jaywalk or pay bills late or talk critically about the government, collect all these data points, and assign you a citizen score. If you have a high one, you get, like, discounts on movie tickets, and it’s easier to get a travel visa, and if you have a lower one, you’re going to have a really bad time. It’s creating extreme enforcement mechanisms, and I think a lot of us here in the U.S. say, “Oh well, that’s this thing that can only happen in China because they have a different relationship between the state and the economy and a different conception of state power.” But the same exact things are happening here, they’re just taking a different shape because we live under a different flavor of capitalism than China does.

RH: Do Chinese citizens know about this?

JA: Yes.

RH: So these scores are not secret or subterranean . . .

JA: No, they’re meant to be an incentive system, right? It really is just a tool for social control; it’s a tool for conformity, and probably pretty effective, right? I mean, you get actual rewards; it’s very Pavlovian. You get the good things or you don’t. It’s just that governments have not had such granular tools before. There have been ways that the government can try to control citizens, but it’s hard trying to control a population! These tools of surveillance are really part of an apparatus of social control.

RH: How good is the data?

JA: That’s the thing: it doesn’t matter. First of all, the data all sucks, but it doesn’t matter. I was asking this analyst from Human Rights Watch why they don’t just put any random person in the detention camp, because it’s really just meant as a deterrent for everyone else to be scared. You don’t really need any science. But what they realize is that they’re actually trying to attain the good behavior, so even though it cost money to build this algorithm, which nobody’s testing the accuracy of, it creates better incentives. People will act a certain way.

So the data itself could be garbage, but people will think, “Oh, if I try to do good things, I will win. I can game the system.” It’s all about appealing to your illusion that you have some control. Which is actually the same thing as the Facebook privacy settings–it’s built to give you an illusion of control. You fiddle with your little dials, and you’re like, “I’m so locked down!” But you’re not! They’re going to suggest your therapist as your friend, and they’re going to out you to your parents as a gay person. You can’t control it!

RH: I think I have bad personal habits, because I have trouble believing that my phone is really giving information to Google or Facebook or whatever about where I am. I have trouble believing that on some level.

TP: It tells you!

RH: But that I matter enough, even as a consumer, to them.

JA: Well you don’t really matter to them; I mean, they need everybody. It’s more about the voraciousness. They need to be the one place that advertisers go, need to be able to say, “We know everything about everyone.” So it’s true, they’re not sitting around poring over the Reyhan file–unless you have somebody who hates you there who might want to pore over that file. But it’s more about market control. It’s similar to state control in the sense that, in order to win, you need to be a monopolist. That’s actually kind of just the rule of business. And so they–Google and Facebook–are in a race to the death to know everything about everyone.

That’s what they compete on, and that’s what their metric is. Because they go to the same advertisers and they say, “No, buy with us.” And the advertisers are like, “Well, can you get me the pregnant lady on the way to the liquor store? Because that’s what I want.” And whoever can deliver that, they’re in. They’re in a race to acquire data, just the way that oil companies race to get the different oil fields.

TP: The one thing I want to underline again is that we usually think about these as essentially advertising platforms. That’s not the long-term trajectory of them. I think about them as, like, extracting-money-out-of-your-life platforms.

JA: The current way that they extract the money is advertising, but they are going to turn into–are already turning into–intermediaries, gatekeepers. Which is the greatest business of all. “You can’t get anything unless you come through us.”

TP: Yeah, and health insurance, credit . . .

JA: That’s why they want to disrupt all those things–that’s what that means.

“Google will also then become your insurer”

RH: So how is that going to play out? Is it like when you sign up for a service and you can go type in your email or hit the Facebook button? Is that how it’s happening?

JA: Well, that’s how it’s happening right now. But you know Amazon announced healthcare, right? We’re going to see more of that. My husband and I were talking recently about self-driving cars. Google will also then become your insurer. So they’re going to need even more data about you, because they’re going to disrupt insurance and just provide it themselves. They know more about you than anyone, so why shouldn’t they pool the risk? There’s a lot of ways that their avenues are going to come in, and to be honest, they have a real competitive advantage because they have that pool of data already.

And then also it turns out, weirdly, that no one else seems to be able to build good software, so there is also this technological barrier to entry, which I haven’t quite understood. I think of it like Ford: First, when he invented the factory, he had a couple decades’ run of that being the thing. And then everyone built factories, and now you can just build a factory out of a box. So I think that that barrier to entry will disappear. But right now, the insurer companies–even if they had Google’s data set–wouldn’t actually be able to build the software sophisticated enough. They don’t have the AI technology to predict your risk. So these tech companies have two advantages, one of which might disappear.

TP: But the data collection is a massive advantage.

JA: It’s a massive advantage.

TP: I mean, a lot of these systems simply don’t work unless you have data.

JA: Yes, and sometimes they talk about it as their “training data,” which is all the data that they collect and then use to train their models. And their models, by definition, can make better predictions because they have more data to start with. So, you know, when you look at these companies as monopolies, some people think of it in terms of advertising revenue. I tend to think about it in terms of the training data. Maybe that is what needs to be broken up, or maybe it has to be a public resource, or something, because that’s the thing that makes them impossible to compete with.

RH: How good are they at using this data? A lot of times I’ve been very unimpressed by the end results.

JA: Well, that’s what happens with monopolies: they get lazy. It’s a sure sign of a monopoly, actually.

TP: Yeah, how good is your credit reporting agency?

JA: It’s garbage!

RH: Totally! It all seems like garbage, you know? Facebook is constantly tweaking what they show me, and it’s always wrong.

JA: Right. That’s why there was an announcement recently, like, “Oh, they’re going to change the news feed.” They don’t care what’s in the news feed. It doesn’t matter, you’re not the customer. They don’t want you to leave, but you also have nowhere to go, so . . .

RH: Right. And it’s like Hotel California: You’ll never actually be able to leave.

JA: Yes, correct!

RH: So they’re engaged in this massive long-term effort to be the gatekeepers between you and any service you need, any time you need to type in your name anywhere.

JA: I mean, really, the gatekeeper between you and your money. Like, whatever it takes to do that transaction.

RH: Or to get other people’s money to them.

JA: Yeah, sure, through you.

When surveillance capitalism meets criminal justice

TP: Let’s also not totally ignore the state, too, because records can be subpoenaed, often without a warrant. I think about Ferguson a lot, in terms of it being a predatory municipal system that is issuing, like, tens of thousands of arrest warrants for a population of, like, that many people, and the whole infrastructure is designed to just prey on the whole population. When you control those kinds of large infrastructures, that’s basically the model.

RH: And that is very powerful. How does the current administration’s thirst for privatization play into this sort of thing? Because I could imagine a situation where you want to have national ID cards, and who better to service that than Facebook?

JA: Oh, that effort is under way. There is a national working group that’s been going on for five years, actually, that’s looking for the private sector to develop ways to authenticate identities, and the tech companies are very involved in it. The thing about this administration that’s interesting is, because of their failure to staff any positions, there is some . . .

RH: A silver lining!

JA: Yeah, some of these efforts are actually stalled! So that actually might be a win, I don’t know. But the companies have always been interested in providing that digital identity.

TP: We don’t just have to look at the federal level. You can look at the municipal level, in terms of cities partnering with corporations to issue traffic tickets. Or look at Google in schools. I do think, with the construction of these infrastructures, there is a kind of de facto privatization that’s happening. Apple or Google will go to municipalities; they will provide computers to your schools, but they’re going to collect all the data from all the students using it, et cetera. Then they’ll use that data to in other ways partner with cities to charge for services that would previously have been public services.

Again, there are lots of examples of this with policing–take Vigilant Solutions. This company that does license plate reading and location data will say, “Okay, we have this database of license plates, and we’re going to attach this to your municipal database so you can use our software to identify where all those people are who owe you money, people who have arrest warrants and outstanding court fees. And we’re going to collect a service charge on that.” That’s the business model. I think that’s a vision of the future municipality in every sector.

JA: You know, that’s such a great example because Vigilant really is . . . basically, it’s repo men. So essentially it starts as predatory. All these repo men are already driving around the cities all night, looking for people’s cars that the dealers are repossessing because people haven’t paid. Then they realized, “Oh, the government would actually really love a national database of everybody’s license plates,” so they started scanning with these automated license plate readers on their cars.

So the repo men across the country go out every night–I think it was Baltimore where Vigilant’s competitor had forty cars going out every night–taking photographs of where every car is parked and putting them in a database. And then they build these across the nation, and they’re like, “Oh, DHS, do you want to know where a car is?” and then they bid for a contract. And so you add the government layer and then you add back the predatory piece of it, which is . . . really spectacular.

RH: But if you talk to an executive at Vigilant, they would be like, “What’s the problem? We’re just providing a kind of service.”

JA: Yeah, I did spend a bunch of time with their competitors based in Chicago, met a bunch of very nice repo men who said, “Look, we’re doing the Lord’s work. I mean, these are bad people, and we have to catch them.” And then I said, “Can you look up my car?” and boom, they pushed a button, and there it was, right on the street in front of my house! I was like, holy shit.

TP: Wow.

JA: That is so creepy.

RH: That’s crazy! That’s the kind of thing where, again, I’d be like, “Nobody knows where my car is; I don’t know where my car is! They’re not fucking finding it,” you know?

JA: Right.

TP: But you could take it further. Think about the next generation of Ford cars. They know exactly where your car is all the time, so now Ford becomes a big data company providing geolocation data.

RH: And you’re not even going to own your car since its software is now property of the carmaker.

TP: It goes back to the thing about whether you’re going to liquor stores, whether you’re speeding. Tomorrow, Ford could make a program for law enforcement that says, “Every time somebody speeds, whatever jurisdiction you’re in, you can issue them a ticket if you sign up for our . . . ” You know? I mean, this is a full traffic enforcement partnership program.

JA: And one thing I just want to say, because I have to raise the issue of due process at least once during any conversation like this. My husband and I fight about this all the time: he’s like, “I want them to know I’m driving safely because I’m safe, and I’m happy to have the GPS in the car, and I’m awesome, and I’m going to benefit from this system.” But the problem is, when you think about red-light cameras and automated tickets, which are already becoming pretty pervasive, it’s really a due process issue. How do you fight it? It would probably be really hard to do. Like, are we going to have to install dashboard cameras surveilling ourselves in order to have some sort of protection against the state surveilling us, or whoever is surveilling us?

I think a lot of the issues raised by this type of thing are really about, and I hate to say it, but our individual rights. Like about the fact that you can’t fight these systems.

TP: That’s true. There’s a lot of de facto rights we’ve had because systems of power are inefficient. Using a framework of criminal justice where this is the law, this is the speeding limit, and if you break that you’re breaking the law–that’s not actually how we’ve historically lived.

JA: Right.

TP: And that is very easy to change with these kinds of systems.

RH: Yeah, because then if you’re looking to target somebody, that’s where the data’s weaponized. And also, there’s so much rank incompetence in criminal justice, and in all these human systems. Maybe its saving grace has been that humans can only process so much stuff at any one time.

TP: I think the funny thing is that it’s been police unions most vocally opposed to installing things like red-light cameras because they want that inefficiency.

RH: Yeah, and they say, “We’re making judgment calls.”

JA: I mean there was this crazy story–you know the truckers, right? The truckers are being surveilled heavily by their employers. A lot of them are freelance, but there are federal rules about how many hours you can drive. It’s one of these classic examples–sort of like welfare, where you can’t actually eat enough with the amount of money they give you, but it’s illegal to work extra.

So similarly, for truckers, the number of hours you need to drive to make money is more than the hours you are allowed to drive. And to ensure the truckers don’t exceed these miles, they’ve added all these GPS monitors to the trucks, and the truckers are involved in these enormous countersurveillance strategies of, like, rolling backwards for miles, and doing all this crazy stuff. And there was this amazing thing one day at Newark, a year or two ago, where the control tower lost contact with the planes at Newark Airport, and it turned out it was because of a trucker. He was driving by the airport and he had such strong countersurveillance for his GPS monitor that he interrupted the air traffic!

TP: Wow!

RH: Wild. God, a decade ago I was on BART, and I never do this, but I was talking to the guy next to me and he was a truck driver. He was telling me about the surveillance of it at the time. He was like, “This has already been going on.” This was 10 years ago! He said the first kinds of self-driving cars are going to be trucks.

JA: Yes, that’s right, they are going to be trucks.

RH: I just remember getting off BART and being like, “What if that was an oracle or something?”

JA: The truckers are bringing us the news from the future.

How we outsourced trust to companies we don’t trust

RH: So this conversation is being framed as “the end of trust,” but did we have trust?

JA: I’m not sure I agree with “the end of trust.” I’m more into viewing this as an outsourcing of trust right now. So essentially I think that in this endless quest for efficiency and control we’ve chosen–and sometimes I think it’s a good thing–to trust the data over human interactions. We’re in this huge debate, for instance, about fake news. We’ve decided it’s all Facebook’s problem. They need to determine what is real and what is not. Why did we outsource the trust of all of what’s true in the world, all facts, to Facebook? Or to Google, to the news algorithm? It doesn’t make any sense.

And so in the aggregation of data, we have also somehow given away a lot of our metrics of trust, and that’s partly because these companies have so much data, and they make such a strong case that they can do it with the math, and they’re going to win it with the AI, they’re going to bring us the perfect predictions.

What I’m really concerned about is the fact that we’ve broken the human systems of trust that we understood. Studies show that when you look at a person, you establish trust with them, like, in a millisecond. And you can tell people who are not trustworthy right away. You can’t even articulate it; you just know. But online and through data, we just don’t have those systems, so it’s really easy to create fake stuff online, and we don’t know how to build a trustworthy system. That’s what is most interesting to me about the moment we’re in.

I think we’re at a moment where we could reclaim it. We could say, “This is what journalism is, and the rest of that stuff is not.” Let’s affirmatively declare what’s trustworthy. We’ve always had information gatekeepers, right? Teachers would say, “Read this encyclopedia, that’s where the facts are.” Now my kids come home and just look things up on the internet. Well, that’s not the right way to do it. I think we’re at a period where we haven’t figured out how to establish trust online, and we’ve outsourced it to these big companies who don’t have our interests at heart.

RH: In terms of “the end of trust,” what does feel threatening or worth creating collective action around?

TP: For me, it’s the ability of infrastructures, whether they’re private or policing, to get access to moments of your everyday life far beyond what they were capable of 20 years ago. It’s not a change in kind, it’s just the ability for capital and the police to literally colonize life in much more expansive ways.

RH: Both of you guys are engaged in work that tries to make this stuff visible–and it’s very hard. The companies don’t want you to see it. A lot of your work, Trevor, has been about the visceral stuff, and your work, Julia, has been about saying, “We’ve tested these algorithms, we can show you how Facebook chooses to show a 25-year-old one job ad and a 55-year-old another.” What do you wish you could show people?

TP: There’re very clear limits on the politics of making these things visible. Yeah, sure, it’s step one, and it’s helpful because it develops a vocabulary that you can use to have a conversation. The work that you do, Julia, creates a vocabulary for us to be able to talk about the relationship between Facebook and due process, a conversation we didn’t really have the vocabulary to hold intelligibly before.

I think it’s just one part of a much bigger project, but doesn’t in and of itself do anything. That’s why we have to develop a different sense of civics, really. A more conscious sense of which parts of our everyday life we want to subject to these kinds of infrastructures, and which we don’t. There is good stuff that you can do, for example, with artificial intelligence, like encouraging energy efficiency. So I think we need to say, “Okay, these are things that we want to optimize, and these are things that we don’t want to optimize.” It shouldn’t just be up to capital, and up to the police, and up to the national security state to decide what those optimization criteria are going to be.

What’s wrong with AI

JA: I strongly believe that in order to solve a problem, you have to diagnose it, and that we’re still in the diagnosis phase of this. If you think about the turn of the century and industrialization, we had, I don’t know, 30 years of child labor, unlimited work hours, terrible working conditions, and it took a lot of journalist muckraking and advocacy to diagnose the problem and have some understanding of what it was, and then the activism to get laws changed.

I feel like we’re in a second industrialization of data information. I think some call it the second machine age. We’re in the phase of just waking up from the heady excitement and euphoria of having access to technology at our fingertips at all times. That’s really been our last 20 years, that euphoria, and now we’re like, “Whoa, looks like there’re some downsides.” I see my role as trying to make as clear as possible what the downsides are, and diagnosing them really accurately so that they can be solvable. That’s hard work, and lots more people need to be doing it. It’s increasingly becoming a field, but I don’t think we’re all the way there.

One thing we really haven’t tackled: It sounds really trite, but we haven’t talked about brainwashing. The truth is that humans are really susceptible. We’ve never before been in a world where people could be exposed to every horrible idea in the universe. It looks like, from what you see out there, people are pretty easily convinced by pretty idiotic stuff, and we don’t know enough about that, that brain psychology. What’s interesting is the tech companies have been hiring up some of the best people in that field, and so it’s also a little disturbing that they may know more than the academic literature that’s presented.

RH: That’s crazy.

JA: I know, I hate saying brainwashing. There’s probably a better word for it. Cognitive persuasion or something.

RH: Or just understanding humans as animals, and that we’re getting these animal signals.

JA: Yeah, right. I mean, we have those behavioral economics. That’s led a little bit down the road, but they just haven’t gone as far as what you see with the spiral of the recommendation engines, for instance, on Facebook, where you search for one thing related to vaccines and you’re led down every single conspiracy theory of anti-vaxx and chemtrails, and you end up drinking raw water out of the sewer at the end of it.

RH: Right, you spend $40 at a Rainbow Grocery in San Francisco buying your raw water.

JA: It’s an amazing pathway. People do get radicalized through these types of things, and we just don’t understand enough about countermeasures.

RH: Yeah, that’s really scary. Do you guys interact with young people? Do they give you any sense of hope?

JA: My daughter’s awesome. She’s 13. I actually have great hope for the youth because she really only talks on Snapchat, so she’s completely into ephemeral everything. And her curation of her public personality is so aggressive and so amazing. She has, like, five pictures on her Instagram, heavily curated, and she has, of course, five different Instagrams for different rings of friendship. It’s pretty impressive! I mean it takes a lot of time, and I’m sad for her that she has to spend that time, because at that age literally all I wore was pink and turquoise for two straight years and there’s no documentation of it. Like, nothing. It was the height of Esprit. And I can just tell you that, and you’ll never see it. She will always have that trail, which is a bummer, but I also appreciate that she’s aware of it and she knows how to manage it even though it’s a sad task, I think.

RH: Yeah. I’m always fascinated by teenagers’ computer hygiene habits.

JA: I think they’re better than adults’.

RH: I’m curious about AI stuff. There’s a lot of hype around AI, but all I ever really see of it are misshapen pictures on Reddit or something. I’m like, “We’re training them to recognize pictures of dogs,” you know? What’s the end goal there? Is this technology going to powerfully shape our future in a way that is scary and hidden? Or is it a lot of work, a lot of money, and a lot of time for a result that seems, frankly, not that impressive?

TP: Yeah, there’re misshapen pictures on Facebook and Reddit and that sort of thing–that’s people like us playing with it. That’s not actually what’s going on under the hood. That’s not what’s driving these companies.

JA: I want us to back up a little bit because AI has been defined too narrowly. AI used to just mean automated decision-making. Then recently it’s been defined mostly as machine learning. Machine learning is in its early stages, so you see that it makes mistakes, like Google characterizing black faces as gorillas because they had shitty [racially biased] training data. But in fact the threat of AI is, to me, broader than just the machine learning piece of it. The threat is really this idea of giving over trust and decision-making to programs that we’ve put some rules into and just let loose. And when we put those rules in, we put our biases in. So essentially, we’ve automated our biases and made them inescapable. You have no due process anymore to fight against those biases.

One thing that’s really important is we’re moving into a world where some people are judged by machines, and that’s the poor and the most vulnerable, and some people are judged by humans, and that’s the rich. And so essentially only some people are sorted by AI–or by whatever we call it: algorithms, automated decisions–and then other people have the fast track. It’s like the TSA PreCheck.

TP: An article came out reporting that Tesco, the UK’s largest private employer, uses AI as much as possible in its hiring process. Whereas this afternoon I had to be part of a committee hiring the new director of our organization: it’s all people.

JA: Yes, right, exactly. It’s almost like two separate worlds. And that’s what I think is most disturbing. I have written about the biases within AI’s technology, but the thing is, those biases are only within the pool of people who are being judged by it, which is already a subset.

TP: My friend had a funny quote. We were talking about this and she said, “The singularity already happened: It’s called neoliberalism.”

JA: Oh yeah, that’s the greatest quote of all time. I will say this, just because I want to be on the record about it: I really have no patience for singularity discussions because what they do–just to be really explicit about it–is move the ball over here, so that you’re not allowed to be concerned about anything that’s happening right now in real life. They’re like, “You have to wait until the robots murder humans to be worried, and you’re not allowed to be worried about what’s happening right now with welfare distribution, and the sorting of the ‘poor’ from the ‘deserving poor’”–which is really what all of those automated systems are: this idea that there are some people who are deserving poor and some people who aren’t. Morally, that is already a false construct, but then we’ve automated those decisions so they’re incontestable.

RH: So you could go through the whole system and never see a human, but all the human biases have already been explicitly embedded.

JA: Yeah, there’s a great book just out on that called Automating Inequality by Virginia Eubanks that I highly recommend.

Personal OPSEC

RH: Can you two talk a bit about your personal security hygiene?

TP: Well, people are always like, “We choose to give our data to Google.” No, we don’t. If you want to have your job, if you want to have my job, you have to use these devices. I mean, it’s just part of living in society, right?

JA: Correct.

TP: I have somebody who works for me who chooses not to, and it’s a complete, utter headache where literally the whole studio has to conform to this one person who won’t have a smartphone. It’s worth it because they’re really talented, but at the same time, at any other company, forget it. These are such a part of our everyday infrastructures that you don’t have the individual capacity to choose to use them or not. Even if you don’t use Facebook, they still have your stuff.

JA: Yeah, I agree. I don’t want to give the impression that there is some choice. The reason I wrote my book from the first-person account of trying to opt out of all these things was to show that it didn’t work. And I’m not sure everybody got that picture, so I want to just state it really clearly. I do small measures here and there around the corners, but, no, I live in the world, and that’s what the price of living in the world is right now. I want to end on the note that this is a collective problem that you can’t solve individually.

RH: Right, like, downloading Signal is great, but it’s not going to solve the larger issues.

TP: Exactly. I think a really important takeaway from this conversation is that these issues have historically been articulated at the scale of the individual, and that makes no sense with the current infrastructures we’re living in.

JA: Right. But do download Signal.
