YouTube Has Finally Started Hiding Extremist Videos, Even If It Won’t Delete Them All

Hateful people and groups have learned to be subtle when they post online. Not every white supremacist openly advocates lynchings, nor does every radical cleric call for suicide bombings. But they do stoke resentment toward groups of people with content that is offensive and may nudge others toward violence.

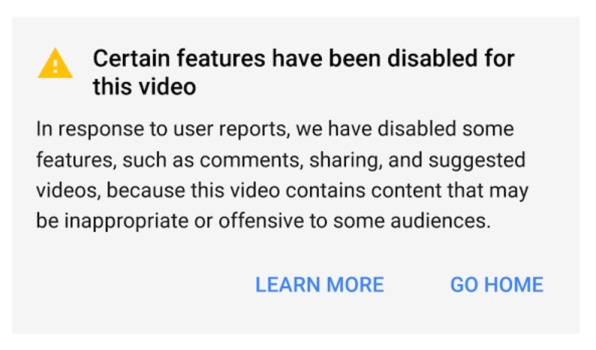

That’s what YouTube has concluded after months of consulting with experts on extremism, such as the Anti-Defamation League. And today the video platform has introduced a new way to deal with videos that brush against, but don’t quite cross, the line to merit an outright ban—hiding them behind a warning page, forbidding ads, disabling comments and likes, and keeping them out of “Up next” suggested video lists.

YouTube announced in a blog post on August 1 that these measures were coming, but it just flipped the switch on them today, sources at YouTube confirmed to Fast Company. It’s the social network’s latest crack at pushing down extremist and offensive content without crushing freedom of expression, sources say.

YouTube isn’t announcing what specific users, channels, or videos will get the new treatment, but we’ll be keeping an eye out. YouTube did provide examples of the kinds of videos likely to get flagged, however. Holocaust denial, for instance, is patently false and inherently hateful. But in YouTube’s view, it doesn’t necessarily cross the line into banned actions such as targeting individuals or inciting violence.

Critics may well disagree with that. “When you look at the material, it’s put in a context that hides, or shrouds itself, under freedom of speech. But basically it’s extreme hate speech that’s tied to a global effort,” says Rabbi Abraham Cooper of the Simon Wiesenthal Center, citing Iran’s use of Holocaust denial as propaganda.

It seems YouTube decided to err on the side of free expression—which jibes with its decision in May to promote former ACLU attorney Juniper Downs to head of public policy and government relations. (YouTube does ban Holocaust denial in countries like Germany where it is illegal, though.) YouTube, it appears, decided that preaching any kind of supremacy—whites over blacks, Christians over Jews, Muslims over Christians, etc.—isn’t enough on its own to get a ban based on inciting violence or promoting terrorism.

YouTube isn’t making all these calls on its own. Over the years it has recruited a group of nongovernmental organizations, including the Simon Wiesenthal Center, the Anti-Defamation League, and the Southern Poverty Law Center (SPLC), as well as government agencies such as the U.K.’s Counter Terrorism Internet Referral Unit, into a “Trusted Flagger” program. The program had 63 member organizations at the beginning of the year and has taken on more than half of the 50 new members that YouTube says it planned to add in 2017. We pinged a few of the organizations today. Key staffers at the SPLC were out, but the press office sent an email saying, “[W]e have been invited to be a trusted flagger and are very pleased with Google’s new policies.”

“This is a good development that clearly marks content as controversial, but does not limit anyone’s freedom of expression,” wrote Brittan Heller, the ADL’s director of technology and society, in an email. “It places content from extremists where it belongs: in the extreme corners of the platform. We think YouTube has struck the right balance.”

The Wiesenthal Center is less sanguine. “We were approached and agreed and are happy to have that designation [as a Trusted Flagger],” says Cooper, who adds that his organization is nevertheless “not happy campers.” Cooper criticizes not so much YouTube’s policies as the amount of effort it expends to uphold them.

Many Opinions

YouTube is working from several lists of extremist or hate groups. Some are banned outright, like the 61 on the U.S. State Department’s Foreign Terrorist Organization list (with members including Al Qaeda, Basque Fatherland and Liberty, Hamas, and ISIS). Other lists present judgment calls for YouTube. The SPLC’s current list of 917 hate groups in the U.S. includes some usual suspects, like avowed white supremacists, black separatists, Nazis, and KKK chapters.

But some choices are controversial, such as including reformed Islamist Maajid Nawaz (author of Radical: My Journey Out Of Islamist Extremism) in the SPLC’s Field Guide to Anti-Muslim Extremists. (Also in the Field Guide is activist and author Ayaan Hirsi Ali, who just wrote a stinging critique of the SPLC in a New York Times op-ed.)

Regardless of which authority flags a video, YouTube says that it will review each one independently and give the poster a chance to appeal the decision. Cooper, however, doubts that YouTube has the resources to make some of the tough calls, and says that the Google-owned internet giant should make better use of its expert collaborators. “We’re not saying that every company should become historians, up on every new symbol of extremist groups left and right, but for the teams of people they put in place, we’re happy to train them.”

YouTube has to walk a line not only between free speech and hate speech, but also between different definitions of what qualifies as hate speech, and of who qualifies as a perpetrator of hate. One thing is clear: Like many tech companies, YouTube is upping its efforts in responding to an online explosion of both terror and hate speech. Whether it’s doing enough is debatable, and it’s still too early to tell. But Cooper, whose organization issues an annual report card for tech companies (YouTube currently earns a B- for terrorism and a D for hate), offers a way to judge success. “When we see the bad actors leaving YouTube for other ways of getting their videos up, then we’ll know that they deserve a stellar grade.”

Fast Company
