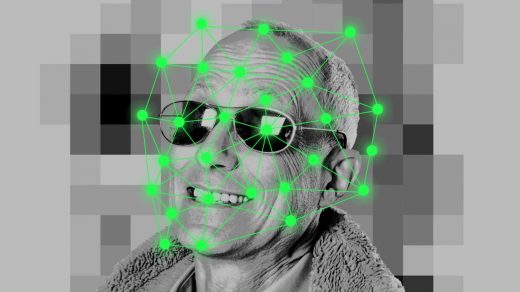

AI celebrity deepfakes are over. Hoaxes as entertainment are just beginning

Last month, a photo of Pope Francis wearing a Balenciaga parka shocked the world. The image was posted to Reddit by a user (who was high on magic mushrooms) and, though it looked real enough to fool a large cross-section of Twitter, it was actually created by Midjourney, a generative-AI tool that turns text into images.

But the Balenciaga pope is only the tip of the celebrity deepfake iceberg. People have been using a whole suite of AI tools, including a sophisticated bit of audio-cloning software, to make famous figures say and do whatever they want. And these memes (if you can even call them that anymore) are both higher-tech than anything we’ve seen before and part of a decades-long tradition, going all the way back to the first internet users who powered up Photoshop to make memes about former President George W. Bush getting a shoe thrown at his head.

But as these tools become more sophisticated, the line between art project, meme, political cartoon, and elaborate hoax blurs more and more. They also push us further into a brave new world where all of those things essentially become the same thing, no matter how much confusion it creates.

The users making these videos rarely explain how they did it, but the majority are using an app called ElevenLabs. It analyzes a chunk of audio and then creates an AI model that sounds similar.

For the most part, people are using it to goof off. Here’s President Joe Biden apologizing for not being able to visit East Palestine, Ohio, because he’s stuck on the mysterious island from the TV show Lost. Here’s podcaster Joe Rogan explaining that he went to the beach that makes you old from the film Old. Here’s Biden and former presidents Donald Trump and Barack Obama arguing for almost six minutes about how to rank the best Zelda games. And, probably most impressive of all, here are Midjourney images of Harry Potter characters dressed up like Balenciaga models that were then animated with another AI and given actual dialogue with yet another. I won’t link to it, but there’s also a very NSFW—and rather convincing—Tucker Carlson deepfake going around.

There’s even one TikTok channel called Thrashachusetts that uses ElevenLabs’s AI to tell interconnected stories about U.S. presidents as if they were punks in a local DIY music scene. Thrashachusetts’s creator, who asked to only be referred to as Paul, tells Fast Company that he had seen an AI video of presidents playing the card game Yu-Gi-Oh! and figured he might be able to make something similar about punk music.

“I started thinking of all of this stupid, mundane scene drama, or scene politics,” he says. “And I was like, ‘How can I tell that in a way that is funny and is relatable using the most important decision-makers in the world?’”

The Thrashachusetts videos aren’t believable deepfakes in any sense. The videos feature presidents’ voices arguing about their favorite Hatebreed album and Paul photoshops different outfits onto the characters to reflect the particular punk subculture they’re affiliated with—which makes the whole project weirdly reminiscent of early JibJab videos from 15 years ago, when comedians doing impressions of presidents would sing wacky songs and go incredibly viral in email forwards.

Paul’s personal inspiration was old Machinima videos. But unlike those, or JibJab, his videos are built on AI voice cloning, and as cartoonish as they are, he says he’s still worried about how the same technology behind his TikTok videos could be used to abuse others. “Generally, the average person has a better grasp of what’s real and what’s not compared to five years ago,” he says. “So, that’s a little bit comforting. But how long does it last?”

Max Rizzuto, a research associate at the Atlantic Council’s Digital Forensic Research Lab, says that part of the problem with separating out the fun and benign uses of deepfake technology from the malicious ones is that there isn’t much of an academic distinction: The same tools are behind both.

“All of the coverage dating back to the first examples of these forms of manipulation has been around alarmism, which is not necessarily, you know, the fault of the researchers,” Rizzuto says. “That’s kind of framed what is allowable discourse for this kind of technology. Everybody kind of needs to be careful about it.”

The single most prevalent malicious use case for deepfake technology is non-consensual sexual material. It has never been easier to render an image, or now a video with audio, of a person performing a sexual act that never happened. But there are other, more complicated concerns as well.

In late January, when ElevenLabs first launched, 4chan users found it and started mass-producing deeply offensive audio deepfakes. One of them, which depicted Biden going on a transphobic tirade, was then tweeted supportively by a member of Kenya’s parliament. Then, in February, a reporter for Vice used ElevenLabs’s audio-cloning AI tool to break into his own bank account.

There are also always going to be some people who choose to believe AI-generated art, even if it’s obviously fake.

“I don’t have a lot of faith in people’s capacity to disassociate, or separate, the message or the fake image, from the possibility that it’s a fake image,” Rizzuto says. “Even when it’s disproven, the emotions that they experienced are not nullified.”

Case in point: Former President Donald Trump was not arrested in the middle of the street by NYPD officers as if he were in an action movie, though many of his supporters have been using AI to imagine just that.

One of the most prolific creators of these deepfake videos is a TV writer named Zach Silberberg. He made the video about Biden getting trapped on the island from Lost, as well as the video about Rogan going to the beach that makes you old.

Silberberg tells Fast Company that he usually isn’t worried about duping anyone with the videos he makes because they’re pretty outlandish. After he made the Biden video, though, he says he started getting messages from Trump supporters who may not have thought it was totally real, but didn’t seem to think it was totally fake either.

“People latched on to it, and I feel like they didn’t even watch the full video,” he says. “They just saw the first two seconds and were like, ‘Yeah, fuck that.’”

But Silberberg also thinks that this current trend of using AI to make famous people say funny things might already be over, at least as far as the meme economy goes. In the world of AI, trends happen at a speed we’ve never really experienced before. What might be the most sophisticated thing in the entire world one day is boring the next.

“I can say already that I think this wave has died,” he says. “There was a period where there was a boom for it. Where I was seeing a lot of presidents gaming videos. There was one where it was, like, Biden and Trump playing Minecraft with the Joker. There were all of these AI-generated conversation pieces.”

Silberberg has concluded that users are already bored of that sort of thing. He actually sees the Balenciaga pope as a possible sign of where we’re going next—the hoax as entertainment.

“The Pope wearing a big puffy coat went super viral for no reason. Because people thought it was real. And that’s the scary thing,” he says. “I wish that the people using the technology would stop making something that was obviously fake, and it’s just funny, but that’s not what’s going to happen.”
