Facebook Says Its New AI Research Computers Are Twice As Powerful As Anything Before

Code-named “Big Sur,” the new computing architecture is the result of 18 months of design work with partners like Quanta and Nvidia.

December 10, 2015

Facebook said today that it has developed a new computing system aimed at artificial intelligence research that is twice as fast and twice as efficient as anything previously available.

These days, machine learning and artificial intelligence are, hand in hand, becoming the lifeblood of vast new applications throughout the tech industry and research communities. But even as that dynamic has been driven largely by computers that are more powerful and more efficient, the industry is reaching the limits of what those computers can do.

Increasingly, Facebook is building parts of its business on artificial intelligence, and the social networking giant’s ability to build and train advanced AI models has been tied to the power of the hardware it uses.

Among its recent AI initiatives have been efforts to make Facebook easier to use for the blind and to incorporate artificial intelligence into everyday users’ tasks.

Until now, the company has relied on off-the-shelf computers, but as its AI and machine-learning needs have grown, it has decided that it can no longer depend on others’ hardware. That’s why Facebook designed the “next-generation” computing hardware it has code-named “Big Sur.”

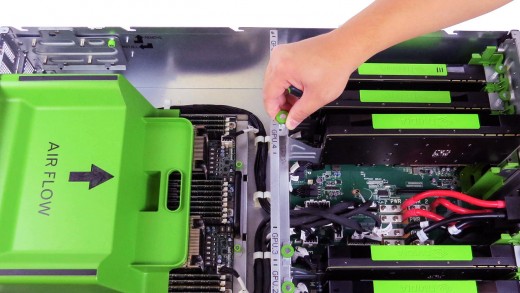

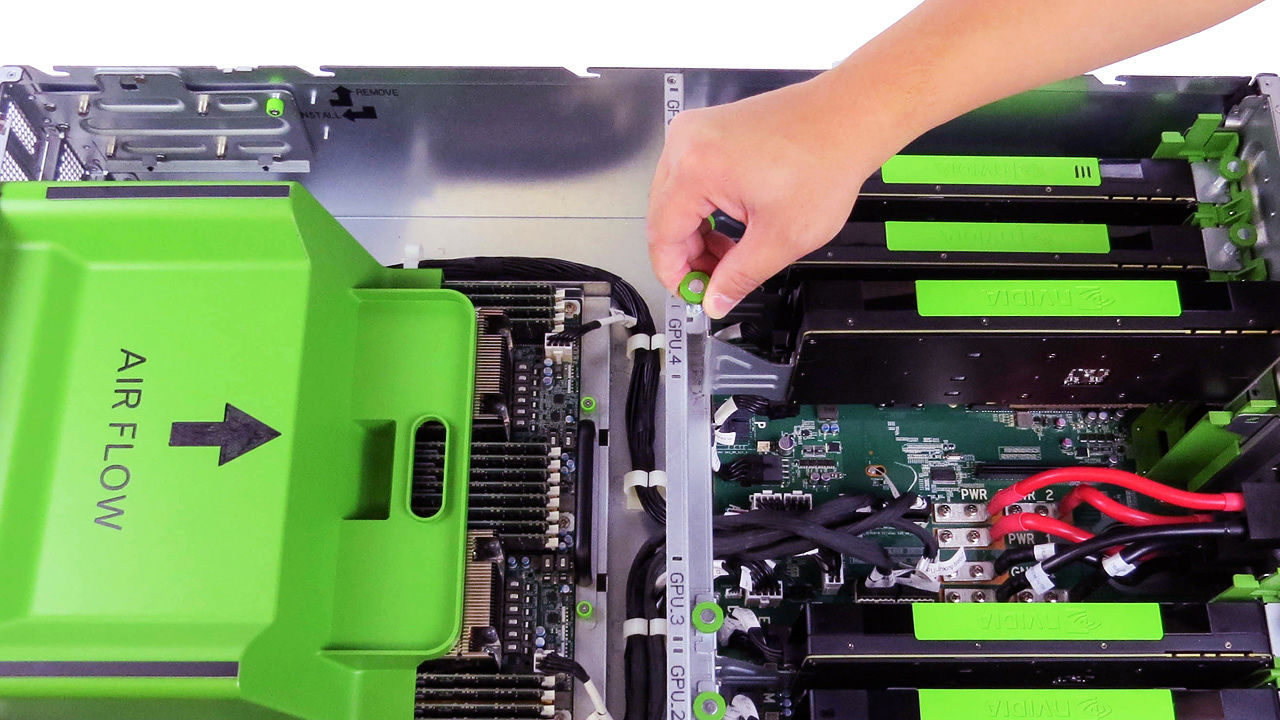

The new Open Rack-compatible system, designed over 18 months with partners like Quanta and processor manufacturers like Nvidia, features eight graphics processing units (GPUs) of up to 300 watts apiece. Facebook says the new system is twice as fast as its previous hardware, delivering a 100% improvement in neural network training efficiency and the ability to explore neural networks twice as large as before.

“Distributing training across eight GPUs,” Facebook AI Research (FAIR) engineering director Serkan Piantino wrote in a blog post, “allows us to scale the size and speed of our networks by another factor of two.”
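Facebook’s announcement does not describe the training software that runs on Big Sur. The sketch below is a hypothetical illustration in Python, assuming the common synchronous data-parallel scheme: each of eight devices computes a gradient on an equal slice of a training batch, and the gradients are averaged before every update. The toy linear model and the names `grad_mse`, `shard`, and `data_parallel_step` are invented for illustration and are not Facebook code.

```python
# Hypothetical sketch of synchronous data-parallel training (not Facebook code).
# The eight "devices" are simulated with plain Python lists.

NUM_DEVICES = 8  # assumption: one data shard per GPU, as in an 8-GPU Big Sur server


def grad_mse(w, b, xs, ys):
    """Gradient of mean squared error for the toy model y = w * x + b."""
    n = len(xs)
    gw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    gb = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return gw, gb


def shard(data, k):
    """Split a list into k equal, contiguous shards (len(data) must be divisible by k)."""
    size = len(data) // k
    return [data[i * size:(i + 1) * size] for i in range(k)]


def data_parallel_step(w, b, xs, ys, lr=0.01, devices=NUM_DEVICES):
    """One training step: each device computes a gradient on its own shard,
    then the per-device gradients are averaged (an "all-reduce") before the update."""
    grads = [grad_mse(w, b, sx, sy)
             for sx, sy in zip(shard(xs, devices), shard(ys, devices))]
    gw = sum(g[0] for g in grads) / devices
    gb = sum(g[1] for g in grads) / devices
    return w - lr * gw, b - lr * gb


if __name__ == "__main__":
    # Synthetic batch of 64 examples drawn from y = 3x + 1.
    xs = [i / 64 for i in range(64)]
    ys = [3 * x + 1 for x in xs]
    w, b = 0.0, 0.0
    for _ in range(2000):
        w, b = data_parallel_step(w, b, xs, ys, lr=0.5)
    print(round(w, 3), round(b, 3))
```

Because the shards are equal in size, the averaged gradient matches the full-batch gradient, so spreading a batch over more devices changes how the work is divided rather than what the model learns. Real multi-GPU systems also pay communication and synchronization costs that this toy omits.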

Piantino added in the post that Facebook plans to open-source the Big Sur hardware and will submit the design materials to the Open Compute Project (OCP) in a bid to “make it a lot easier for AI researchers to share techniques and technologies” and for others to help improve the OCP.

“The bigger you make these neural networks, the better they work, and the better you train them,” said Yann LeCun, the director of FAIR, during a press conference call earlier this month. “Very quickly, we hit the limits of limited memory.”

On the call, Piantino said that one of the main design specs for Big Sur machines was that they be “power efficient, both in terms of the power they use and the power needed to cool them in our data centers.”

For Facebook, it is important to have these bigger, more powerful, and more efficient computers. That’s because, as Piantino told journalists, “our capabilities continue to grow, and with each new capability, whether it’s computer vision or speech, our models get more expensive to run, incrementally, every time.”

Also, he said, as the FAIR team has moved from research to capability, it has seen product groups from across Facebook reach out about collaborations.

As LeCun put it, “part of our job at [FAIR] is to put ourselves out of a job.”

Fast Company
