Why Facebook’s Trending Topics Spam Problem Can’t Be Solved With Algorithms

When Facebook unceremoniously fired the team of human editors responsible for curating its Trending section, surely it didn’t expect to be betrayed by its algorithm so quickly. Facebook laid off all the Trending curators on Friday, under the guise of wanting to reduce bias and “make the product more automated.” The Trending team is being repopulated with engineers, who will oversee the algorithms charged with sussing out Trending content.

It took just two days for this approach to backfire: On Sunday, “Megyn Kelly” was trending on Facebook, backed by a story claiming she had been fired from Fox News and that she supported Hillary Clinton. The story turned out to be false.

This gaffe flies in the face of Facebook’s claim that the process of choosing Trending topics has not actually changed. The Trending curators were tasked with writing descriptions and summaries for each topic. According to Facebook, automating Trending eliminated only that step, which changed how topics appear in the Trending module. Like the recently departed editorial team, the engineers now monitoring Trending can approve or dismiss topics. That didn’t stop the news about Kelly from circulating, however, because an inaccurate post with enough traction would technically meet the criteria for inclusion in Trending.

As Facebook told CBS News:

We also want to share a bit more context on how it happened. A topic is eligible for Trending if it meets the criteria for being a real-world news event and there are a sufficient number of relevant articles and posts about that topic. Over the weekend, this topic met those conditions and the Trending review team accepted it thinking it was a real-world topic. We then re-reviewed the topic based on the likelihood that there were inaccuracies in the articles. We determined it was a hoax and it is no longer being shown in Trending. We’re working to make our detection of hoax and satirical stories quicker and more accurate.

But it’s not just accuracy that has taken a hit since Facebook restructured Trending. On Monday, for example, the latest news about the EpiPen appeared in the Trending module as just “Mylan,” and only in the Trending vertical dedicated to politics. An editor would also have added that topic to the science vertical, a source with knowledge of the matter told Fast Company, but the algorithm did not know to do this on its own. This also helps explain why the Trending module has been more sparsely populated: Additional curators were usually assigned to verticals like science to ensure there was enough content.

Still, the events of the past five days are unsurprising when you look at Facebook’s trajectory over the last few months, after a Gizmodo article published in May alleged that the Trending section had been burying conservative news. Though a slew of publications had written about the Kelly incident, Facebook’s own Trending fracas did not start trending until Tuesday night—and when it did, it was under the topic name “Megyn Kelly,” a misleading description that does not mention Facebook unless you click into the topic.

If you’ve been watching Facebook’s Trending topics for some time, you may have noticed something that curators had also noticed, and had complained about to the powers that be at Facebook: the increased presence of Trending spam. To fill the Trending module, curators had to draw from topics that were trending specifically on Facebook, not on the internet at large. This was not the case before Gizmodo’s story, a source told Fast Company. In fact, before the article was published, curators could introduce topics into Trending if there was major news that was not being talked about enough on Facebook or on the internet as a whole. If a story was not being discussed much, the news sources included in the topic page would reflect that.

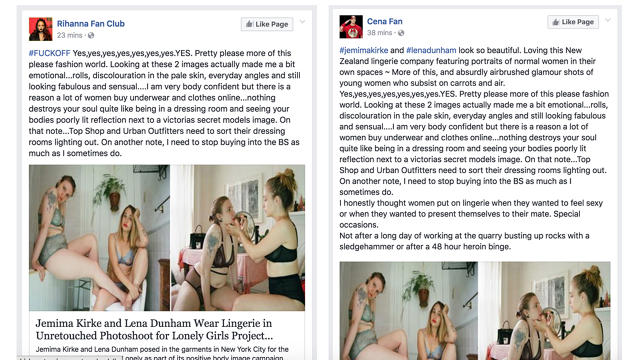

This explains why some of the topic pages include links from sites that you’ve never heard of or do not view as authoritative news sources. What it doesn’t explain is why the “public posts” section of so many Trending topics is deluged with posts from spam accounts like “Cena Fan” and “Rihanna Fan Club.”

Consider the results last week when I clicked on random trending topics related to, say, Lena Dunham or Alexa Vega. Every post I saw that appeared to be spam repeated the same text, almost verbatim, regardless of topic. And this was even before Facebook got rid of its human editors. As it turns out, the issue of spam is something the Trending curators repeatedly brought up with Facebook. The algorithms were responsible for choosing public posts for each Trending topic and additional news links; the only thing curators had a hand in was sifting through topics, writing the module topic descriptors, and putting together the story summary at the top of each topic page.

Curators, in fact, had no control over what showed up on the page once users scrolled past the image at the top. That was left to the whims and fancies of the omniscient algorithm. Any complaints about spam and questionable links fell on deaf ears, a source told us.

A Facebook representative declined to comment.

As Facebook has attempted to neutralize allegations of bias, it has doubled down on using algorithms to run the Trending section—and for what? Trending is even more muddled now than it was before, increasingly linking to irrelevant public posts. If users were not clicking on topic pages before, they certainly won’t do so now, based on just a few cryptic words and a shrinking list of Trending topics.

“To be honest, I hadn’t really clicked on too many trending topics within Facebook because I found they were usually hours or days old,” On Base Marketing CEO and former SocialTimes editor Justin Lafferty told Fast Company. “When I did, it was rare I found what I really wanted: what my friends were saying about this topic. Others I’ve talked to in the past about Facebook’s trending section reported similar issues. It just wasn’t relevant enough to be something they explore regularly.”

So what, then, is the future of Trending topics? Facebook could just ax the Trending section, rather than swallow its pride and hire back a team of editors. It’s difficult to imagine a different course of action. As has been said before, relying entirely on an algorithm does not remove humans (or bias) from the equation—algorithms are created by humans and made smarter and more effective with human input. In the case of the Trending section, the proof is in the pudding.

Fast Company
